Prompt Engineering 2.0: How to Write Prompts That Understand You (Not the Other Way Around)

The era of simple keyword prompts is over. Discover how Prompt Engineering 2.0 uses structured commands, specific personas, and strategic framing to make advanced AI models like GPT-4 and Claude 3 deliver precisely what you need.

TrendFlash

Introduction: Beyond Simple Questions to Collaborative Dialogue

Remember when interacting with AI felt like a game of chance? You'd type a question, cross your fingers, and hope the response was somewhat useful. Those days are over. With the advent of powerful models like GPT-4, Claude 3, and Gemini, we've entered the era of Prompt Engineering 2.0. This isn't about tricking the AI; it's about communicating with clarity and precision. Modern prompt engineering is the art of structuring your instructions so that the AI doesn't just hear you—it understands you. It’s the crucial skill that transforms these powerful models from quirky chatbots into indispensable partners for writing, coding, analysis, and creativity. As these tools become more integrated into our daily workflows, mastering this skill is no longer optional—it's a core component of AI literacy.

The Foundation: Core Principles of Effective Prompts

Before diving into advanced techniques, you must master the fundamentals. These core principles are the building blocks for all effective AI communication and are essential for anyone looking to leverage the best AI tools in 2025.

1. Clarity and Specificity are Non-Negotiable

Vague prompts get vague answers. The more specific you are about your desired context, outcome, length, format, and style, the better the result. Compare "Write a poem" to "Write a short, inspiring poem about renewable energy, in the style of Robert Frost, focusing on the resilience of solar panels." The second prompt gives the AI a clear target to hit, leveraging its training on specific poetic styles and themes.

2. Structure Your Prompt Deliberately

How you organize your prompt matters. A common best practice is to put the primary instruction at the beginning and use separators like ### or """ to distinguish the task from the content it needs to process. This gives the model a clear, parsable structure and reduces ambiguity about where the instructions end and the input begins.
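As a minimal sketch of this layout, the Python function below puts the instruction first and fences the material to be processed with `"""` separators. The function name `build_structured_prompt`, the `Text:` label, and the sample strings are illustrative assumptions, not a required format.

```python
def build_structured_prompt(instruction: str, content: str, separator: str = '"""') -> str:
    """Place the instruction first, then fence the input text with a separator."""
    # Strip stray separators from the content so the input cannot close the fenced block early.
    cleaned = content.replace(separator, "")
    return f"{instruction}\n\nText: {separator}\n{cleaned}\n{separator}"


prompt = build_structured_prompt(
    "Summarize the text below as a bullet point list of the most important points.",
    "The quarterly report covers revenue, hiring plans, and two new product launches.",
)
print(prompt)
```

Removing the separator from the input is one simple design choice; it keeps the boundary between task and content unambiguous even when the pasted text happens to contain `"""`.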

3. Prefer Positive Instructions

It's more effective to tell the AI what to do rather than what not to do. Instead of saying "Don't be too technical," instruct it to "Explain this in simple, accessible language for a beginner." This focuses the model on the desired behavior instead of asking it to invert a negative command, which can sometimes accidentally reinforce the very thing you're trying to avoid.

Advanced Prompt Engineering Techniques for 2025

Now, let's explore the more sophisticated methods that define Prompt Engineering 2.0. These techniques work with the way modern large language models process context to deliver noticeably more accurate and relevant results.

1. Role Prompting: Unleash Domain Expertise

What it is: Assigning the AI a specific role, persona, or expertise area to guide its responses. This technique activates the model's embedded knowledge of that domain, leading to more targeted, contextual, and specialized outputs.

Why it works: By adopting a role, the AI consistently applies the associated vocabulary, priorities, and communication style, maintaining a specific viewpoint throughout the interaction. It's a way of pre-configuring the model's "state of mind."

Example in Action:

- Less Effective: "Explain mutual funds."

- Prompt Engineering 2.0: "Act as a seasoned financial advisor with a CFA certification and 15 years of experience. Explain the benefits and risks of mutual funds to a first-time investor in a professional yet approachable tone, and provide three actionable recommendations."

This technique is powerful in settings ranging from creative writing to customer support, and it is a cornerstone of AI-powered customer service agents.
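Many chat-style APIs accept a list of messages in which a "system" message sets the persona before the user's request. The sketch below assumes that common `{"role": ..., "content": ...}` dictionary shape (used, for example, by OpenAI's Chat Completions API; some providers, such as Anthropic, pass the system prompt as a separate parameter instead) and only builds the messages without calling any API. The helper name `role_prompt_messages` is hypothetical.

```python
def role_prompt_messages(role_description: str, task: str) -> list[dict[str, str]]:
    """Build a chat message list: the persona goes in the system message, the task in the user message."""
    return [
        {"role": "system", "content": role_description},  # persona / expertise
        {"role": "user", "content": task},                # the actual request
    ]


messages = role_prompt_messages(
    "You are a seasoned financial advisor with a CFA certification and 15 years of experience. "
    "You speak in a professional yet approachable tone.",
    "Explain the benefits and risks of mutual funds to a first-time investor, "
    "and provide three actionable recommendations.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Because the full message history (including the system message) is typically resent on every turn with these stateless APIs, the persona stays in effect for the whole conversation.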

2. Few-Shot and One-Shot Prompting: Teach by Example

What it is: Providing the model with one (one-shot) or several (few-shot) examples of the task you want it to perform within the prompt itself.

Why it works: This technique, known as in-context learning, demonstrates the exact format, style, and reasoning you expect, allowing the model to pattern-match and replicate it for your specific query. It's like giving the AI a template to follow.

Example in Action (Few-Shot):

Extract keywords from the text below.

Text 1: Stripe provides APIs that web developers can use to integrate payment processing.
Keywords 1: Stripe, payment processing, APIs, web developers

Text 2: OpenAI has trained cutting-edge language models that are very good at understanding and generating text.
Keywords 2: OpenAI, language models, text processing, API

Text 3: [Your text here]
Keywords 3:

This is particularly useful for complex tasks where the desired output format is difficult to describe with instructions alone and is a key skill for developers working with machine learning in 2025.
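If you reuse the same examples across many queries, it can help to assemble the few-shot prompt programmatically. The sketch below reproduces the keyword-extraction pattern above; the function name `build_few_shot_prompt` and its parameters are hypothetical, and the numbering scheme simply mirrors the example.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, list[str]]], query: str) -> str:
    """Instruction first, then numbered worked examples, then the new text with its answer left blank."""
    lines = [instruction, ""]
    for i, (text, keywords) in enumerate(examples, start=1):
        lines.append(f"Text {i}: {text}")
        lines.append(f"Keywords {i}: {', '.join(keywords)}")
        lines.append("")  # blank line between examples keeps the pattern easy to see
    n = len(examples) + 1
    lines.append(f"Text {n}: {query}")
    lines.append(f"Keywords {n}:")  # left open so the model completes it in the same format
    return "\n".join(lines)


examples = [
    ("Stripe provides APIs that web developers can use to integrate payment processing.",
     ["Stripe", "payment processing", "APIs", "web developers"]),
    ("OpenAI has trained cutting-edge language models that are very good at understanding and generating text.",
     ["OpenAI", "language models", "text processing", "API"]),
]
prompt = build_few_shot_prompt("Extract keywords from the text below.", examples, "[Your text here]")
print(prompt)
```

Ending the prompt on an open `Keywords 3:` line invites the model to continue the pattern rather than start a new one.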

3. Chain-of-Thought (CoT) Prompting: Force Logical Reasoning

What it is: Encouraging the model to break down complex problems into a series of intermediate reasoning steps before arriving at a final answer.

Why it works: It mimics how humans solve problems and helps keep the model from jumping to an incorrect conclusion. For advanced reasoning, you can combine it with few-shot prompting by providing examples of step-by-step reasoning. For simpler tasks, a "zero-shot" approach using a phrase like "Let's think step by step" can be remarkably effective.

Example in Action:

- Standard Prompt: "If I have 8 marbles, give 3 away, and find 4 more, how many do I have?"

- CoT Prompt: "If I have 8 marbles, give 3 away, and find 4 more, how many do I have? Let's think through this step by step." The model will typically respond with something like: "You started with 8. After giving away 3, you have 5 left. Then you found 4 more, so 5 + 4 = 9 marbles."

This technique is at the heart of how modern AI models reason and is critical for complex problem-solving.
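A zero-shot chain-of-thought prompt can be as simple as appending the reasoning cue to the question. The sketch below does that and then pulls a final number out of a step-by-step answer. Treating the last integer as the answer is a simplifying assumption for illustration; a sturdier approach is to ask the model to put its result on a labeled line such as "Answer:". The function names are hypothetical.

```python
import re
from typing import Optional

# Zero-shot chain-of-thought cue, using the phrasing from the marble example.
COT_TRIGGER = "Let's think through this step by step."


def add_cot_trigger(question: str) -> str:
    """Append the reasoning cue so the model writes out intermediate steps."""
    return f"{question.strip()} {COT_TRIGGER}"


def extract_final_number(response: str) -> Optional[int]:
    """Heuristic: treat the last integer in a step-by-step answer as the final result."""
    numbers = re.findall(r"-?\d+", response)
    return int(numbers[-1]) if numbers else None


prompt = add_cot_trigger("If I have 8 marbles, give 3 away, and find 4 more, how many do I have?")
sample_response = (
    "You started with 8. After giving away 3, you have 5 left. "
    "Then you found 4 more, so 5 + 4 = 9 marbles."
)
print(prompt)
print(extract_final_number(sample_response))
```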

4. Using the Right Parameters: Temperature and Max Tokens

While not part of the prompt text itself, understanding key API parameters is a critical part of Prompt Engineering 2.0.

- Temperature: Controls the randomness of the output. A temperature of 0 makes the output nearly deterministic and highly focused (the model almost always picks its most likely next token), which is best for factual tasks like data extraction. A higher temperature (e.g., 0.7-1.0) increases variety and creativity, which is useful for storytelling or brainstorming.

- Max Tokens: Sets a hard cutoff for the length of the generated response, measured in tokens rather than words. Set this high enough to allow the model to finish its thought without being cut off prematurely, especially for long-form content.
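To see why temperature changes randomness, it helps to look at the underlying math: the model's raw scores (logits) for candidate next tokens are divided by the temperature before being turned into probabilities. The sketch below shows that idea with made-up tokens and scores; it is a conceptual illustration, and real providers may combine temperature with other sampling settings (such as top-p) in ways not shown here.

```python
import math
import random


def temperature_probs(logits: list[float], temperature: float) -> list[float]:
    """Turn raw next-token scores (logits) into probabilities at a given temperature."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: all probability goes to the top-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    # Dividing by the temperature sharpens (T < 1) or flattens (T > 1) the distribution.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    weights = [math.exp(x - peak) for x in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def sample_token(tokens: list[str], logits: list[float], temperature: float, rng: random.Random) -> str:
    """Pick one token at random, weighted by its temperature-adjusted probability."""
    probs = temperature_probs(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens = ["sunny", "cloudy", "stormy"]  # hypothetical candidate next tokens
logits = [2.0, 1.0, 0.5]                # made-up scores for illustration
for t in (0, 0.3, 1.0):
    print(t, [round(p, 3) for p in temperature_probs(logits, t)])
```

Running the loop shows the top token's share shrinking as the temperature rises, which is the "more creative, less focused" behavior described above.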

Before and After: A Prompt Transformation

Let's see how these techniques combine to transform a basic prompt into a powerful, precise instruction.

Before (Vague & Ineffective):

"Tell me about customer service."

After (Structured & Powerful):

"""
You are an experienced customer service manager. Analyze the customer email below and draft a response.

Your Goals:
- Empathize with the customer's frustration.
- Address the core issue: a delayed software update.
- Provide a concrete solution: offer a 15% discount on their next invoice.
- Do not ask for their password.

Customer Email:
[Paste email here]

Output Format: A professional email response in 3-4 short paragraphs.
"""

Techniques Used: Role Prompting (Customer Service Manager), Specificity (goals, solution), Positive Instruction (what to do), Clear Output Format
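If you write prompts like the "After" version often, a small template keeps the role, goals, delimited input, and output format in a consistent order. The sketch below rebuilds that customer-service prompt; the function `compose_prompt` and its parameter names are hypothetical, and the section order is one reasonable choice rather than a standard.

```python
def compose_prompt(role: str, task: str, goals: list[str], content_label: str,
                   content: str, output_format: str) -> str:
    """Combine role, task, goals, delimited input, and output format into one prompt."""
    sections = [
        f"{role} {task}",                                            # role + primary instruction first
        "Your Goals:\n" + "\n".join(f"- {goal}" for goal in goals),  # specific, checkable goals
        f'{content_label}:\n"""\n{content}\n"""',                    # input fenced by separators
        f"Output Format: {output_format}",                           # explicit output format
    ]
    return "\n\n".join(sections)


prompt = compose_prompt(
    role="You are an experienced customer service manager.",
    task="Analyze the customer email below and draft a response.",
    goals=[
        "Empathize with the customer's frustration.",
        "Address the core issue: a delayed software update.",
        "Provide a concrete solution: offer a 15% discount on their next invoice.",
        "Do not ask for their password.",
    ],
    content_label="Customer Email",
    content="[Paste email here]",
    output_format="A professional email response in 3-4 short paragraphs.",
)
print(prompt)
```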

Your Prompt Engineering 2.0 Cheatsheet

Download our free PDF cheatsheet summarizing these techniques.

- Be Specific: Define context, outcome, length, format, style.

- Use Structure: Instruction first, separate context with ### or """.

- Give a Role: "You are a [Role] with [X years] of experience."

- Show, Don't Tell: Use few-shot examples to demonstrate format.

- Ask for Steps: For complex problems, add "Let's think step by step."

- Control Creativity: Use a low temperature (0-0.3) for facts, higher (0.7-1.0) for ideas.

Conclusion: Mastering the Language of Collaboration

Prompt Engineering 2.0 marks a fundamental shift from hoping the AI understands to knowing how to make yourself understood. By applying these structured techniques—role-playing, providing examples, and encouraging chain-of-thought reasoning—you move from being a passive user to an active director of AI capabilities. This skill set is becoming as critical as traditional writing and communication, especially as the future of work evolves. The true power of modern AI isn't just in its answers, but in the quality of your questions and instructions. Start practicing today, and you'll unlock a new level of productivity and creativity.

Related Reading

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

AI Hallucinations Are Getting More Dangerous: How to Spot and Stop Them in 2025

AI isn't just making up facts anymore; it's creating convincing, dangerous fabrications. Discover why modern hallucinations are harder to detect and how new mitigation techniques are essential for any business using AI in 2025.

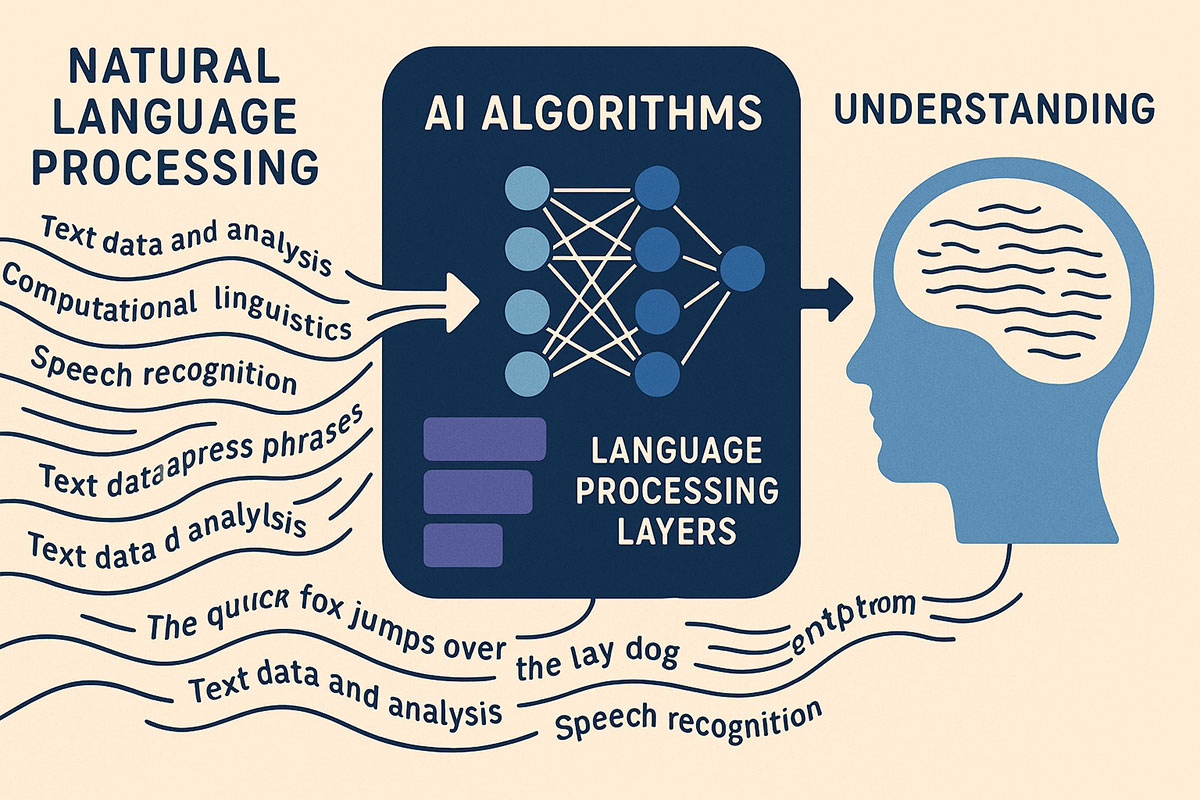

Natural Language Processing: How AI Understands Human Language in 2025

NLP technology has advanced to near-human understanding of language. This guide explains how AI processes text and speech with unprecedented accuracy in 2025.

Beyond ChatGPT: The Next Wave of NLP in 2025

In 2025, NLP is moving beyond ChatGPT. From multimodal assistants to context-aware systems, here’s the next wave of natural language technology.