Machine Learning in 2025: Zero-Shot, Few-Shot and the Quest for Data Efficiency

Discover how cutting-edge machine learning techniques are breaking the data barrier. This guide explores Zero-Shot, Few-Shot, and Active Learning—methods that are making AI more adaptable and efficient than ever in 2025.

TrendFlash

Introduction: The End of the Big Data Era?

For years, the mantra in artificial intelligence was simple: more data equals better models. However, as we move through 2025, a fundamental shift is underway. Some researchers estimate that the supply of high-quality language data for training could run out as soon as 2026, forcing the industry to rethink its approach to machine learning. Meanwhile, the cost of collecting and labeling massive datasets remains prohibitive for many applications, from medical diagnostics to personalized e-commerce.

This challenge has catalyzed a revolution in data-efficient machine learning. Techniques like Zero-Shot Learning, Few-Shot Learning, and Active Learning are moving from academic curiosities to essential business tools, enabling models to learn more effectively from limited data. These methodologies are not just optimizing resources—they're expanding AI applications into domains where data was previously too scarce or expensive to obtain. In this comprehensive guide, we'll explore how these techniques work, their real-world applications, and why they're defining the future of machine learning.

Zero-Shot Learning: Classifying the Unseen

Imagine showing a friend pictures of house cats, lions, and zebras, but never a tiger, and telling them only that a tiger is a big cat with stripes. Later, shown a tiger for the first time, they can confidently identify it from the attributes it shares with the animals they have seen: stripes like a zebra, and a feline shape like a lion. This human-like ability to recognize the unfamiliar is precisely what Zero-Shot Learning (ZSL) enables for AI systems.

Zero-shot learning allows models to correctly classify data belonging to classes they never encountered during training. It achieves this by leveraging semantic representations, such as attributes or text descriptions, that relate unseen classes to known ones. For instance, a ZSL model trained to recognize various animals can identify a 'tiger' without ever seeing one, provided it is told that a tiger has 'stripes' (like a zebra) and is a 'feline' (like a lion).

How Zero-Shot Learning Works: The Technical Magic

At its core, ZSL creates a mapping between the input space (like images or text) and a semantic attribute space. This attribute space acts as an intermediary layer that allows the model to connect what it has seen to what it hasn't:

- Attribute-Based Approaches: Objects or concepts are described using a set of attributes, allowing models to generalize to unseen classes based on shared characteristics.

- Semantic Embeddings: Techniques like word embeddings create a vector space where semantically similar concepts are located near each other, enabling models to infer relationships between known and unknown classes.

- Generative Models: Some ZSL approaches generate synthetic examples of unseen classes based on their semantic descriptions, effectively creating training data for classes the model has never encountered.
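The attribute-based approach can be sketched in a few lines. This is a minimal sketch that assumes a separately trained attribute predictor (not shown) which scores an input for each attribute; the attribute names, class signatures and scores below are made up for illustration. Classification simply picks the class whose attribute signature is closest (by cosine similarity) to the predicted attributes, which is what allows an unseen class such as "tiger" to be chosen.

```python
import math

# Hypothetical binary attribute signatures: [has_stripes, is_feline, has_hooves].
# "tiger" is an unseen class: no tiger images were used in training,
# only this human-written description of the class.
CLASS_ATTRIBUTES = {
    "zebra": [1, 0, 1],  # seen during training
    "lion":  [0, 1, 0],  # seen during training
    "horse": [0, 0, 1],  # seen during training
    "tiger": [1, 1, 0],  # unseen: described, never shown
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def zero_shot_classify(predicted_attributes, class_attributes=CLASS_ATTRIBUTES):
    """Return the class whose attribute signature best matches the attribute
    scores predicted for an input, together with all similarity scores."""
    scores = {name: cosine(predicted_attributes, signature)
              for name, signature in class_attributes.items()}
    return max(scores, key=scores.get), scores

# Assumed output of the attribute predictor (trained on seen classes only)
# for a photo of a tiger: very stripy, clearly feline, no hooves.
label, scores = zero_shot_classify([0.9, 0.8, 0.1])
print(label)  # tiger
```

Adding another unseen class only requires adding its attribute signature to the dictionary; the attribute predictor itself is not retrained.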

Real-World Applications of Zero-Shot Learning

ZSL is particularly valuable in dynamic environments where new categories constantly emerge or where comprehensive training data is impossible to obtain:

- E-commerce Product Categorization: Automatically classifying new products as they're added to inventory without retraining the model.

- Multilingual Translation: Translating between language pairs the model wasn't explicitly trained on by leveraging linguistic similarities.

- Content Moderation: Identifying new forms of inappropriate content by understanding their semantic relationship to known problematic content.

- Medical Imaging: Recognizing rare conditions by their similarity to more common ailments based on medical descriptions.

The major benefit of zero-shot learning is that a new category can be added by supplying a description of it, rather than collecting labeled examples and retraining the model, which can considerably shorten the time needed to launch new applications.

Few-Shot Learning: Mastering Tasks with Minimal Examples

If Zero-Shot Learning is about recognizing the completely unknown, Few-Shot Learning (FSL) is about rapid adaptation with minimal guidance. Few-shot learning enables models to learn new tasks or recognize new classes from just a handful of examples—sometimes as few as one to five samples per class.
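Few-shot problems are commonly framed as "N-way K-shot" episodes: N classes, K labeled examples per class (the support set), plus held-out query examples from the same classes to evaluate on. Meta-learning methods train on many such episodes. The sampler below is a minimal sketch; the dictionary-of-lists dataset format and the placeholder image names are assumptions for illustration.

```python
import random

def sample_episode(dataset, n_way, k_shot, n_query, rng=random):
    """Sample one N-way K-shot episode from a {label: [examples]} dict.

    Returns (support, query) as lists of (example, episode_label) pairs.
    Episode labels are 0..n_way-1, so a model cannot rely on memorizing
    global class identities across episodes.
    """
    eligible = [c for c, xs in dataset.items() if len(xs) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query examples")
    classes = rng.sample(eligible, n_way)
    support, query = [], []
    for episode_label, cls in enumerate(classes):
        picks = rng.sample(dataset[cls], k_shot + n_query)  # no overlap
        support += [(x, episode_label) for x in picks[:k_shot]]
        query += [(x, episode_label) for x in picks[k_shot:]]
    return support, query

# Placeholder dataset: 10 classes with 20 examples each.
dataset = {f"class_{i}": [f"img_{i}_{j}" for j in range(20)] for i in range(10)}
support, query = sample_episode(dataset, n_way=5, k_shot=1, n_query=3,
                                rng=random.Random(0))
print(len(support), len(query))  # 5 15
```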

Think of it as learning a new skill from a quick demonstration. Just as you might learn to identify rare bird species from just a few photographs, few-shot learning models can generalize from minimal data. This approach has become particularly powerful with the rise of large pre-trained models like GPT, which can often be adapted to a specialized task either by placing a few labeled examples directly in the prompt (in-context learning, with no additional training) or by fine-tuning on a small dataset.
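With large pre-trained language models, few-shot learning often takes the form of in-context learning: a handful of labeled demonstrations go into the prompt and no model weights are updated. The sketch below only assembles such a prompt; the template, instruction wording and example tweets are made up for illustration, and the call to an actual model is deliberately left out because it depends on the provider's API.

```python
def build_few_shot_prompt(examples, query, instruction):
    """Assemble a few-shot (in-context) prompt: an instruction, K labeled
    demonstrations, then the unlabeled query for the model to complete."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Tweet: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Tweet: {query}")
    lines.append("Sentiment:")  # the model's completion is the prediction
    return "\n".join(lines)

demos = [
    ("Flight delayed three hours and no updates at the gate.", "negative"),
    ("Crew was fantastic and we landed early!", "positive"),
    ("Boarding started at 7:40 as scheduled.", "neutral"),
]
prompt = build_few_shot_prompt(
    demos,
    "Lost my bag again, second time this month.",
    "Classify the sentiment of each airline tweet as positive, negative or neutral.",
)
print(prompt)
```

Swapping in different demonstrations changes the task without touching the model, which is why this style of few-shot learning is so quick to iterate on.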

The Mechanics of Few-Shot Learning

Few-shot learning draws on several families of techniques:

- Meta-Learning (Learning to Learn): Models are trained on a variety of learning tasks so they develop the ability to quickly adapt to new tasks with limited data. The model essentially learns the process of learning itself.

- Model-Agnostic Meta-Learning (MAML): This meta-learning algorithm learns a shared initialization of the model parameters from which a few gradient steps on a few examples of a new task are enough to reach good performance.

- Prototypical Networks: This approach represents each class by a prototype, the mean of the embeddings of its few examples, then classifies new samples based on their distance to these prototypes in embedding space.

- Metric Learning: Techniques that learn a distance function between data points, enabling comparisons between new examples and the limited labeled examples available.
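The prototypical-network classification step can be sketched as follows. This is a minimal sketch that assumes the embedding network has already been trained: `embed` is a hypothetical placeholder that returns the raw features, the 2-D feature values and defect labels are invented, and squared Euclidean distance is used as the metric, a common choice for this method.

```python
import math
from collections import defaultdict

def embed(x):
    # Placeholder encoder. In a real prototypical network this is a neural
    # network trained on many episodes; here the features are used as-is.
    return list(x)

def compute_prototypes(support):
    """support: list of (features, label) pairs -> {label: mean embedding}."""
    sums, counts = {}, defaultdict(int)
    for x, y in support:
        z = embed(x)
        if y not in sums:
            sums[y] = [0.0] * len(z)
        sums[y] = [s + v for s, v in zip(sums[y], z)]
        counts[y] += 1
    return {y: [s / counts[y] for s in total] for y, total in sums.items()}

def squared_distance(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))

def classify(query, prototypes):
    """Nearest prototype wins; a softmax over negative distances gives probabilities."""
    z = embed(query)
    dists = {y: squared_distance(z, p) for y, p in prototypes.items()}
    shift = min(dists.values())  # subtract the minimum for numerical stability
    weights = {y: math.exp(-(d - shift)) for y, d in dists.items()}
    total = sum(weights.values())
    probs = {y: w / total for y, w in weights.items()}
    return min(dists, key=dists.get), probs

# A 2-way 3-shot episode with made-up 2-D features for two defect types.
support = [
    ((0.9, 0.1), "scratch"), ((1.0, 0.2), "scratch"), ((0.8, 0.0), "scratch"),
    ((0.1, 0.9), "dent"),    ((0.2, 1.0), "dent"),    ((0.0, 0.8), "dent"),
]
protos = compute_prototypes(support)
label, probs = classify((0.7, 0.3), protos)
print(label)  # scratch
```

Because a new class only needs a prototype, adding a third defect type means averaging the embeddings of its few examples; nothing is retrained.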

Few-Shot Learning in Action: Practical Applications

The applications of FSL are particularly valuable in domains where data is inherently scarce or expensive to label:

- Medical Diagnosis: Classifying rare diseases from a small number of medical images, such as identifying COVID-19 patterns from limited chest X-rays.

- Personalized E-commerce: Tailoring product recommendations for niche customer segments with minimal historical data.

- Sentiment Analysis: Quickly adapting to analyze customer feedback for new product categories or in different languages with few labeled examples.

- Industrial Quality Control: Identifying new types of manufacturing defects from just a few examples of faulty products.

In one reported example, few-shot learning reached 97% accuracy on airline tweet classification using only a small number of training examples, suggesting the approach can be practical even with minimal data.

Active Learning: Smart Data Selection for Maximum Impact

While Zero-Shot and Few-Shot Learning focus on learning from limited data, Active Learning takes a different approach: it optimizes which data gets labeled in the first place. Active learning is an iterative technique that prioritizes labeling the most informative examples from an unlabeled dataset, dramatically reducing annotation efforts while maximizing model improvement.

Think of it as a smart student who knows which questions to ask their teacher. Instead of passively studying everything, they identify the concepts they find most confusing and seek clarification specifically on those areas. Similarly, an active learning system selectively queries the most valuable data points for labeling, focusing human annotation efforts where they'll have the greatest impact on model performance.

How Active Learning Works: The Iterative Process

The active learning process typically follows this cycle:

- The model is initially trained on a small labeled dataset.

- The model evaluates a large pool of unlabeled data and selects the most 'informative' examples (typically the most uncertain or ambiguous cases).

- These selected examples are sent for human annotation.

- The model is retrained with the newly labeled data.

- The process repeats until performance targets are met or the labeling budget is exhausted.
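The cycle above can be sketched end to end on a toy problem. This is a minimal sketch under stated assumptions: the "model" is a one-dimensional threshold classifier, `oracle` is a hypothetical stand-in for a human annotator, and the selection rule is uncertainty sampling (query the unlabeled point closest to the current decision boundary). The numbered comments map to the steps in the list.

```python
def oracle(x):
    # Hypothetical stand-in for the human annotator; hidden true rule: x >= 0.615.
    return int(x >= 0.615)

def train(labeled):
    """Fit a 1-D threshold classifier (predict 1 if x >= threshold): the
    midpoint between the largest negative and the smallest positive label."""
    neg = [x for x, y in labeled if y == 0]
    pos = [x for x, y in labeled if y == 1]
    lo = max(neg) if neg else 0.0
    hi = min(pos) if pos else 1.0
    return (lo + hi) / 2

def most_uncertain(pool, threshold):
    # Uncertainty sampling: the unlabeled point closest to the decision boundary.
    return min(pool, key=lambda x: abs(x - threshold))

def active_learning(pool, seed, budget):
    labeled = [(x, oracle(x)) for x in seed]   # 1. small initial labeled set
    pool = [x for x in pool if x not in seed]
    threshold = train(labeled)
    for _ in range(budget):                    # 5. stop when the budget is spent
        if not pool:
            break
        x = most_uncertain(pool, threshold)    # 2. pick the most informative point
        pool.remove(x)
        labeled.append((x, oracle(x)))         # 3. send it for annotation
        threshold = train(labeled)             # 4. retrain on the enlarged set
    return threshold, labeled

pool = [i / 100 for i in range(101)]
threshold, labeled = active_learning(pool, seed=[0.0, 1.0], budget=10)
print(round(threshold, 3), len(labeled))  # 0.615 12
```

On this toy pool of 101 points, 12 labels (2 seed plus 10 queried) pin the threshold down to the gap between 0.61 and 0.62, whereas labeling the whole pool would take 101. Real models and data are far less tidy, so this illustrates the mechanics rather than the size of the savings.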

The selection step is driven by an acquisition function, which scores each unlabeled sample by how much labeling it is expected to improve the model, so that each round of labeling goes where it is likely to help most.
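Three common acquisition functions for classifiers (least confidence, margin, and entropy) can be written directly over a model's predicted class probabilities. A minimal sketch; the probability vectors are made up, and in practice they would come from the current model's predictions on the unlabeled pool.

```python
import math

def least_confidence(probs):
    # 1 - probability of the most likely class; higher = more uncertain.
    return 1.0 - max(probs)

def margin(probs):
    # Gap between the top two classes; a SMALL gap means high uncertainty,
    # so it is negated to keep "higher score = more informative".
    top1, top2 = sorted(probs, reverse=True)[:2]
    return -(top1 - top2)

def entropy(probs):
    # Shannon entropy of the predicted distribution (natural log).
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_batch(pool_probs, k, acquisition=entropy):
    """Return the indices of the k pool items with the highest acquisition score."""
    ranked = sorted(range(len(pool_probs)),
                    key=lambda i: acquisition(pool_probs[i]), reverse=True)
    return ranked[:k]

pool_probs = [
    [0.98, 0.01, 0.01],  # confident
    [0.40, 0.35, 0.25],  # probability spread over all three classes
    [0.70, 0.20, 0.10],
    [0.50, 0.49, 0.01],  # torn between two classes
]
print(select_batch(pool_probs, 2))                      # [1, 2]
print(select_batch(pool_probs, 2, acquisition=margin))  # [3, 1]
```

The functions can disagree: entropy ranks item 1 first because its probability is spread over three classes, while margin puts item 3 first because it is split almost evenly between two.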

Real-World Benefits and Applications of Active Learning

The practical advantages of active learning are substantial, particularly for businesses with limited labeling resources:

- Reduced Labeling Costs: By focusing on the most informative samples, active learning can substantially reduce labeling volumes; reductions of 50-80% with performance similar to models trained on fully labeled datasets are sometimes cited, though results vary by task.

- Improved Model Accuracy: Concentrating on challenging edge cases helps models refine their decision boundaries where it matters most, which can yield better performance on difficult examples than training on randomly selected data.

- Faster Model Development: The reduced labeling burden accelerates the model development lifecycle, getting AI solutions to production faster.

These benefits make active learning invaluable across numerous applications:

- Image Recognition: Vision models can more efficiently classify specific features by focusing labeling efforts on ambiguous or difficult images.

- Natural Language Processing: Reducing the annotation bottleneck for text classification, sentiment analysis, and entity recognition tasks.

- Binary Classification: Pinning down the decision boundary with far fewer labels for tasks like customer churn prediction or fraud detection.

- Autonomous Vehicles: Prioritizing the labeling of rare but critical driving scenarios that are most valuable for safety.

Comparative Analysis: Choosing the Right Approach

With three powerful techniques available, how do you choose the right one for your specific machine learning challenge? The table below provides a clear comparison of their strengths, limitations, and ideal use cases:

| Technique | Strengths | Limitations | Ideal Use Cases |
|---|---|---|---|
| Active Learning | Reduced labeling costs, highly efficient with unlabeled data | Requires access to large unlabeled datasets and an iterative labeling process | Image classification, NLP tasks, binary classification |
| Zero-Shot Learning | Generalization to unseen classes, no task-specific labeled data needed | Performance depends heavily on the quality of semantic features and attributes | Object recognition, text classification, expanding to new categories |
| Few-Shot Learning | Rapid adaptation with minimal data, reduced data dependency | Usually relies on meta-learning or a strong pre-trained model; can struggle with highly complex tasks | Medical imaging, sentiment analysis, personalized recommendations |

The Business Impact: Why These Methods Matter in 2025

The shift toward data-efficient machine learning isn't just a technical curiosity; it is delivering tangible business value across industries. As the Stanford 2025 AI Index Report highlights, AI is increasingly embedded in everyday life, and performance on demanding benchmarks continues to improve. Efficiency-focused approaches like these contribute to that expansion.

Driving Down Costs and Expanding Applications

Data-efficient methods directly address two critical business constraints: budget and data availability. By reducing dependency on massive labeled datasets, they make AI accessible to organizations without the resources of tech giants. This democratization is particularly crucial as the market for AI training data grows from approximately $2.5 billion to an expected $30 billion within a decade—a price tag that some argue only 'big tech' can afford.
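Taking those two market figures at face value, the implied compound annual growth rate (CAGR) over ten years works out to roughly 28% a year:

$$
\text{CAGR} = \left(\frac{30}{2.5}\right)^{1/10} - 1 = 12^{1/10} - 1 \approx 0.28
$$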

Meanwhile, these techniques are expanding AI into previously inaccessible domains. In healthcare, few-shot learning enables diagnostics with limited patient data. In retail, zero-shot learning allows for rapid adaptation to new product categories. In cybersecurity, active learning helps prioritize the investigation of the most suspicious activities when human analyst time is limited.

Enhancing Precision and Efficiency

Beyond cost savings, these methods can also yield more robust and precise models. Active learning, for instance, concentrates labeling on ambiguous or edge-case scenarios, which can produce models that handle challenging real-world data better than those trained on randomly selected examples.

The business case is clear: whether you're a startup with limited data resources or an enterprise looking to optimize AI spending, these efficient learning techniques offer a path to higher performance with lower investment.

Future Trends and Developments

The trajectory of data-efficient machine learning points toward even more powerful and accessible techniques in the near future. Several key trends are worth watching:

- Hybrid Approaches: Combining multiple techniques—such as using active learning to select the most valuable examples for few-shot learning—will likely yield even greater efficiencies.

- Improved Foundation Models: As large pre-trained models become more capable, their zero-shot and few-shot performance will continue to improve, making these approaches viable for increasingly complex tasks.

- Automated Machine Learning (AutoML) Integration: Data-efficient methods are increasingly being incorporated into AutoML pipelines, making them accessible to non-experts.

- Cross-Modal Transfer: Techniques that leverage knowledge across different data types (e.g., using text to understand images) will enhance zero-shot capabilities.

As research continues, we can expect these methods to become standard tools in the machine learning toolkit, fundamentally changing how we approach AI development and deployment.

Conclusion

The era of brute-force machine learning—throwing increasingly massive datasets at increasingly large models—is giving way to a more nuanced and efficient approach. Zero-Shot Learning, Few-Shot Learning, and Active Learning represent a fundamental shift toward smarter, more adaptable AI systems that can achieve remarkable performance with limited data.

As we navigate 2025 and beyond, these techniques will become increasingly essential for businesses looking to leverage AI without the prohibitive costs of data collection and labeling. Whether you're building customer service chatbots, medical diagnostic tools, or recommendation systems, understanding and applying these data-efficient methods will be crucial for staying competitive in the rapidly evolving AI landscape.

The future of machine learning isn't just about building bigger models—it's about building smarter, more efficient learning processes. And that future is already here.

Related Reading

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

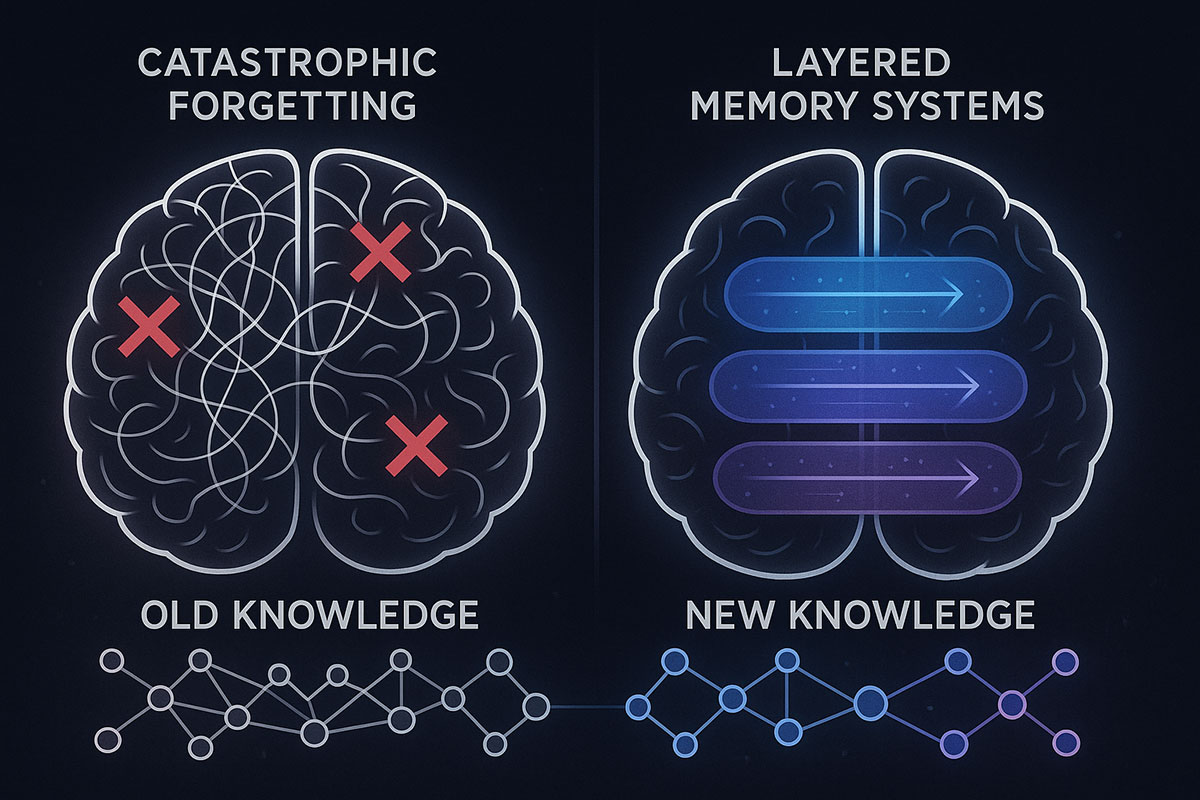

Google's HOPE Model: AI That Finally Learns Continuously (Catastrophic Forgetting Solved)

Google just unveiled HOPE, a self-modifying AI architecture that solves catastrophic forgetting—the fundamental problem preventing AI from learning continuously. For the first time, AI can absorb new knowledge without erasing what it already knows. Here's why this changes everything.

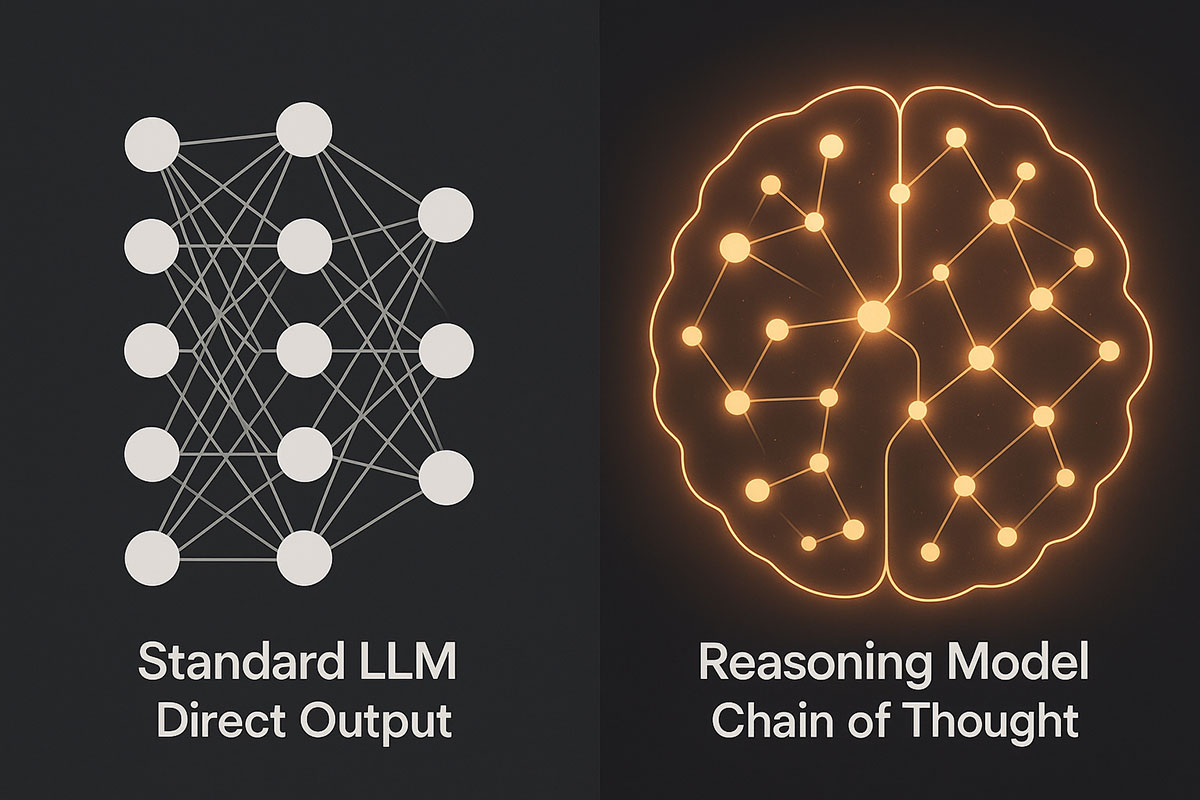

AI Reasoning Models Explained: OpenAI O1 vs DeepSeek V3.2 - The Next Leap Beyond Standard LLMs (November 2025)

Reasoning models represent a fundamental shift in AI architecture. Unlike standard language models that generate answers instantly, these systems deliberately "think" through problems step-by-step, achieving breakthrough performance in mathematics, coding, and scientific reasoning. Discover how O1 and DeepSeek V3.2 are redefining what AI can accomplish.

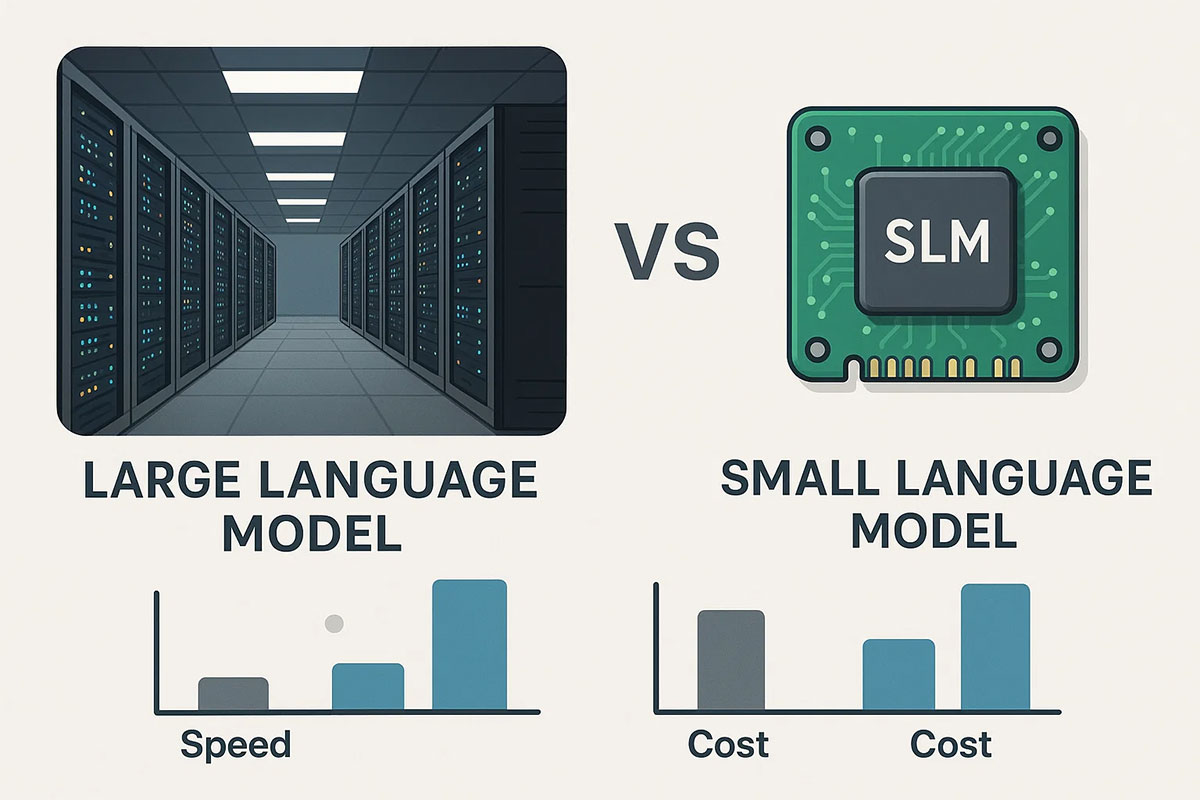

Why Smaller AI Models (SLMs) Will Dominate Over Large Language Models in 2025: The On-Device AI Revolution

The AI landscape is shifting from "bigger is better" to "right-sized is smarter." Small Language Models (SLMs) are delivering superior business outcomes compared to massive LLMs through dramatic cost reductions, faster inference, on-device privacy, and domain-specific accuracy. This 2025 guide explores why SLMs represent the future of enterprise AI.