Why Smaller AI Models (SLMs) Will Dominate Over Large Language Models in 2025: The On-Device AI Revolution

The AI landscape is shifting from "bigger is better" to "right-sized is smarter." Small Language Models (SLMs) are delivering superior business outcomes compared to massive LLMs through dramatic cost reductions, faster inference, on-device privacy, and domain-specific accuracy. This 2025 guide explores why SLMs represent the future of enterprise AI.

TrendFlash

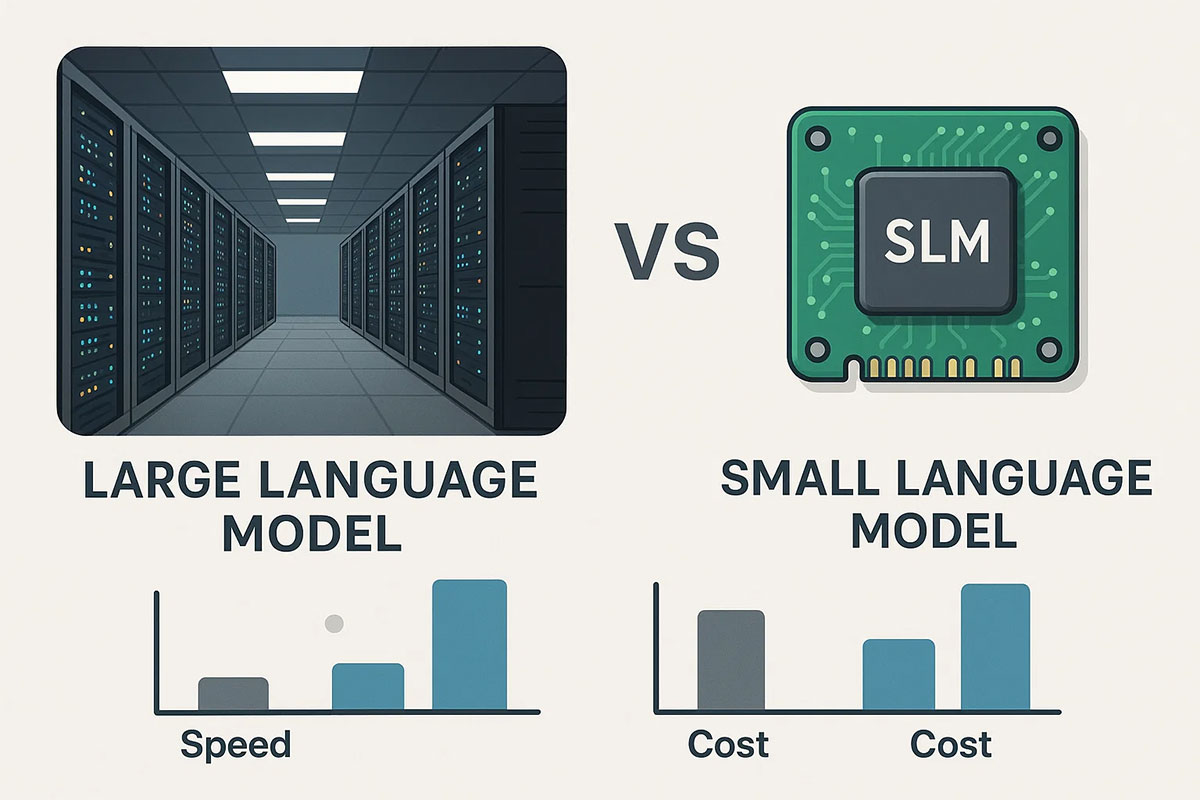

The conventional wisdom in artificial intelligence has long held that bigger models perform better. But 2025 marks a fundamental shift in this paradigm. Small Language Models (SLMs), models with billions of parameters rather than hundreds of billions, are increasingly outperforming their massive cousins in real-world enterprise deployments on the dimensions that matter most: speed, accuracy, cost, and privacy.

This isn't a niche trend. According to Gartner's 2025 AI Adoption Survey, 68% of enterprises that deployed SLMs reported improved model accuracy and faster return on investment compared to those relying on general-purpose large language models. The market underscores this shift: the global SLM market, valued at USD 0.93 billion in 2025, is projected to reach USD 5.45 billion by 2032, representing a 28.7% compound annual growth rate. The fundamental calculation has changed—organizations increasingly understand that a 7-billion-parameter model fine-tuned for their specific domain often delivers superior results compared to a 175-billion-parameter general-purpose model.
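The growth figures quoted above can be sanity-checked with the standard compound-annual-growth-rate formula. This is a minimal sketch. It only re-derives the rate from the two quoted market values and does not validate the underlying forecast.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures from the text: USD 0.93B in 2025, USD 5.45B projected for 2032.
years = 2032 - 2025
implied = cagr(0.93, 5.45, years)
print(f"implied CAGR: {implied:.1%}")
print(f"USD 0.93B at 28.7% for {years} years: USD {project(0.93, 0.287, years):.2f}B")
```

The implied rate comes out at roughly 28.7%, so the three quoted numbers are consistent with one another.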

The Cost Revolution: From Expensive to Accessible

The most immediately compelling argument for SLMs involves cost. Large Language Models like GPT-4 cost $10-30 per 1 million tokens for inference (the actual usage of the model, not training). These costs accumulate quickly at scale. An enterprise running 100 daily active chatbots, each consuming 50,000 tokens daily, processes about 150 million tokens a month and faces monthly inference costs of roughly $1,500 to $4,500 with GPT-4, depending on where its usage falls in that price range.
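The chatbot estimate is straightforward arithmetic. The sketch below assumes a 30-day month and a single blended per-token price (real APIs usually price input and output tokens separately). With the $10-30 range quoted above, 150 million tokens a month lands between $1,500 and $4,500.

```python
def monthly_inference_cost(bots: int, tokens_per_bot_per_day: int,
                           usd_per_million_tokens: float, days: int = 30) -> float:
    """Monthly spend for a fleet of chatbots billed per token (blended rate assumed)."""
    monthly_tokens = bots * tokens_per_bot_per_day * days
    return monthly_tokens / 1_000_000 * usd_per_million_tokens


# Scenario from the text: 100 chatbots x 50,000 tokens/day at $10-30 per 1M tokens.
low = monthly_inference_cost(100, 50_000, 10.0)
high = monthly_inference_cost(100, 50_000, 30.0)
print(f"monthly cost: ${low:,.0f} to ${high:,.0f}")
```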

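By contrast, SLMs and other efficiency-focused models deliver comparable or superior results on many tasks at a fraction of the cost. DeepSeek's pricing demonstrates the gap vividly: processing 1 million input tokens plus 1 million output tokens costs just $0.70 in total, while the same 2 million tokens at GPT-4's $10-30 per million would cost $20-60. That is a reduction of more than 95% for inference alone, although DeepSeek's flagship models are large mixture-of-experts designs rather than SLMs in the strict sense.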

Mistral 7B, one of the most respected open-weight SLMs, outperforms Llama 2 13B, a model nearly twice its size, on every benchmark reported in its release, while requiring less compute to run. Microsoft's Phi-4 family and Google's Gemma models show the same pattern of strong results at modest size. These dynamics transform the economics of AI deployment: what was previously viable only for large, well-capitalized organizations becomes accessible to smaller companies and individual developers.

The cost advantage extends beyond inference. Fine-tuning an SLM on proprietary data typically costs 40-70% less than fine-tuning an LLM to comparable capability, so organizations can customize models for specific domains at manageable cost. A supply chain optimization firm recently reduced model response latency by 47% and cut cloud inference costs by over 50% by switching from a general LLM to an SLM fine-tuned on logistics workflows.

Speed: Response Times That Enable Real-Time Applications

Enterprise applications frequently demand rapid responses. Customer service chatbots need sub-second response times to feel natural. Real-time fraud detection requires decision-making in milliseconds. Autonomous systems need immediate perception and action cycles. SLMs excel at these time-critical applications.

According to Microsoft's benchmarking, SLMs can respond up to 5x faster than larger LLMs. The speed advantage comes from size rather than a fundamentally simpler architecture: fewer parameters mean fewer computations, and less weight data to move through memory, for each generated token. On an A100 GPU, Mistral 7B achieves approximately 30 tokens per second, while larger models generate fewer tokens per second on the same hardware.
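One common back-of-the-envelope explanation for the speed gap is that single-stream text generation is usually limited by memory bandwidth, because every new token requires reading all model weights. The sketch below turns that into an upper bound on tokens per second. The roughly 2,000 GB/s bandwidth figure for an A100 80GB and the fp16 weights are assumptions, and real throughput (such as the ~30 tokens/s cited above) sits below this ceiling because of KV-cache reads, kernel overheads, and serving-stack effects. The 70B row is hypothetical, since 140 GB of fp16 weights would not fit on a single 80 GB card.

```python
def decode_tokens_per_second_upper_bound(params_billion: float,
                                         bytes_per_param: float,
                                         bandwidth_gb_per_s: float) -> float:
    """Rough ceiling for single-stream decoding when generation is memory-bound.

    Assumes each generated token streams every weight from GPU memory once and
    ignores KV-cache reads, kernel overheads, and batching.
    """
    weight_gb = params_billion * bytes_per_param  # billions of params x bytes = GB
    return bandwidth_gb_per_s / weight_gb


A100_BANDWIDTH_GB_S = 2000.0  # assumption: approximate A100 80GB HBM bandwidth

for name, size_b in [("7B SLM", 7.0), ("70B LLM", 70.0)]:
    ceiling = decode_tokens_per_second_upper_bound(size_b, 2.0, A100_BANDWIDTH_GB_S)
    print(f"{name} @ fp16: at most ~{ceiling:.0f} tokens/s per stream")
```

The ratio is the point: a model with 10x the parameters has roughly a 10x lower ceiling on the same hardware.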

Phi-4-mini-flash-reasoning pushes the efficiency frontier further. This 3.8-billion-parameter model achieves 1,955 tokens per second throughput on Intel Xeon 6 processors, enabling real-time inference without a GPU. When processing long sequences (a 2K-token prompt with 32K tokens of generation), Microsoft reports up to 10x higher throughput than its predecessor, Phi-4-mini-reasoning, with latency growing near-linearly with generation length rather than the roughly quadratic growth of the predecessor.
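To see why near-linear latency growth matters at 32K generated tokens, it helps to count attention work. The toy model below counts query-key interactions during generation. With standard full attention each new token attends to every earlier position, so total work grows quadratically with output length, whereas a mechanism with bounded per-token cost grows linearly. Sliding-window attention is used here purely as a generic example of bounded cost. This is not a model of Phi-4-mini-flash-reasoning's actual hybrid architecture, and the 2,048-token window is an assumption.

```python
def full_attention_work(prompt_len: int, gen_len: int) -> int:
    """Query-key interactions when every generated token attends to all earlier positions."""
    # The token at 0-based position p attends to p + 1 positions (itself included).
    return sum(p + 1 for p in range(prompt_len, prompt_len + gen_len))


def windowed_attention_work(prompt_len: int, gen_len: int, window: int) -> int:
    """Same count when each token attends to at most `window` recent positions."""
    return sum(min(p + 1, window) for p in range(prompt_len, prompt_len + gen_len))


PROMPT = 2_048  # 2K-token prompt, as in the text
WINDOW = 2_048  # hypothetical window size

for gen in (8_192, 16_384, 32_768):
    full = full_attention_work(PROMPT, gen)
    windowed = windowed_attention_work(PROMPT, gen, WINDOW)
    print(f"gen={gen:>6}: full={full:>13,}  windowed={windowed:>11,}")
```

Doubling the generated length exactly doubles the windowed count but more than triples the full-attention count once generation dominates the prompt.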

This speed advantage enables entirely new use cases. Personal AI assistants that operate entirely on-device become practical with SLMs—no cloud connectivity required, no waiting for responses from distant servers. Mobile applications gain sophisticated reasoning capabilities without constant cloud communication. Industrial systems achieve real-time decision-making at the edge of the network, closest to where data originates.

Privacy: Keeping Sensitive Data Local

The privacy implications of SLMs deserve particular emphasis. When data travels to cloud-based LLMs for processing, it becomes subject to data residency regulations, privacy agreements with cloud providers, and potential security vulnerabilities inherent in network transmission. Organizations handling sensitive information—healthcare providers managing patient records, financial institutions processing transaction data, governments handling classified information—face serious compliance challenges with cloud-dependent models.

SLMs enable on-premises and on-device processing where data never leaves the organization's infrastructure. A healthcare organization can deploy a Phi-4 model on local servers to analyze medical records, with patient data remaining entirely within the institution's secure environment. A financial services firm can run risk assessment models on edge devices at branch locations, keeping customer financial data local. A government agency can perform classification tasks on air-gapped systems using SLMs, eliminating cloud dependency entirely.

This privacy advantage directly addresses regulatory requirements. GDPR's data-minimisation principle and its restrictions on transfers outside the European Economic Area make every third-party processor a compliance consideration. HIPAA requires healthcare organizations to maintain strict control over protected health information, including contractual safeguards with any vendor that handles it. The CCPA and emerging privacy regulations increasingly restrict how personal data is shared. Running SLMs locally lets organizations meet these obligations while maintaining advanced AI capabilities.

The practical implication: Gartner has forecast that by 2025, 75% of enterprise-generated data will be created and processed outside traditional centralized data centers or the cloud. SLMs are a key enabling technology for this transition. Unlike massive models requiring cloud-scale infrastructure, SLMs deploy on edge servers, industrial IoT devices, and even consumer devices, preserving data privacy through local processing.

Accuracy: Domain-Specific Excellence

The prevailing assumption suggests that larger models necessarily outperform smaller ones. Yet empirical evidence increasingly contradicts this. When fine-tuned on domain-specific data, SLMs frequently outperform significantly larger general-purpose models on specialized tasks.

IBM Watson research reported that enterprises using SLMs in regulated sectors experienced 35% fewer critical AI output errors than organizations relying on general-purpose LLMs. This isn't because the SLM is more intelligent in an absolute sense, but because it has been optimized through training and fine-tuning on the specific domain where it will operate.

Mistral 7B exemplifies this dynamic. Although far smaller than GPT-3.5, it scores around 60% on MMLU, trailing GPT-3.5's roughly 70% but strong for its size, and it outperforms significantly larger models in specific domains where it has received specialized training. Similarly, Microsoft's 14-billion-parameter Phi-4 scores over 80% on the MATH benchmark, outperforming models with 50x more parameters when evaluated on mathematical reasoning tasks specifically.

The practical implication is profound: organizations should select models based on the specific tasks they need solved, not purely on parameter count. A retail company optimizing inventory forecasting might deploy a Mistral 7B fine-tuned on retail transaction patterns, achieving better results than a generic LLM while reducing costs and latency. A manufacturing firm predicting equipment failures might deploy Phi-4 fine-tuned on industrial sensor data, obtaining domain-specific accuracy while maintaining the computational efficiency necessary for real-time edge deployment.

Deployability: From Cloud-Only to Everywhere

Large Language Models exist primarily as cloud services. GPT-4's weights are not publicly available at all, and few organizations have the infrastructure to run comparably large open models locally. This creates vendor lock-in: organizations become dependent on cloud providers for their AI capabilities, subject to their pricing, API terms, and service availability.

SLMs, by contrast, deploy virtually anywhere: cloud environments for organizations prioritizing scalability, edge servers for local processing and privacy, consumer devices for offline-capable applications, and embedded systems for IoT and robotics. The same SLM can run at full precision on an NVIDIA A100 GPU in a data center and, in a quantized build, on a smartphone's processor, with the deployment matched to the available hardware.

This deployability advantage extends to open-weight models. Mistral 7B, Phi-4, TinyLlama, and others are published as open weights under permissive licenses (Apache 2.0 for Mistral 7B and TinyLlama, MIT for Phi-4), so organizations can download and deploy them with few restrictions. This contrasts with LLMs such as GPT-4, which are available only through cloud APIs under specific terms of service. Organizations can fine-tune open-weight SLMs on proprietary data, maintain complete control over the customization process, and deploy with confidence that their models won't be affected by provider pricing changes or API discontinuations.

For organizations with stricter security requirements, on-premises deployment of SLMs offers full control over data. Healthcare systems, government agencies, and enterprises handling sensitive information can maintain full custody of their AI models and data, eliminating cloud dependency entirely.

Real-World Implementation: SLM Success Stories

The theoretical advantages of SLMs translate into concrete business results. An enterprise SaaS provider increased internal chatbot usage by 62% within three months of replacing a generic LLM with an SLM trained on internal support tickets and onboarding FAQs. The SLM's responses aligned better with the company's procedures and terminology, generated fewer nonsensical outputs, and responded faster—factors that together dramatically increased user adoption.

A supply chain optimization firm cut cloud inference costs by over 50% by switching from API-based LLM inference to an on-premises SLM fine-tuned on logistics workflows. Beyond cost savings, local processing reduced latency by 47%, enabling real-time inventory recommendations that network delays had made impractical with cloud-dependent LLMs.

A financial services firm deployed SLMs for regulatory compliance checking across transaction data. The SLM achieved superior accuracy compared to general LLMs because it learned patterns specific to the institution's regulatory framework and past compliance incidents. The on-premises deployment ensured customer financial data never reached external cloud providers, meeting regulatory requirements while enabling sophisticated AI analysis.

SLMs vs. LLMs: When Each Model Type Excels

This isn't an argument that SLMs should replace LLMs universally. Different model sizes excel at different tasks. The optimal strategy involves understanding which problems SLMs solve best and where LLMs retain advantages.

SLMs excel at:

- Specialized domain tasks where focused training on relevant data improves accuracy

- Time-critical applications requiring real-time responses (fraud detection, reactive systems)

- Privacy-sensitive scenarios where data must remain on-premises or on-device

- Cost-sensitive applications at large scale (high-volume chatbots, distributed systems)

- Edge and on-device deployment scenarios with limited computational resources

- Regulatory compliance scenarios requiring explainability and control

LLMs retain advantages for:

- Generalist tasks where broad knowledge across domains is essential

- Complex creative work requiring deep reasoning across multiple domains

- Scenarios where state-of-the-art performance on diverse benchmarks is required

- Research and exploration where the model must handle unexpected inputs gracefully

The most effective enterprise AI strategy often involves both model types. Large, capable models handle exploratory tasks, research, and complex reasoning requiring broad knowledge. Smaller, specialized models handle production workloads where specific performance, cost, and deployment requirements matter most.
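A hybrid setup is often implemented as a thin routing layer in front of both models. The sketch below is hypothetical: the task names, the latency figure, and the rule ordering are illustrative assumptions, not a reference design. Privacy is checked first so that sensitive requests never leave local infrastructure, then validated SLM tasks, then latency budgets. Everything else falls through to the LLM.

```python
from dataclasses import dataclass


@dataclass
class Request:
    task: str            # e.g. "support_faq" or "open_research" (hypothetical labels)
    contains_pii: bool   # must the data stay on local infrastructure?
    max_latency_ms: int  # latency budget set by the calling application


# Hypothetical tasks on which a fine-tuned SLM has already been validated.
SLM_VALIDATED_TASKS = {"support_faq", "invoice_extraction", "fraud_triage"}
LLM_TYPICAL_LATENCY_MS = 1_200  # assumed cloud LLM round-trip time


def route(req: Request) -> str:
    """Return "slm" or "llm" for a single request."""
    if req.contains_pii:
        return "slm"  # sensitive data is processed locally only
    if req.task in SLM_VALIDATED_TASKS:
        return "slm"  # well-defined production workload
    if req.max_latency_ms < LLM_TYPICAL_LATENCY_MS:
        return "slm"  # the cloud LLM cannot meet this budget
    return "llm"      # open-ended work that benefits from broad knowledge


print(route(Request("support_faq", contains_pii=False, max_latency_ms=5_000)))
print(route(Request("open_research", contains_pii=False, max_latency_ms=5_000)))
```

In practice, a request that is both sensitive and outside the SLM's validated tasks might be better rejected or escalated to a human than answered by a model that was never evaluated on it.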

Implementation Strategy: Transitioning from LLMs to SLMs

Organizations considering a shift toward SLMs should approach the transition methodically. Begin by auditing current LLM usage patterns. Which tasks consume the most tokens and therefore the most cost? Which could benefit from domain-specific optimization? Which have time-sensitivity requirements that could be improved by faster inference?
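The audit step is mostly log aggregation. A minimal sketch, assuming usage logs that record a task label plus input and output token counts per call. The log rows and the $10/$30 per-million input/output prices are made-up placeholders, so substitute your own provider's logs and rates.

```python
from collections import defaultdict

# Hypothetical usage log rows: (task, input_tokens, output_tokens).
usage_log = [
    ("support_chat", 1_200, 300),
    ("support_chat", 900, 250),
    ("contract_review", 8_000, 1_500),
    ("code_assist", 2_000, 800),
    ("support_chat", 1_100, 400),
]


def cost_by_task(rows, usd_per_m_input: float, usd_per_m_output: float):
    """Aggregate token spend per task; return (task, usd) pairs, most expensive first."""
    totals = defaultdict(float)
    for task, tokens_in, tokens_out in rows:
        totals[task] += tokens_in / 1e6 * usd_per_m_input + tokens_out / 1e6 * usd_per_m_output
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Assumed prices for illustration only.
for task, usd in cost_by_task(usage_log, usd_per_m_input=10.0, usd_per_m_output=30.0):
    print(f"{task:16s} ${usd:.4f}")
```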

The second step involves pilot deployment. Rather than immediately replacing all LLM usage, deploy an SLM on a specific high-value, well-defined task. Measure results carefully—accuracy metrics, latency, cost, and user satisfaction. This pilot provides concrete data about whether SLMs deliver the expected benefits for your organization.
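For the pilot, it helps to compute the same small set of metrics for the incumbent LLM and the candidate SLM on identical traffic. The sketch below uses nearest-rank percentiles for latency (tail latency such as p95 usually matters more to users than the average), per-request correctness flags, and total cost. All sample numbers are hypothetical, and a real pilot needs far more than ten requests.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: list[float], correct: list[bool], total_cost_usd: float) -> dict:
    """Latency, accuracy, and unit-cost summary for one model in the pilot."""
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "accuracy": sum(correct) / len(correct),
        "cost_per_request_usd": total_cost_usd / len(latencies_ms),
    }


# Hypothetical pilot samples: the same 10 requests sent to both models.
llm = summarize([900, 950, 1000, 1100, 1200, 1250, 1300, 1500, 1800, 2600],
                [True] * 8 + [False] * 2, total_cost_usd=0.30)
slm = summarize([180, 190, 200, 210, 220, 230, 240, 260, 300, 450],
                [True] * 8 + [False] * 2, total_cost_usd=0.02)
for name, stats in (("LLM", llm), ("SLM", slm)):
    print(name, stats)
```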

The third step addresses fine-tuning and customization. If the pilot SLM was general-purpose, fine-tune it on your domain-specific data. This typically improves accuracy while further reducing costs compared to the generic model.

The final step involves gradual migration of production workloads. As organizations gain confidence in SLM performance through expanding pilots, they incrementally shift production usage from LLMs to SLMs. This minimizes risk while capturing cost and performance benefits.

Technical Considerations in SLM Deployment

Successfully deploying SLMs requires attention to several technical factors. Model selection involves understanding benchmark performance on tasks relevant to your organization. While MMLU and other standard benchmarks provide useful comparisons, domain-specific benchmarks matter more. A retail company should evaluate SLMs on retail-specific tasks; a healthcare organization should prioritize medical domain performance.
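A domain benchmark can start as a few hundred labeled prompts from your own workflows. The sketch below treats each candidate model as a plain Python callable (in practice it would wrap an inference server or API client, not shown) and scores exact-match accuracy. The retail examples and the two stub candidates are hypothetical placeholders, not measured results.

```python
from typing import Callable

Model = Callable[[str], str]  # prompt in, answer out


def domain_accuracy(model: Model, examples: list[tuple[str, str]]) -> float:
    """Case-insensitive exact-match accuracy on (prompt, expected_answer) pairs."""
    hits = sum(model(prompt).strip().lower() == expected.strip().lower()
               for prompt, expected in examples)
    return hits / len(examples)


def rank_models(models: dict[str, Model], examples: list[tuple[str, str]]) -> list[tuple[str, float]]:
    """Score every candidate on the same examples, best first."""
    scores = {name: domain_accuracy(model, examples) for name, model in models.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical retail examples and stub "models" standing in for real endpoints.
retail_examples = [
    ("Category for 'wool parka'?", "outerwear"),
    ("Category for 'trail runner'?", "footwear"),
    ("Category for 'rain shell'?", "outerwear"),
]
stub_a = {"Category for 'wool parka'?": "Outerwear",
          "Category for 'trail runner'?": "footwear",
          "Category for 'rain shell'?": "outerwear"}
stub_b = {"Category for 'wool parka'?": "coats",
          "Category for 'trail runner'?": "footwear",
          "Category for 'rain shell'?": "jackets"}
candidates = {"candidate_a": stub_a.__getitem__, "candidate_b": stub_b.__getitem__}
print(rank_models(candidates, retail_examples))
```

Exact match suits short categorical answers. Free-text outputs usually need a rubric or task-specific scoring instead.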

Training infrastructure requirements shrink with SLMs. Fine-tuning a 175-billion-parameter LLM can require a multi-GPU cluster of A100s and extensive infrastructure, whereas a 7-billion-parameter SLM can often be fine-tuned on a single GPU using parameter-efficient methods such as LoRA or QLoRA. This expands access to model customization to smaller organizations and individual teams.
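A rough memory estimate shows why single-GPU fine-tuning of a 7B model usually relies on parameter-efficient methods. The sketch uses the common rule of thumb of about 16 bytes per parameter for full fine-tuning with mixed-precision Adam (weights, gradients, fp32 master weights, and two optimizer moments, with activations excluded). It also counts LoRA's trainable parameters for an assumed set of adapted matrices: two 4096x4096 attention projections in each of 32 layers, at rank 8.

```python
def full_finetune_memory_gb(params_billion: float, bytes_per_param: float = 16.0) -> float:
    """Approximate memory for full fine-tuning with mixed-precision Adam.

    16 bytes/param = fp16 weights (2) + fp16 gradients (2) + fp32 master
    weights (4) + two fp32 Adam moments (4 + 4). Activations are not included.
    """
    return params_billion * bytes_per_param


def lora_trainable_params(d_in: int, d_out: int, rank: int, matrices: int) -> int:
    """LoRA adds A (rank x d_in) and B (d_out x rank) to each adapted weight matrix."""
    return matrices * rank * (d_in + d_out)


print(f"7B full fine-tune:   ~{full_finetune_memory_gb(7):,.0f} GB")
print(f"175B full fine-tune: ~{full_finetune_memory_gb(175):,.0f} GB")

# Assumed shapes: two 4096x4096 attention projections adapted in each of 32 layers.
lora = lora_trainable_params(4096, 4096, rank=8, matrices=2 * 32)
print(f"LoRA trainable params: {lora:,} ({lora / 7e9:.3%} of a 7B model)")
```

Full fine-tuning of a 7B model needs about 112 GB before activations, more than a single 80 GB A100, while LoRA trains only about 4.2 million parameters and keeps the base weights frozen (about 14 GB in fp16, or about 3.5 GB at 4 bits as in QLoRA).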

Inference infrastructure depends on deployment approach. Cloud deployment requires GPU resources but scales well with demand. On-premises deployment requires purchasing hardware sized for expected usage. On-device deployment requires ensuring the SLM fits within the device's memory constraints: modern smartphones can run a quantized Phi-4-mini with its 3.8 billion parameters, while IoT devices might require even smaller models.
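Checking whether a model fits on a device starts with its weight footprint at a given precision. A minimal sketch: the bytes-per-parameter values are the standard sizes for fp16, int8, and int4 weights, while the 8 GB phone and the assumption that only half of device RAM is available to the model are hypothetical. KV cache and runtime overhead are ignored.

```python
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Memory for the weights alone (KV cache and runtime overhead excluded)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, device_ram_gb: float,
         usable_fraction: float = 0.5) -> bool:
    """Assume only `usable_fraction` of device RAM can be given to the model."""
    return weight_footprint_gb(params_billion, precision) <= device_ram_gb * usable_fraction


for precision in BYTES_PER_PARAM:
    gb = weight_footprint_gb(3.8, precision)  # Phi-4-mini's 3.8B parameters
    print(f"3.8B @ {precision}: {gb:.1f} GB -> fits an 8 GB phone: {fits(3.8, precision, 8.0)}")
```

Under these assumptions the fp16 build (7.6 GB) does not fit, while int8 (3.8 GB) and int4 (1.9 GB) builds do, which is why on-device deployments typically ship quantized weights.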

Monitoring and evaluation of SLM performance in production requires attention to domain-specific metrics. Generic metrics like ROUGE or BLEU scores matter less than domain-specific accuracy measures. A financial fraud detection SLM should be evaluated primarily on false positive/negative rates and precision/recall on actual fraud detection. A retail demand forecasting SLM should be evaluated on forecast accuracy against actual sales.
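For a fraud detector these metrics fall straight out of the confusion matrix. The sketch below uses hypothetical counts for 10,000 transactions to show why accuracy alone is misleading on imbalanced data: the model is 99.6% accurate yet still misses one fraud case in five.

```python
def _ratio(numerator: int, denominator: int) -> float:
    """Safe division: return 0.0 when a class is absent from the sample."""
    return numerator / denominator if denominator else 0.0


def fraud_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Binary-classification metrics from confusion-matrix counts (fraud = positive)."""
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "precision": _ratio(tp, tp + fp),            # flagged cases that were really fraud
        "recall": _ratio(tp, tp + fn),               # fraud cases that were caught
        "false_positive_rate": _ratio(fp, fp + tn),  # legitimate transactions wrongly flagged
        "false_negative_rate": _ratio(fn, fn + tp),  # fraud that slipped through
    }


# Hypothetical counts for 10,000 transactions, 100 of them fraudulent.
for name, value in fraud_metrics(tp=80, fp=20, tn=9_880, fn=20).items():
    print(f"{name:>20}: {value:.4f}")
```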

Cost Modeling and ROI Analysis

The financial case for SLMs typically involves several components. Direct inference cost savings are easiest to quantify: SLMs often cost a tenth or less per token compared with frontier LLMs. Organizations can calculate monthly LLM costs under current usage patterns and project SLM costs on identical workloads, revealing savings that frequently exceed 50%.
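The projection on an identical workload is a short calculation. This sketch reuses the illustrative 150-million-token month from the chatbot example and compares the low end of the quoted GPT-4 pricing ($10 per million tokens) with $0.35 per million, the blended rate implied by the DeepSeek figure quoted earlier ($0.70 for 1 million input plus 1 million output tokens). Self-hosted deployments are priced differently (mostly fixed hardware and operating costs), so per-token figures are only an approximation there.

```python
def projected_savings(monthly_tokens_millions: float, baseline_usd_per_m: float,
                      candidate_usd_per_m: float) -> tuple[float, float, float]:
    """Return (baseline_cost, candidate_cost, savings_fraction) for the same monthly workload."""
    baseline_cost = monthly_tokens_millions * baseline_usd_per_m
    candidate_cost = monthly_tokens_millions * candidate_usd_per_m
    return baseline_cost, candidate_cost, 1 - candidate_cost / baseline_cost


llm_cost, cheap_cost, saved = projected_savings(150, baseline_usd_per_m=10.0, candidate_usd_per_m=0.35)
print(f"baseline ${llm_cost:,.2f} vs candidate ${cheap_cost:,.2f} per month ({saved:.1%} saved)")
```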

Infrastructure cost savings matter particularly for large-scale deployments. Organizations running thousands of inference requests daily can reduce infrastructure costs by deploying on-premises or edge SLMs rather than paying cloud API costs. A single GPU server might handle the inference load that previously required cloud API expenditures of $10,000-100,000 monthly, with payback periods of weeks or months.
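Payback is the hardware outlay divided by the monthly savings it unlocks. The server price and monthly operating cost below are hypothetical placeholders. The two API-spend values are the ends of the $10,000-100,000 range quoted above.

```python
def payback_months(server_capex_usd: float, server_opex_usd_per_month: float,
                   api_spend_usd_per_month: float) -> float:
    """Months until a self-hosted SLM server recovers its purchase price."""
    monthly_savings = api_spend_usd_per_month - server_opex_usd_per_month
    if monthly_savings <= 0:
        return float("inf")  # self-hosting never breaks even at this usage level
    return server_capex_usd / monthly_savings


CAPEX = 40_000  # hypothetical GPU server price
OPEX = 2_000    # hypothetical power, hosting, and maintenance per month

for api_spend in (10_000, 100_000):
    months = payback_months(CAPEX, OPEX, api_spend)
    print(f"API spend ${api_spend:,}/month -> payback in {months:.1f} months (~{months * 30:.0f} days)")
```

Under these assumptions payback ranges from five months down to under two weeks, in line with the "weeks or months" above. The guard clause matters for low-volume workloads, where operating costs can exceed the API bill.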

Latency improvements deliver business value in specific scenarios. Reducing response latency by up to 5x (the upper end of the SLM speedups Microsoft reports) improves user experience and enables real-time applications that were previously impossible with cloud-dependent models. Financial companies quantify faster fraud detection in terms of prevented losses; e-commerce platforms quantify faster checkout in terms of conversion-rate improvements.

Accuracy improvements on domain-specific tasks directly impact business outcomes. A more accurate demand forecasting model reduces inventory waste. A more accurate customer churn prediction model enables more effective retention campaigns. These business impacts often exceed the direct cost savings, making the financial case for SLMs compelling.

The Competitive Landscape: Which SLMs Matter in 2025

Several SLM families have established themselves as industry leaders in 2025. Mistral 7B remains one of the most respected open-weight SLMs, delivering strong performance across diverse benchmarks. Its combination of Grouped-Query Attention and Sliding Window Attention enables efficient inference while maintaining accuracy.
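The inference benefit of grouped-query attention shows up mainly in the KV cache, which stores keys and values for every layer, KV head, and token. The sketch below uses Mistral 7B's published configuration (32 layers, 32 query heads sharing 8 KV heads, head dimension 128) with fp16 values, and compares it with a hypothetical variant giving every query head its own KV head. The last line applies the 4,096-token rolling buffer that sliding-window attention permits.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes for cached keys and values (hence the factor 2) across all layers."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


GIB = 2 ** 30
mha = kv_cache_bytes(layers=32, kv_heads=32, head_dim=128, seq_len=8_192)  # one KV head per query head
gqa = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=8_192)   # 4 query heads share each KV head
swa = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=4_096)   # cache capped at the window

print(f"8K tokens, multi-head:    {mha / GIB:.2f} GiB")
print(f"8K tokens, grouped-query: {gqa / GIB:.2f} GiB")
print(f"with 4K rolling buffer:   {swa / GIB:.2f} GiB")
```

Grouping cuts the per-sequence cache by 4x (4 GiB to 1 GiB), and the rolling buffer halves it again, which lets a server hold more concurrent sequences in the same GPU memory.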

Microsoft's Phi series, particularly Phi-4 and Phi-4-mini, focuses on STEM reasoning excellence. Phi-4 achieves mathematics performance comparable to much larger models, making it particularly valuable for technical applications. Phi-4-mini prioritizes extreme efficiency while maintaining reasonable performance, enabling mobile and IoT deployment.

Google's Gemma series offers competitive open-weight models that are also optimized for Google Cloud infrastructure. Meta's Llama 3.1 8B provides a strong all-purpose SLM with good performance across diverse tasks and strong community support; the larger 70B and 405B variants in the same family fall firmly into LLM territory.

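DeepSeek's models bring particularly aggressive cost optimization through architectural innovations such as Multi-head Latent Attention and a Mixture-of-Experts design that activates only a fraction of the network for each token (about 37 billion of DeepSeek-V3's 671 billion parameters), achieving very low inference costs while maintaining competitive performance.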
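The core idea of a mixture-of-experts layer is that a gate scores all experts but only the top-k are actually run for each token, so compute per token tracks the active parameters rather than the total. The toy below works on a scalar input with hand-set gate logits. In a real model the logits come from a learned projection of the token's hidden state, and DeepSeek's own MoE design adds refinements (such as shared experts) that this sketch does not attempt to reproduce.

```python
import math
from typing import Callable


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, gate_logits: list[float],
                experts: list[Callable[[float], float]], top_k: int = 2) -> float:
    """Toy MoE layer: run only the top_k highest-scoring experts and mix their outputs."""
    chosen = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:top_k]
    weights = softmax([gate_logits[i] for i in chosen])  # renormalize over chosen experts
    return sum(w * experts[i](x) for w, i in zip(weights, chosen))


# Eight hypothetical experts; expert k simply scales its input by k.
experts = [lambda x, k=k: k * x for k in range(8)]
gate_logits = [0.1, 0.3, 0.0, 0.2, 0.0, 0.1, 2.0, 2.0]
print(moe_forward(2.0, gate_logits, experts))  # only experts 6 and 7 are evaluated
```

With 8 experts and top_k=2, each token pays for a quarter of the expert compute while the layer keeps all eight experts' capacity; DeepSeek-V3 applies the same principle at far larger scale.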

For most organizations, the optimal choice among these depends on specific requirements. If mathematical reasoning matters, Phi-4 is attractive. If cost optimization is paramount, DeepSeek models are compelling. If community support and ecosystem maturity matter, Mistral 7B has advantages. For most well-defined production tasks, any of these models can deliver better business outcomes than a generic LLM.

The Future: SLMs as Default Architecture

Looking forward to late 2025 and beyond, several trends become apparent. First, organizations will increasingly view SLM deployment as the default approach for production workloads, reserving cloud-based LLMs for research and exploration. This represents a fundamental shift from the current paradigm where LLMs dominate production deployments.

Second, specialized SLMs will proliferate for specific domains. Rather than generic SLMs, organizations will deploy healthcare-specific SLMs trained on medical literature and clinical records, retail-specific SLMs trained on sales and inventory data, manufacturing-specific SLMs trained on industrial sensor data, and similar domain-specialized variants. This specialization further improves accuracy while maintaining efficiency advantages.

Third, on-device AI will become the norm for consumer applications. Rather than cloud-dependent AI assistants, consumers will increasingly use offline-capable AI on their devices, powered by efficient SLMs. This shift improves privacy, reduces latency, and eliminates cloud dependency—benefits that consumers increasingly value.

Fourth, the cost-performance frontier will continue shifting toward smaller, more efficient models. As organizations recognize that smaller models deliver better business outcomes in most scenarios, investment in even more efficient models will accelerate. We should expect SLMs with 1-2 billion parameters delivering performance approaching current 7-10 billion-parameter models.

The narrative that "bigger is better" in AI has given way to a more nuanced understanding: right-sized models that align with specific business requirements deliver superior outcomes on the dimensions that matter most—cost, speed, accuracy, and privacy. Organizations that embrace small language models in 2025 will gain significant competitive advantages through more efficient operations, faster deployment, greater control over AI systems, and ultimately superior business outcomes.

Related Reading:

- Efficient AI Models vs. Mega Models: Why Smaller May Be Better in 2025

- On-Device AI in 2025: NPUs Bring Private, Instant Intelligence to Your Phone

- Machine Learning in 2025: Zero-Shot, Few-Shot, and the Quest for Data Efficiency

- The Rise of Small Models: Why Lightweight AI Is Overtaking Giants in Real-World Use

- AI in Business & Startups: Practical Implementation Guide

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

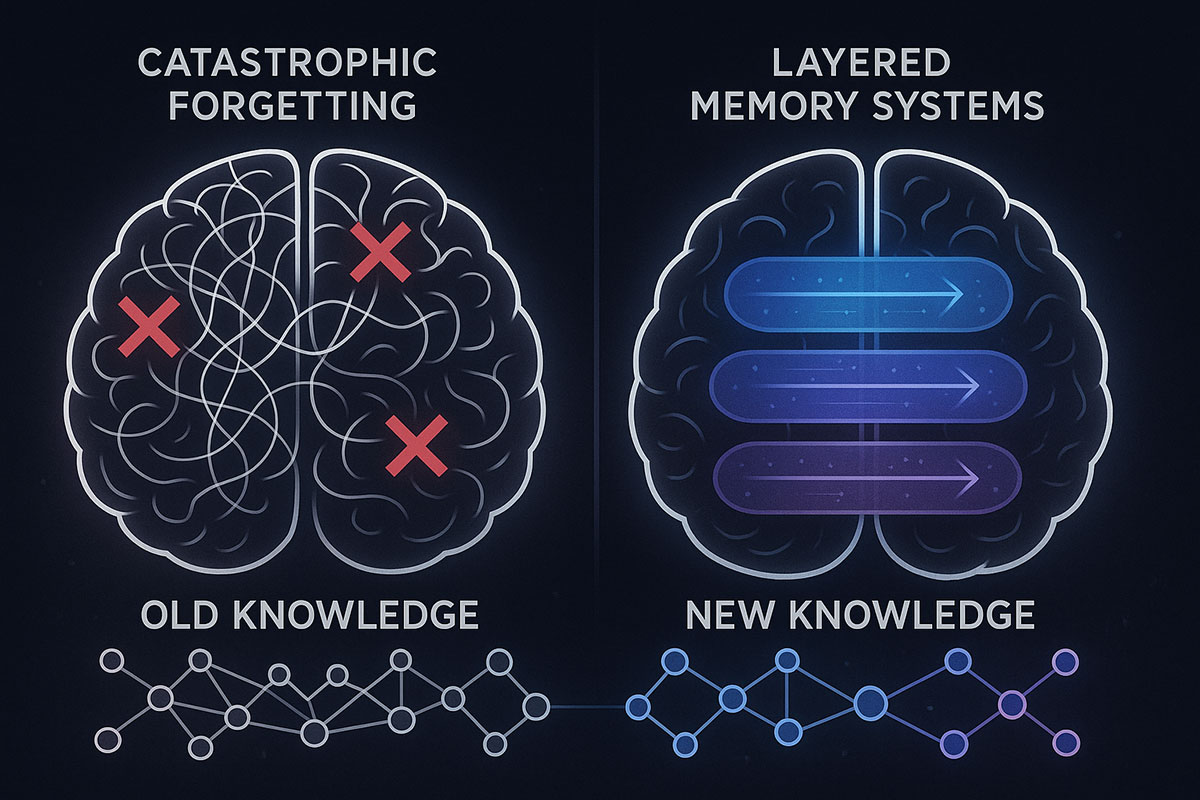

Google's HOPE Model: AI That Finally Learns Continuously (Catastrophic Forgetting Solved)

Google just unveiled HOPE, a self-modifying AI architecture that solves catastrophic forgetting—the fundamental problem preventing AI from learning continuously. For the first time, AI can absorb new knowledge without erasing what it already knows. Here's why this changes everything.

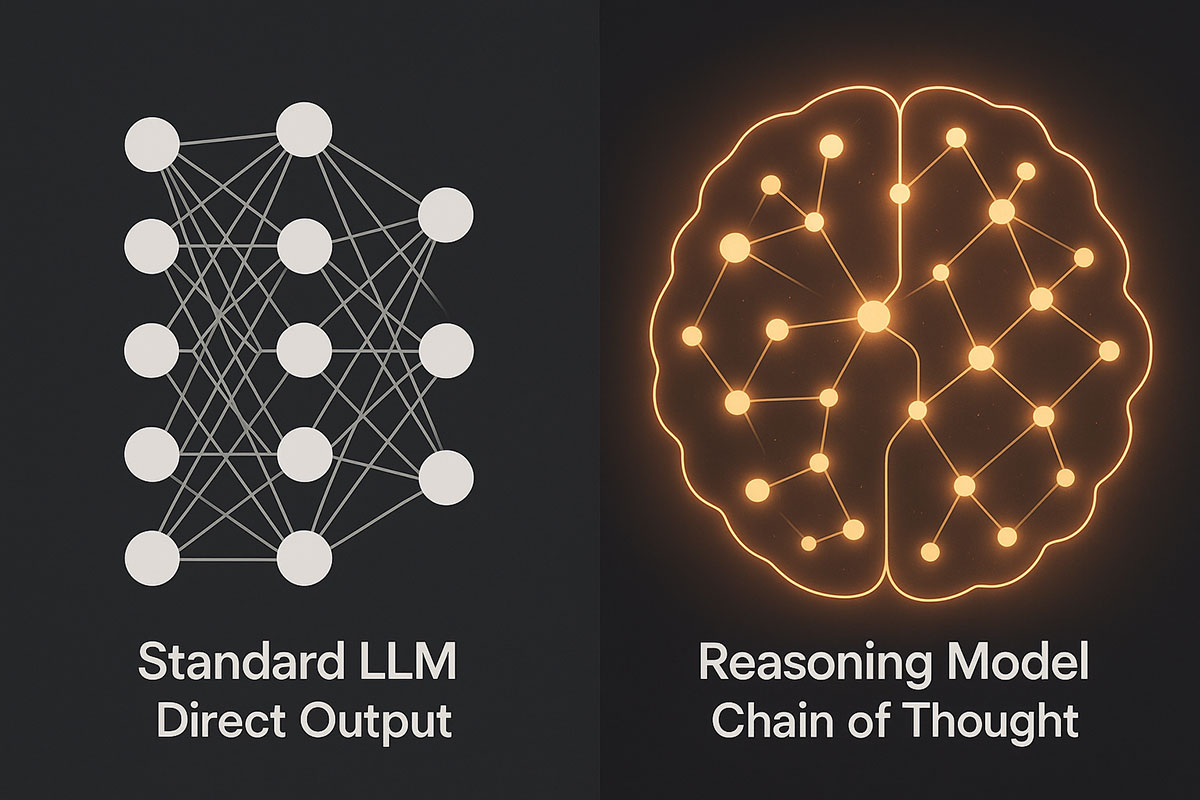

AI Reasoning Models Explained: OpenAI O1 vs DeepSeek V3.2 - The Next Leap Beyond Standard LLMs (November 2025)

Reasoning models represent a fundamental shift in AI architecture. Unlike standard language models that generate answers instantly, these systems deliberately "think" through problems step-by-step, achieving breakthrough performance in mathematics, coding, and scientific reasoning. Discover how O1 and DeepSeek V3.2 are redefining what AI can accomplish.

A Step-by-Step Guide for Developers: Build Your First Generative AI Model in 2025

With fresh guides and increasing interest in custom generative AI, now is the perfect time for developers to gain hands-on experience building their own models from scratch.