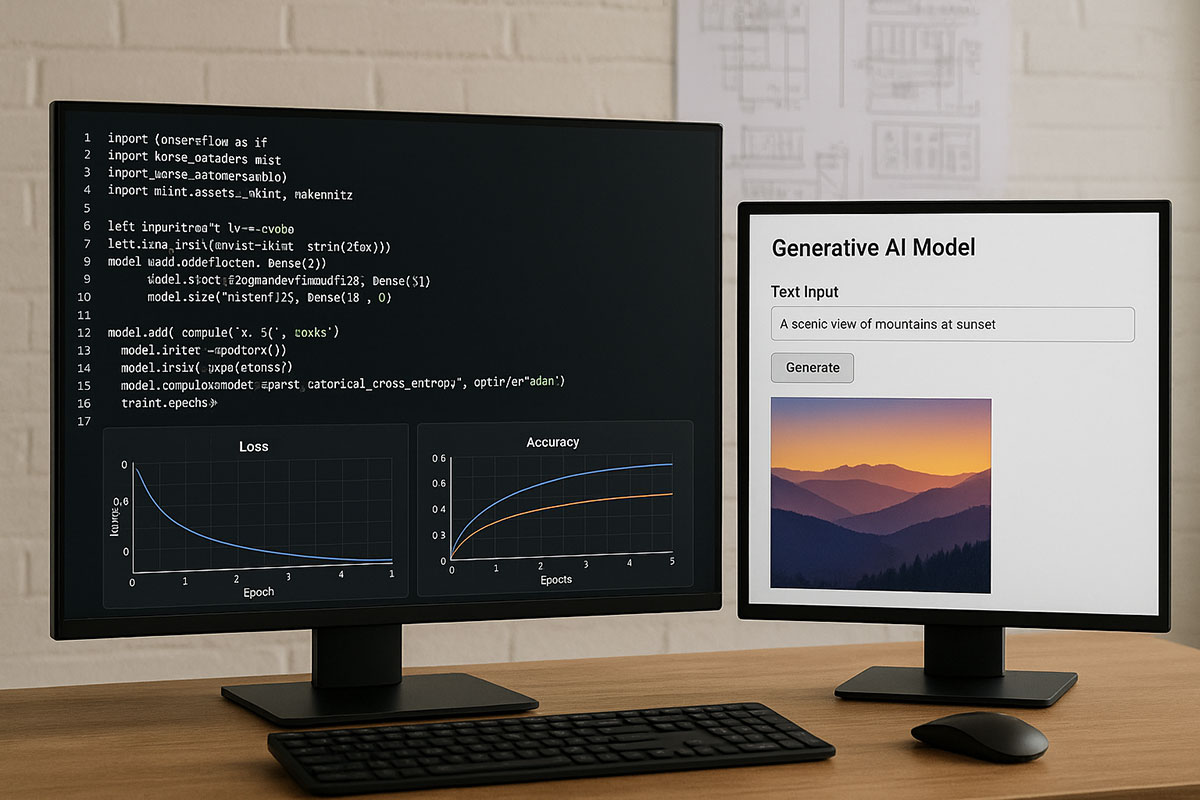

A Step-by-Step Guide for Developers: Build Your First Generative AI Model in 2025

With fresh guides and increasing interest in custom generative AI, now is the perfect time for developers to gain hands-on experience building their own models from scratch.

TrendFlash

Introduction: The Democratization of Generative AI Development

In 2025, building custom generative AI models has transitioned from an exclusive domain of research scientists to an accessible skill for software developers. With the maturation of open-source frameworks, availability of pre-trained models, and cloud resources, developers now have unprecedented opportunity to create specialized generative AI solutions tailored to specific domains and use cases. The growing interest in custom generative AI models is driven by the recognition that while general-purpose models like GPT-4 and Gemini are powerful, they often fall short for specialized applications requiring domain expertise, specific data contexts, or particular operational constraints.

This comprehensive guide provides a practical, step-by-step approach to building your first generative AI model. We'll move beyond theoretical concepts to hands-on implementation, covering everything from data preparation and model architecture selection to training, fine-tuning, and deployment. Whether you're looking to create a domain-specific language model, a custom image generator, or a specialized code assistant, this guide will equip you with the fundamental knowledge and practical strategies needed to succeed in the rapidly evolving landscape of generative AI development.

Understanding the Generative AI Development Landscape in 2025

Before diving into implementation, it's crucial to understand the current state of generative AI development tools and methodologies. The field has matured significantly, with several key trends shaping how developers approach building custom models.

The Shift Towards Efficient Model Architectures

While transformer architectures continue to dominate, 2025 has seen the rise of more efficient alternatives like Mamba and other state-space models that offer comparable performance with significantly better computational efficiency. For many custom applications, these emerging architectures provide a better balance of performance and resource requirements than traditional transformers, making them ideal for developers working with limited computational budgets.

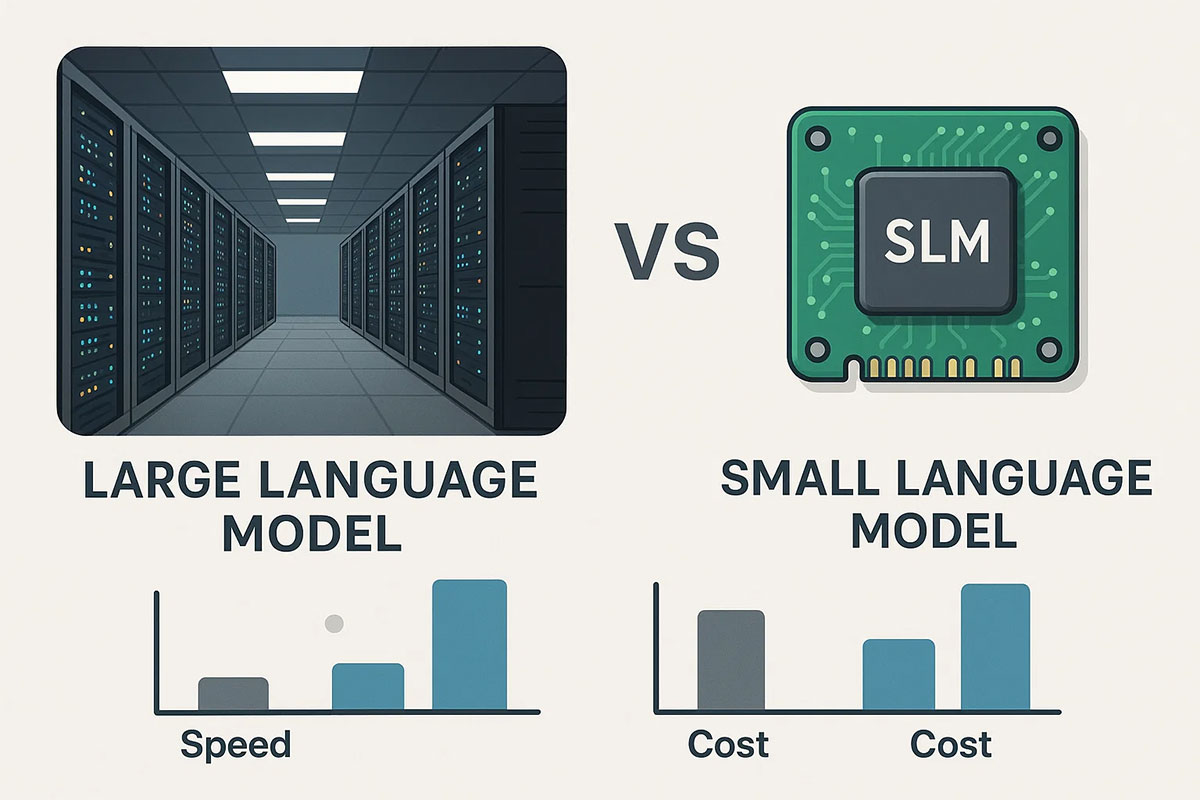

Specialized vs. General Models

The trend toward specialized, smaller models continues to gain momentum. Rather than building massive general-purpose models from scratch, developers are increasingly focusing on creating compact, domain-specific models that outperform larger general models within their narrow area of expertise. This approach aligns with the broader industry recognition that "smaller is smarter" for many enterprise AI applications.

Phase 1: Project Definition and Scope

Successful generative AI projects begin with careful planning and realistic scope definition. Attempting to build too ambitious a model as your first project is a common recipe for frustration and failure.

Selecting Your First Project

Choose a project that aligns with both your interests and practical constraints. Good first projects have clearly defined boundaries, available training data, and manageable computational requirements. Some ideal starter projects include:

- Domain-specific text generator: Fine-tune a small language model on technical documentation, product descriptions, or style-specific writing.

- Specialized image generator: Create a model that generates images in a specific style or domain using transfer learning.

- Code assistant for a niche language/framework: Build a specialized coding assistant tailored to a specific programming ecosystem.

- Structured data generator: Create synthetic datasets that mimic real-world data patterns for testing or training purposes.

Defining Success Metrics

Establish clear, measurable criteria for success before beginning development. These might include output quality assessments, inference speed requirements, resource utilization limits, or specific capability benchmarks. Well-defined metrics guide your development process and provide objective measures of progress.

Phase 2: Data Preparation and Strategy

Data quality and preparation fundamentally determine the success of your generative AI model. The adage "garbage in, garbage out" is particularly relevant in generative AI development.

Data Collection and Sourcing

Identify and collect relevant training data from appropriate sources. For many specialized applications, publicly available datasets provide a solid foundation that can be supplemented with domain-specific data. In 2025, the availability of high-quality curated datasets has significantly improved, with platforms like Hugging Face hosting thousands of specialized datasets across domains.

When collecting custom data, prioritize quality over quantity. A few thousand well-curated examples typically yield better results than millions of noisy, inconsistent samples. Implement rigorous data collection protocols that maintain consistency and accuracy throughout your dataset.

Data Preprocessing and Cleaning

Transform raw data into a format suitable for model training through systematic preprocessing:

- Normalization: Standardize formats, encodings, and structures across your dataset.

- Deduplication: Remove duplicate entries that can bias model training.

- Quality filtering: Implement automated and manual checks to exclude low-quality samples.

- Annotation: Add appropriate labels, tags, or metadata that will guide the learning process.

- Tokenization: Convert raw text into model-compatible tokens using appropriate tokenizers.

Data Partitioning Strategy

Split your processed data into distinct sets for training, validation, and testing. A typical partitioning ratio might be 70% for training, 15% for validation, and 15% for testing. The validation set guides hyperparameter tuning and training decisions, while the test set provides a final, unbiased evaluation of model performance.

Phase 3: Model Architecture Selection

Choosing the right architecture involves balancing performance requirements, available resources, and project specificity. While transformers remain a versatile choice, several architecture options deserve consideration for different use cases.

Architecture Options for Generative AI

| Architecture | Best For | Resource Requirements | Implementation Complexity |

|---|---|---|---|

| Transformer-Based | Text generation, code generation, sequential data | High | Medium |

| Diffusion Models | Image generation, audio synthesis | High | High |

| Generative Adversarial Networks (GANs) | Image generation, data augmentation | Medium | High |

| Variational Autoencoders (VAEs) | Controlled generation, anomaly detection | Low | Low-Medium |

| State-Space Models (Mamba) | Long-sequence modeling, efficient inference | Medium | Medium |

Base Model Selection Strategy

For most developers, starting with a pre-trained base model and fine-tuning provides the most practical approach. The selection of an appropriate base model significantly influences your project's success. Consider factors such as:

- Domain alignment: How closely the base model's training data matches your target domain

- Architecture compatibility: Whether the model's architecture supports your required modifications

- Licensing: Whether the model's license permits your intended use case

- Resource requirements: Whether the model can be fine-tuned and deployed within your computational constraints

In 2025, the ecosystem of available base models has expanded dramatically, with options ranging from massive thousand-parameter models to efficient compact models optimized for specific tasks.

Phase 4: Model Training and Fine-Tuning

The training phase transforms your prepared data and selected architecture into a functional generative AI model. This phase requires careful configuration, monitoring, and adjustment.

Training Configuration

Establish appropriate training hyperparameters based on your model architecture, dataset size, and computational resources. Key considerations include:

- Learning rate: Typically start with small values (1e-5 to 1e-4) for fine-tuning

- Batch size: Balance memory constraints with training stability

- Training steps/epochs: Determine based on dataset size and convergence monitoring

- Optimizer selection: AdamW remains popular, with new optimizers emerging for specific architectures

Fine-Tuning Techniques

Several fine-tuning approaches have become standard practice in 2025:

- Full fine-tuning: Update all model parameters (computationally expensive but comprehensive)

- Parameter-Efficient Fine-Tuning (PEFT): Techniques like LoRA (Low-Rank Adaptation) that update only a small subset of parameters

- Adapter-based fine-tuning: Insert small trainable modules between model layers

- Prompt tuning: Learn optimal prompt representations while keeping the base model frozen

For most developers, PEFT methods offer an excellent balance of performance and efficiency, often achieving 90-95% of full fine-tuning performance with only 1-5% of the parameter updates.

Training Monitoring and Intervention

Implement comprehensive monitoring throughout the training process. Track loss curves, evaluation metrics, and resource utilization. Set up automated checkpoints to preserve training progress and enable rollback if needed. Watch for signs of overfitting, such as diverging train/validation metrics, and be prepared to adjust your approach accordingly.

Phase 5: Evaluation and Validation

Rigorous evaluation ensures your model meets quality standards and functions appropriately before deployment. Generative AI models require multifaceted evaluation approaches.

Automated Metrics

Implement standard automated metrics relevant to your model type:

- Text models: Perplexity, BLEU score, ROUGE metrics

- Image models: FID (Fréchet Inception Distance), IS (Inception Score), CLIP score

- Code models: Compilation rate, functional correctness, code quality metrics

While automated metrics provide valuable quantitative insights, they rarely capture the full picture of model quality and should be supplemented with human evaluation.

Human Evaluation

Design structured human evaluation processes to assess output quality, relevance, coherence, and usefulness. Create evaluation rubrics that specify criteria and rating scales, then have domain experts assess model outputs across a representative sample of use cases. Human evaluation remains the gold standard for many aspects of generative AI quality.

Bias and Safety Testing

Implement comprehensive testing to identify potential biases, safety issues, or harmful outputs. Use structured prompts designed to surface problematic behaviors and establish protocols for addressing identified issues. In 2025, tools for automated bias and safety testing have matured significantly, making this crucial step more accessible to individual developers.

Phase 6: Deployment and Integration

Successfully deploying a generative AI model requires careful consideration of infrastructure, scalability, and integration patterns.

Deployment Options

Select a deployment approach that aligns with your use case requirements and resources:

- Cloud API deployment: Host your model as an API endpoint using services like Google Cloud, AWS, or Azure

- Edge deployment: Package your model for local execution on end-user devices

- Hybrid approaches: Combine cloud and edge components to balance latency, cost, and functionality

Performance Optimization

Implement optimizations to enhance inference speed and reduce resource requirements:

- Quantization: Reduce numerical precision of model weights (e.g., from 32-bit to 8-bit)

- Model pruning: Remove less important weights or components

- Compiler optimizations: Use specialized compilers like NVIDIA TensorRT or OpenAI Triton

- Caching strategies: Implement response caching for frequently generated content

Integration Patterns

Design clean integration interfaces that separate your model's functionality from application logic. Common patterns include:

- API-based integration: Expose model functionality through well-defined REST or GraphQL APIs

- Library integration: Package your model as a software library for direct incorporation into applications

- Agent integration: Incorporate your model into larger AI agent systems using frameworks like Microsoft's AI agent platform

Phase 7: Maintenance and Iteration

Generative AI models require ongoing maintenance and refinement to maintain performance and adapt to changing requirements.

Monitoring and Feedback Loops

Implement comprehensive monitoring of your deployed model's performance, usage patterns, and output quality. Establish feedback mechanisms that capture user interactions and model outputs for continuous improvement. In 2025, specialized MLOps platforms have made these capabilities more accessible to individual developers and small teams.

Continuous Improvement Cycle

Establish a regular cycle for model updates and improvements:

- Collect and process new training data from user interactions

- Retrain or fine-tune the model with updated datasets

- Evaluate updated model performance against previous versions

- Deploy improved models using canary or blue-green deployment strategies

Essential Tools and Frameworks for 2025

The generative AI development ecosystem has matured significantly, with several essential tools streamlining the development process:

Core Development Frameworks

- PyTorch and TensorFlow: Remain the foundational frameworks for model development

- Hugging Face Transformers: Provides thousands of pre-trained models and utilities

- Diffusers: Specialized library for diffusion-based generative models

- Parameter-Efficient Fine-Tuning libraries: Implementations of LoRA and related techniques

Training and Deployment Platforms

- Google Cloud AI Platform: Offers comprehensive tools for model development and deployment

- AWS SageMaker: Provides end-to-end machine learning workflow management

- Azure Machine Learning: Microsoft's platform for building, training, and deploying models

- Hugging Face Inference Endpoints: Simplified deployment of transformer-based models

Common Pitfalls and How to Avoid Them

Learning from others' mistakes can accelerate your generative AI development journey:

- Underestimating data requirements: Start with data collection and preparation, not model architecture

- Neglecting evaluation rigor: Implement comprehensive evaluation before deployment

- Overlooking resource constraints: Consider inference costs and latency during design

- Ignoring model safety: Implement safety measures from the beginning, not as an afterthought

- Failing to plan for maintenance: Design for ongoing improvement from the start

Related Reading

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

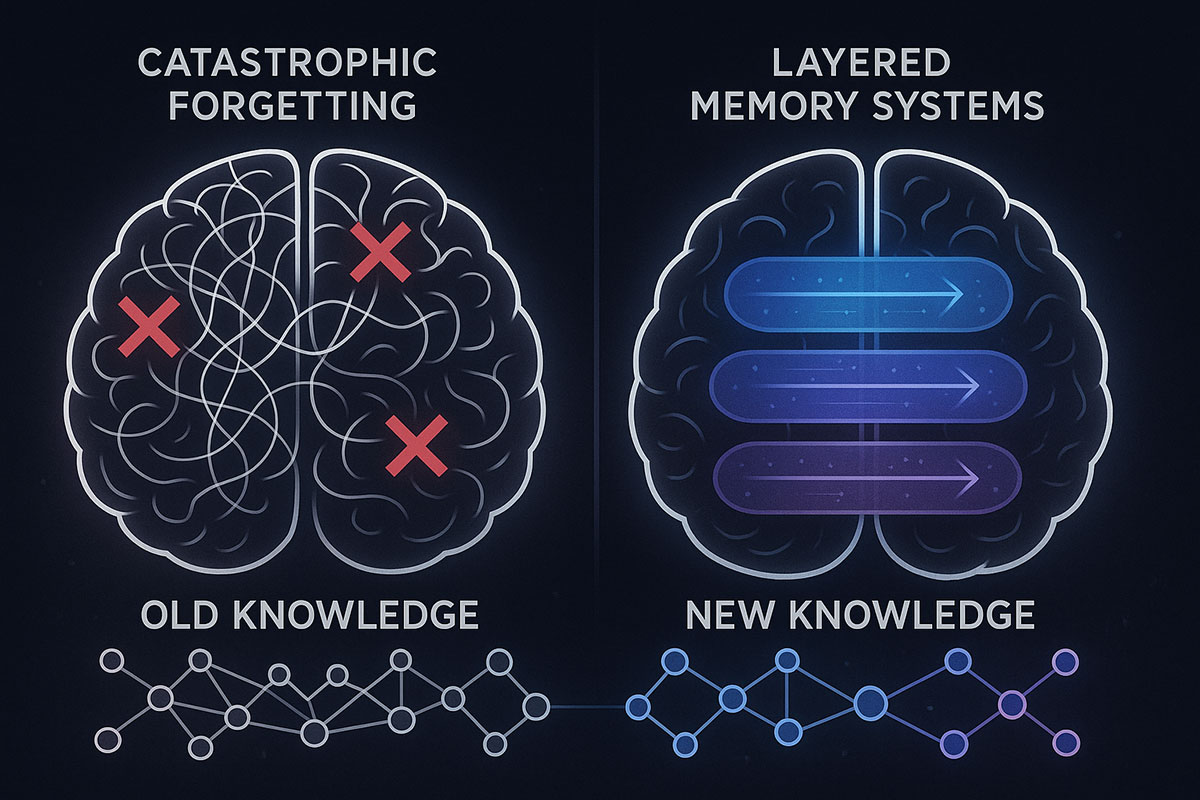

Google's HOPE Model: AI That Finally Learns Continuously (Catastrophic Forgetting Solved)

Google just unveiled HOPE, a self-modifying AI architecture that solves catastrophic forgetting—the fundamental problem preventing AI from learning continuously. For the first time, AI can absorb new knowledge without erasing what it already knows. Here's why this changes everything.

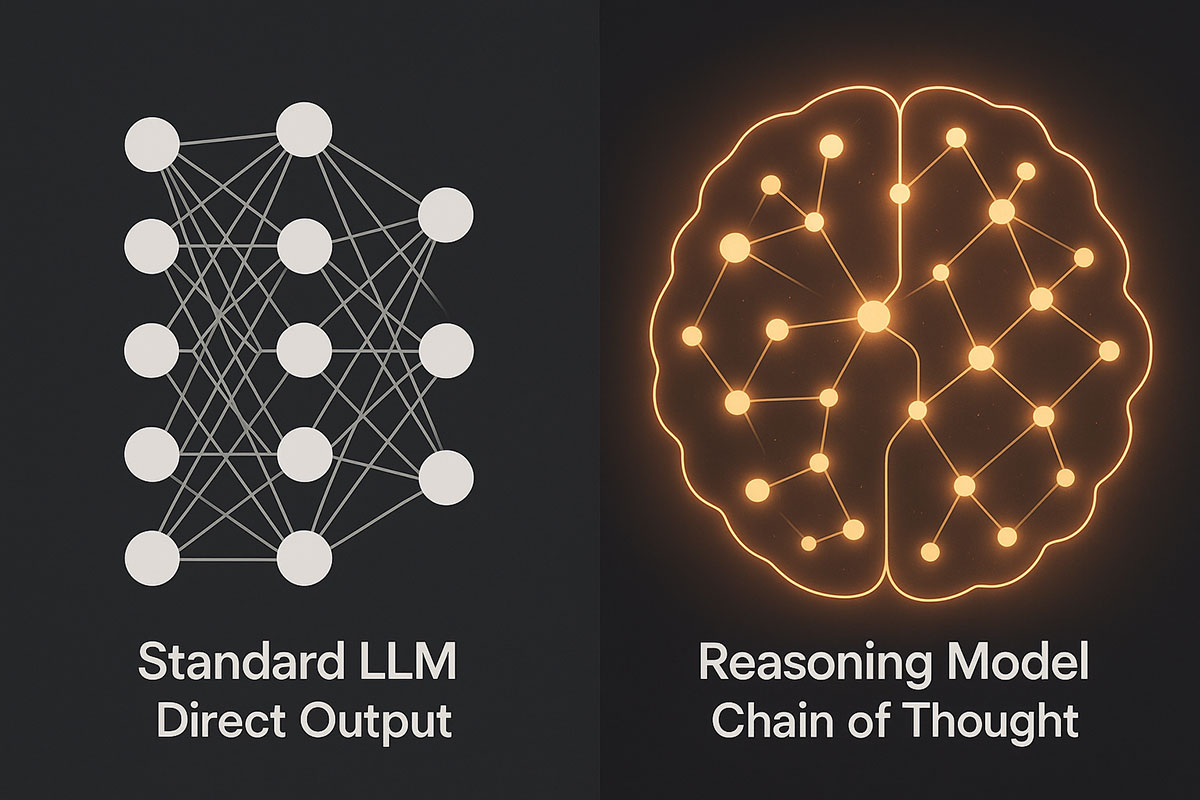

AI Reasoning Models Explained: OpenAI O1 vs DeepSeek V3.2 - The Next Leap Beyond Standard LLMs (November 2025)

Reasoning models represent a fundamental shift in AI architecture. Unlike standard language models that generate answers instantly, these systems deliberately "think" through problems step-by-step, achieving breakthrough performance in mathematics, coding, and scientific reasoning. Discover how O1 and DeepSeek V3.2 are redefining what AI can accomplish.

Why Smaller AI Models (SLMs) Will Dominate Over Large Language Models in 2025: The On-Device AI Revolution

The AI landscape is shifting from "bigger is better" to "right-sized is smarter." Small Language Models (SLMs) are delivering superior business outcomes compared to massive LLMs through dramatic cost reductions, faster inference, on-device privacy, and domain-specific accuracy. This 2025 guide explores why SLMs represent the future of enterprise AI.