SLMs vs LLMs in 2025: Why 'Smaller Is Smarter' for Enterprise AI

Discover why small language models are outperforming massive LLMs for enterprise applications. Get the complete comparison on cost, privacy, latency, and real deployment patterns that make SLMs the practical choice.

TrendFlash

Introduction: The Enterprise AI Shift from Big to Smart

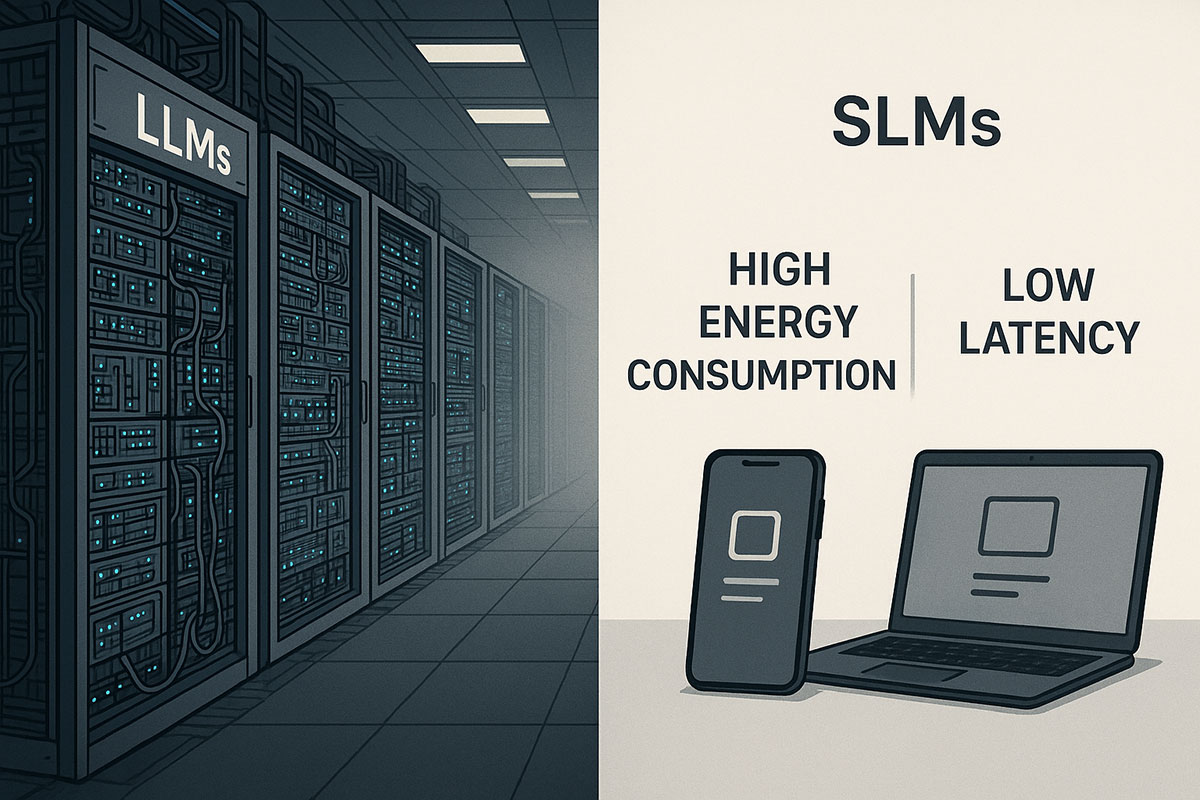

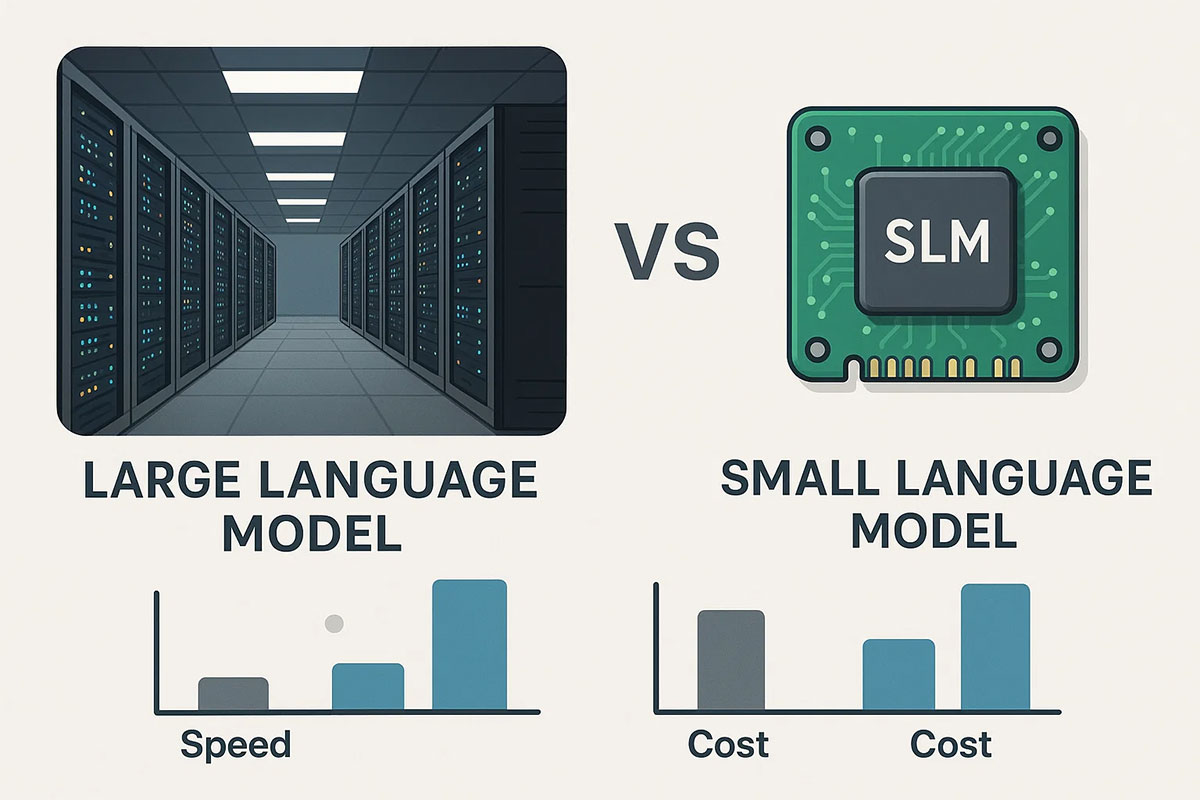

Throughout 2023-2024, the AI world was dominated by large language models (LLMs)—massive neural networks with hundreds of billions of parameters that demonstrated remarkable capabilities but came with equally massive costs, latency, and infrastructure requirements. But in 2025, a fundamental shift is underway. Enterprises are increasingly turning to small language models (SLMs)—compact, efficient AI models that deliver comparable performance for specific tasks at a fraction of the cost and complexity.

The narrative is changing from "bigger is better" to "smarter is better." While LLMs like GPT-4 and Claude 3 continue to push the boundaries of general intelligence, SLMs are proving to be the practical backbone for real-world enterprise applications. From Google's expanding on-device Gemini Nano to Microsoft's Phi-3 models running locally on smartphones, the industry is embracing efficiency without sacrificing capability.

Defining the Battle: SLMs vs LLMs

Understanding the fundamental differences between these two approaches is crucial for making informed AI strategy decisions.

Large Language Models (LLMs)

LLMs are characterized by their massive scale—typically trained on internet-scale datasets with parameter counts ranging from tens of billions to over a trillion. Their strength lies in general knowledge and broad capabilities across diverse domains. However, this generality comes at significant cost: high inference latency, substantial computational requirements, and challenges with data privacy when using cloud-based APIs.

Small Language Models (SLMs)

SLMs represent a focused approach to AI—models typically under 10 billion parameters, often specialized for specific domains or tasks. They sacrifice some breadth of knowledge for dramatically improved efficiency, lower costs, and the ability to run on consumer hardware or edge devices. The key insight driving SLM adoption is that most enterprise applications don't need general world knowledge—they need reliable, efficient performance on well-defined tasks.

The 2025 Landscape: Why SLMs Are Gaining Momentum

Multiple converging trends have positioned SLMs as the pragmatic choice for enterprise AI deployment in 2025.

Economic Realities Hit AI Budgets

As AI initiatives move from experimental pilots to production systems, cost control becomes paramount. LLM API costs that seemed manageable for prototypes become prohibitive at scale, because pay-per-token pricing grows linearly with traffic. In one reported case, a financial services company estimated that its customer service AI would cost $4.2 million annually using GPT-4, but only $87,000 using a fine-tuned SLM of comparable quality for its specific use case.
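
The gap in that example comes from the pricing model: API spend scales with every request, while a self-hosted SLM is closer to a fixed infrastructure cost. Here is a minimal back-of-envelope sketch. It assumes hypothetical token prices, traffic and GPU rental rates, and none of these figures come from the case above.

```python
# All prices, volumes, and GPU rates below are hypothetical placeholders.

def annual_api_cost(requests_per_day: int, in_tokens: int, out_tokens: int,
                    usd_per_m_in: float, usd_per_m_out: float) -> float:
    """Pay-per-token API: cost grows linearly with traffic."""
    per_request = (in_tokens * usd_per_m_in + out_tokens * usd_per_m_out) / 1_000_000
    return per_request * requests_per_day * 365


def annual_self_hosted_cost(gpu_hourly_usd: float, num_gpus: int) -> float:
    """Self-hosted SLM: roughly flat cost while traffic stays within capacity."""
    return gpu_hourly_usd * num_gpus * 24 * 365


api = annual_api_cost(requests_per_day=100_000, in_tokens=1_000, out_tokens=300,
                      usd_per_m_in=10.0, usd_per_m_out=30.0)
hosted = annual_self_hosted_cost(gpu_hourly_usd=1.5, num_gpus=2)
print(f"API: ${api:,.0f}/yr, self-hosted SLM: ${hosted:,.0f}/yr")
```

With these placeholder inputs, the API path comes to about $693,500 a year, against about $26,280 for two rented GPUs. The self-hosted figure deliberately omits engineering, fine-tuning and operations costs, so add those before comparing real options.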

The Privacy and Data Sovereignty Imperative

As data protection regulation tightens worldwide (India's Digital Personal Data Protection Act, the EU AI Act, and others), sending sensitive enterprise data to external AI APIs becomes legally and ethically problematic. SLMs are small enough to run on infrastructure the organization controls, so data can stay inside its own environment, which addresses critical compliance requirements.

Latency Requirements for Real-time Applications

Enterprise applications like customer service chatbots, real-time translation, and document processing require sub-second response times. Large hosted LLMs often struggle to deliver consistently low latency. SLMs running close to the user can typically respond within tens to a few hundred milliseconds, which makes them suitable for interactive applications.
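
Latency claims are easy to check on your own hardware before committing to a model. The sketch below times any text-generation callable and reports median and 95th-percentile latency. `generate` is a hypothetical stand-in for whichever local SLM or hosted API client you are testing.

```python
import statistics
import time
from typing import Callable, Dict, Iterable


def measure_latency_ms(generate: Callable[[str], str],
                       prompts: Iterable[str]) -> Dict[str, float]:
    """Time each call to `generate` and summarize latency in milliseconds."""
    samples = []
    for prompt in prompts:
        start = time.perf_counter()
        generate(prompt)  # output is discarded; only wall-clock time matters here
        samples.append((time.perf_counter() - start) * 1000.0)
    if len(samples) < 2:
        raise ValueError("need at least two prompts to compute percentiles")
    # n=20 yields 19 cut points at 5% steps: index 9 is p50, index 18 is p95.
    # method="inclusive" keeps the estimates within the observed min/max.
    cuts = statistics.quantiles(samples, n=20, method="inclusive")
    return {"p50": cuts[9], "p95": cuts[18], "max": max(samples)}


stats = measure_latency_ms(lambda p: p.upper(), ["summarize this ticket"] * 50)
print({k: round(v, 3) for k, v in stats.items()})
```

For interactive applications, tail latency (p95) matters more than the average, because it is what the slowest one in twenty requests experiences. Measure with prompts of realistic length, since generation time grows with the number of output tokens.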

Technical Deep Dive: How SLMs Achieve Efficiency

The performance gains of SLMs aren't magic—they're the result of deliberate architectural choices and training methodologies.

Efficient Transformer Architectures

While early LLMs used standard transformer architectures, SLMs combine architectural optimizations with training and compression techniques that reduce computational cost:

- Sparse Attention Mechanisms: Instead of having every token attend to every other token, each token attends to a restricted subset (for example, a sliding window of nearby tokens), cutting the quadratic cost of full attention

- Mixture of Experts (MoE): Routing each token to a small subset of specialized sub-networks (experts) within the model, increasing total capacity without a proportional increase in per-token compute

- Knowledge Distillation: Training smaller models to mimic the behavior of larger ones, transferring capabilities while reducing size

- Quantization Techniques: Reducing numerical precision from 16- or 32-bit floating point to 8-bit or 4-bit representations, usually with small accuracy loss
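
To make the quantization item concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. Each weight is divided by a single scale factor and rounded to an integer in [-127, 127]. Production toolchains typically use per-channel or per-group scales and optimized kernels, so this only illustrates the arithmetic.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map [-max|w|, max|w|] onto the integers [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate the original floats from the stored integers."""
    return [v * scale for v in q]


w = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(w)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(w, restored))
print(q, scale, max_err)
```

Storing `q` takes one byte per weight instead of four for 32-bit floats, a 4x reduction. Each restored weight is off by at most half the scale factor (here 0.005).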

Quality Training Data over Quantity

SLMs achieve much of their efficiency through carefully curated, high-quality training data rather than raw internet-scale scraping. Microsoft's Phi-3 models, for instance, were trained on heavily filtered web data combined with synthetic, "textbook-quality" educational content. This approach suggests that data quality can partly compensate for raw scale.
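
Curation pipelines for such datasets usually start with cheap heuristic filters before any model-based scoring. The sketch below shows only that first stage: exact deduplication plus length and character-composition checks. The thresholds are illustrative assumptions. This is not the filtering recipe Microsoft used for Phi-3.

```python
import hashlib
from typing import Iterable, List


def filter_corpus(docs: Iterable[str], min_words: int = 20,
                  min_alpha_ratio: float = 0.7) -> List[str]:
    """First-pass quality filter: exact dedup, minimum length, mostly-letter text."""
    seen = set()
    kept = []
    for doc in docs:
        text = " ".join(doc.split())  # normalize whitespace
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        if len(text.split()) < min_words:
            continue  # too short to carry much signal
        letters = sum(ch.isalpha() for ch in text)
        if letters / max(len(text), 1) < min_alpha_ratio:
            continue  # dominated by digits or symbols (tables, markup, logs)
        kept.append(text)
    return kept
```

Later stages typically add near-duplicate detection and model-based quality scoring.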

SLM vs LLM: Comprehensive Comparison Matrix

This decision matrix helps enterprises evaluate which approach suits their specific needs across critical dimensions.

| Evaluation Criteria | Small Language Models (SLMs) | Large Language Models (LLMs) | Winner for Enterprise |
|---|---|---|---|
| Infrastructure Cost | $500-$5,000/month for full deployment | $10,000-$100,000+/month | SLMs (85% cost reduction) |
| Inference Latency | 50-200ms for typical queries | 500ms-5s+ for complex queries | SLMs (3-10x faster) |
| Data Privacy | Full on-premises/edge deployment | Typically cloud API-based | SLMs (Data never leaves premises) |
| Customization | Easy fine-tuning for domain specificity | Limited fine-tuning, expensive | SLMs (Specialized performance) |
| General Knowledge | Limited to training domain | Broad world knowledge | LLMs (For general applications) |
| On-device Deployment | Yes (phones, IoT devices) | No (requires data-center GPUs or cloud infrastructure) | SLMs (Complete edge capability) |
| Energy Consumption | 10-50W during inference | 500-5000W+ during inference | SLMs (90% less energy) |
| Development Speed | Weeks to production deployment | Months for complex integration | SLMs (Faster time-to-value) |
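
The Winner column condenses wide ranges into single figures, so it helps to see what the ranges themselves imply. This short sketch divides the LLM figures by the SLM figures from the table. It pairs like-for-like ends of each range and also computes the most pessimistic pairing (SLM high end against LLM low end).

```python
# (SLM low, SLM high, LLM low, LLM high), copied from the comparison table.
TABLE = {
    "cost_usd_per_month": (500, 5_000, 10_000, 100_000),
    "latency_ms": (50, 200, 500, 5_000),
    "power_watts": (10, 50, 500, 5_000),
}


def advantage_ratios(s_lo, s_hi, l_lo, l_hi):
    """How many times larger the LLM figure is than the SLM figure."""
    low_ends = l_lo / s_lo      # low end of both ranges
    high_ends = l_hi / s_hi     # high end of both ranges
    pessimistic = l_lo / s_hi   # worst case for the SLM
    return low_ends, high_ends, pessimistic


for metric, bounds in TABLE.items():
    print(metric, [f"{r:g}x" for r in advantage_ratios(*bounds)])
```

Compared like-for-like, the table implies roughly a 20x cost gap, 10-25x on latency and 50-100x on power draw. The pessimistic pairing gives 2x, 2.5x and 10x. The Winner column's figures (85% cost reduction, or about 6.7x; 3-10x on latency; 90% less energy, or 10x) sit within those bounds. They are conservative rather than best-case readings.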

Real-World Deployment Patterns for SLMs

Enterprises across industries are finding innovative ways to deploy SLMs that deliver tangible business value.

Pattern 1: Specialized Domain Experts

Instead of using a general-purpose LLM for all tasks, companies deploy multiple specialized SLMs:

- Legal Document SLM: Fine-tuned on legal contracts and regulations for compliance checking

- Customer Service SLM: Trained on product documentation and support tickets for accurate responses

- Technical Documentation SLM: Optimized for generating and maintaining technical manuals

A major Indian IT services company reportedly deployed this pattern, reducing its AI costs by 76% while improving accuracy on domain-specific tasks by 42% compared with its previous LLM approach.
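
Running several specialists requires a routing layer in front of them. The minimal sketch below assumes each domain SLM is exposed as a simple callable; the `experts` dictionary and keyword sets are hypothetical. Keyword overlap is the simplest possible router, and in practice a small classifier model often fills this role.

```python
from typing import Callable, Dict, Optional, Set

Model = Callable[[str], str]  # hypothetical: wraps one deployed SLM endpoint


def route(request: str,
          experts: Dict[str, Model],
          keywords: Dict[str, Set[str]],
          fallback: Model) -> str:
    """Send the request to the domain SLM whose keywords it matches most often."""
    words = set(request.lower().split())
    best_domain: Optional[str] = None
    best_hits = 0
    for domain, vocab in keywords.items():
        hits = len(words & vocab)
        if hits > best_hits:  # ties keep the earlier domain
            best_domain, best_hits = domain, hits
    model = experts[best_domain] if best_domain is not None else fallback
    return model(request)


experts = {"legal": lambda r: "legal: " + r, "support": lambda r: "support: " + r}
keywords = {"legal": {"contract", "clause"}, "support": {"refund", "login"}}
print(route("review this contract clause", experts, keywords, lambda r: "general: " + r))
```

Requests that match no domain fall through to `fallback`, which could be a general-purpose model.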

Pattern 2: On-Device AI Assistants

SLMs enable truly private, always-available AI assistants on mobile devices and laptops:

- Offline Document Analysis: Reviewing and summarizing documents without internet connection

- Real-time Meeting Transcription: Processing audio locally for privacy-sensitive meetings

- Personalized Learning: Adaptive educational apps that work without cloud dependency
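
Small models usually have shorter context windows than large hosted ones, so offline document analysis often splits long inputs. Here is a map-reduce sketch. It assumes a hypothetical local `summarize` callable and uses word counts as a rough proxy for tokens.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(text: str, summarize: Callable[[str], str], max_words: int = 1500) -> str:
    """Map-reduce summarization so every model call fits a small context window."""
    chunks = chunk_words(text, max_words)
    if len(chunks) <= 1:
        return summarize(text)  # fits in one call
    partials = [summarize(chunk) for chunk in chunks]  # map step
    combined = " ".join(partials)
    if len(combined.split()) >= len(text.split()):
        raise ValueError("summarize() must shorten its input, or recursion never ends")
    return summarize_long(combined, summarize, max_words)  # reduce step
```

Every call stays on the device, so the document never leaves it. The trade-off is that details can be lost at each reduction step.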

Pattern 3: Multi-Agent Workflow Systems

SLMs excel in agentic AI systems where multiple specialized models collaborate:

- Research Agent: SLM specialized in information gathering and synthesis

- Analysis Agent: SLM optimized for data interpretation and insight generation

- Communication Agent: SLM focused on report writing and presentation creation

This approach allows enterprises to build sophisticated AI workflows where each component is optimized for its specific role, rather than using a single general-purpose model for everything.
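
At its simplest, such a workflow is a sequential hand-off between role-specific agents. The minimal sketch below assumes each agent wraps a hypothetical local SLM callable plus role instructions. Real agent frameworks add tool use, memory and retries on top of this pattern.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    name: str
    instructions: str              # role prompt, e.g. "Summarize the findings."
    model: Callable[[str], str]    # hypothetical wrapper around a specialized SLM

    def run(self, task: str) -> str:
        return self.model(f"{self.instructions}\n\n{task}")


def run_pipeline(agents: List[Agent], task: str) -> str:
    """Sequential hand-off: each agent's output becomes the next agent's input."""
    result = task
    for agent in agents:
        result = agent.run(result)
    return result
```

Because each agent is an independent callable, any single stage can be swapped for a different or larger model without touching the others.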

The Enterprise Decision Matrix: When to Choose SLMs vs LLMs

Use this practical framework to determine the right approach for your specific use case.

Choose SLMs When:

- Task Specificity: Your application focuses on a well-defined domain or task

- Cost Sensitivity: Budget constraints make LLM APIs economically unviable at scale

- Privacy Requirements: Handling sensitive customer, financial, or proprietary data

- Latency Demands: Real-time or interactive applications requiring fast response

- Offline Capability: Applications that must function without internet connectivity

- Edge Deployment: Running AI directly on mobile devices, IoT sensors, or remote locations

Choose LLMs When:

- General Knowledge: Applications requiring broad world knowledge across diverse topics

- Complex Reasoning: Tasks involving sophisticated logical reasoning across domains

- Creative Generation: Content creation requiring high creativity and novelty

- Research Prototyping: Early-stage exploration where requirements are unclear

- Multi-modal Tasks: Applications combining text, image, and audio understanding
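
The two checklists can be encoded as a first-pass screening helper. This is a hypothetical sketch, and the requirement labels are made up for illustration. It treats offline and edge deployment as hard constraints, reflecting the comparison table's point that LLMs require cloud infrastructure.

```python
from typing import Set

SLM_SIGNALS = {"task_specific", "cost_sensitive", "private_data",
               "low_latency", "offline", "edge"}
LLM_SIGNALS = {"general_knowledge", "complex_reasoning", "creative",
               "prototyping", "multimodal"}
HARD_SLM = {"offline", "edge"}  # must run without cloud connectivity


def recommend(requirements: Set[str]) -> str:
    """Count matched checklist items; hard constraints override the tally."""
    unknown = requirements - SLM_SIGNALS - LLM_SIGNALS
    if unknown:
        raise ValueError(f"unknown requirements: {sorted(unknown)}")
    if requirements & HARD_SLM:
        return "SLM"
    slm = len(requirements & SLM_SIGNALS)
    llm = len(requirements & LLM_SIGNALS)
    if slm == llm:
        return "evaluate both"
    return "SLM" if slm > llm else "LLM"
```

A tie means you should run the proof-of-concept comparison from Phase 2 of the roadmap rather than decide on paper.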

Implementation Roadmap: Adopting SLMs in Your Organization

Transitioning to an SLM-first approach requires careful planning and execution.

Phase 1: Assessment and Use Case Identification (Weeks 1-2)

- Audit current AI applications and identify candidates for SLM migration

- Evaluate data privacy, latency, and cost requirements for each use case

- Prioritize applications with clear ROI and lower migration complexity
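
One way to make the prioritization step explicit is a simple score: estimated savings divided by migration effort, with extra weight for privacy-sensitive workloads. All fields and the 1.5x privacy weight are hypothetical choices for you to adapt, not a standard formula.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UseCase:
    name: str
    annual_savings_usd: float      # current API spend minus projected SLM cost
    migration_effort: int          # team estimate: 1 (trivial) to 5 (major rework)
    handles_sensitive_data: bool


PRIVACY_WEIGHT = 1.5  # hypothetical boost for privacy-sensitive workloads


def priority_score(case: UseCase) -> float:
    if case.migration_effort < 1:
        raise ValueError("migration_effort must be at least 1")
    weight = PRIVACY_WEIGHT if case.handles_sensitive_data else 1.0
    return case.annual_savings_usd * weight / case.migration_effort


def prioritize(cases: List[UseCase]) -> List[UseCase]:
    """Highest expected value per unit of migration effort first."""
    return sorted(cases, key=priority_score, reverse=True)
```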

Phase 2: Model Selection and Procurement (Weeks 3-6)

- Evaluate available SLMs (Microsoft Phi-3, Google Gemma, Mistral 7B, etc.)

- Conduct proof-of-concept testing with your specific data and tasks

- Select deployment infrastructure (on-premises, cloud VMs, edge devices)
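
For the proof-of-concept step, even a tiny harness that runs every candidate over the same labeled examples beats anecdotal testing. The sketch below assumes each candidate (for example a Phi-3, Gemma or Mistral 7B deployment) is wrapped as a hypothetical prompt-to-text callable. Exact-match accuracy suits classification and extraction tasks. Open-ended generation needs rubric-based or human evaluation.

```python
from typing import Callable, Dict, List, Tuple

Generate = Callable[[str], str]  # hypothetical wrapper around one candidate model


def evaluate(candidates: Dict[str, Generate],
             test_set: List[Tuple[str, str]]) -> Dict[str, float]:
    """Exact-match accuracy of each candidate on shared (prompt, expected) pairs."""
    if not test_set:
        raise ValueError("test_set must not be empty")
    scores = {}
    for name, generate in candidates.items():
        correct = sum(
            generate(prompt).strip().lower() == expected.strip().lower()
            for prompt, expected in test_set
        )
        scores[name] = correct / len(test_set)
    return scores
```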

Phase 3: Fine-tuning and Customization (Weeks 7-12)

- Prepare domain-specific training datasets

- Fine-tune selected SLMs for your specific applications

- Establish evaluation metrics and quality assurance processes
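
Dataset preparation mostly means converting existing records into the format your fine-tuning framework expects and holding out an evaluation split. The sketch below assumes a chat-style `messages` schema, which many fine-tuning tools accept; check your framework's documentation for its exact field names. The `input`/`output` record keys are hypothetical.

```python
import json
import random
from typing import Dict, List, Tuple


def to_chat_jsonl(records: List[Dict[str, str]], system_prompt: str) -> List[str]:
    """Convert {"input", "output"} records into one JSON line per training example."""
    lines = []
    for record in records:
        example = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": record["input"]},
            {"role": "assistant", "content": record["output"]},
        ]}
        lines.append(json.dumps(example, ensure_ascii=False))
    return lines


def train_eval_split(lines: List[str], eval_fraction: float = 0.1,
                     seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle deterministically, then hold out a fraction for evaluation."""
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)
    n_eval = max(1, int(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]
```

Writing `"\n".join(train)` to a `.jsonl` file yields one example per line. Keep the held-out split fixed (same seed) so that every fine-tuning run is compared against the same evaluation data.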

Phase 4: Deployment and Scaling (Weeks 13+)

- Deploy SLMs to production environment

- Implement monitoring, logging, and performance tracking

- Scale successful implementations across the organization
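
Monitoring can start as a rolling window over recent requests with alert thresholds. Here is a minimal in-process sketch with illustrative thresholds. In production, these numbers would usually be exported to an existing metrics and alerting system rather than computed in the application.

```python
import math
from collections import deque
from typing import List


class RollingMonitor:
    """Track the last `window` requests and flag latency or error-rate breaches."""

    def __init__(self, window: int = 100, max_p95_ms: float = 500.0,
                 max_error_rate: float = 0.02):
        self.latencies = deque(maxlen=window)  # oldest entries drop off automatically
        self.failures = deque(maxlen=window)
        self.max_p95_ms = max_p95_ms
        self.max_error_rate = max_error_rate

    def record(self, latency_ms: float, ok: bool) -> None:
        self.latencies.append(latency_ms)
        self.failures.append(0 if ok else 1)

    def p95(self) -> float:
        """Nearest-rank 95th percentile of the current window."""
        ordered = sorted(self.latencies)
        rank = max(1, math.ceil(0.95 * len(ordered)))
        return ordered[rank - 1]

    def alerts(self) -> List[str]:
        if not self.latencies:
            return []
        triggered = []
        if self.p95() > self.max_p95_ms:
            triggered.append("p95 latency")
        if sum(self.failures) / len(self.failures) > self.max_error_rate:
            triggered.append("error rate")
        return triggered
```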

Future Trends: Where SLM Technology is Heading

The SLM ecosystem continues to evolve rapidly, with several key trends shaping their future development.

Specialized Hardware Integration

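New processors designed for efficient local inference are emerging, most visibly the neural processing units (NPUs) in AMD's Ryzen AI and Intel's Core Ultra laptop chips. Data-center accelerators such as Google's TPU v5 and NVIDIA's H200 are built primarily for large-model training and serving, though they can serve small models as well.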

Federated Learning Capabilities

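SLMs are well suited to federated learning, in which models are trained across decentralized devices without centralizing sensitive data. A small model can be fine-tuned on the device itself, and only model updates, not raw data, are sent back for aggregation. This enables privacy-preserving model improvement while maintaining data isolation.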
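
The server-side step of federated averaging (FedAvg), the canonical federated learning baseline, is just a weighted mean of the parameter vectors that devices send back. Here is a minimal sketch that represents flattened parameters as plain lists. Real systems also handle client sampling, secure aggregation and stragglers.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """FedAvg aggregation: mean of client parameter vectors weighted by local data size."""
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    averaged = [0.0] * len(client_weights[0])
    for weights, n_examples in zip(client_weights, client_sizes):
        for i, w in enumerate(weights):
            averaged[i] += w * n_examples / total
    return averaged


# Two devices: the one with 3x more local data pulls the average toward its weights.
print(federated_average([[1.0, 2.0], [3.0, 6.0]], [1, 3]))  # [2.5, 5.0]
```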

Automated Model Optimization

Tools like Neural Magic's SparseML and Google's TensorFlow Model Optimization Toolkit are making it increasingly easy to prune, quantize, and otherwise optimize SLMs for specific deployment scenarios with less manual tuning.
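
Pruning is one of the compressions such tools automate. Its simplest form, unstructured magnitude pruning, zeroes the smallest weights until a target sparsity is reached. This plain-Python sketch shows the idea and is not SparseML's API.

```python
from typing import List, Sequence


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero the smallest-|w| fraction of weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # how many weights to zero out
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


print(magnitude_prune([0.5, -0.1, 0.05, -0.8], sparsity=0.5))  # [0.5, 0.0, 0.0, -0.8]
```

Pruned models usually need some fine-tuning afterward to recover accuracy. The zeros only translate into speedups when the inference runtime or hardware can exploit sparsity.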

Related Reading

- Agentic AI: Your New Virtual Coworker is Here

- Transformer Architecture 2025: Complete Guide to Efficient AI Models

- Enterprise AI Implementation 2025: Strategy to Production

The era of "bigger is better" in AI is giving way to a more nuanced understanding of efficiency, specialization, and practical deployment. While LLMs will continue to drive research boundaries, SLMs are becoming the workhorse of enterprise AI—delivering reliable, cost-effective, and privacy-preserving intelligence where it matters most. Organizations that embrace this shift now will gain significant competitive advantage in the evolving AI landscape.

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

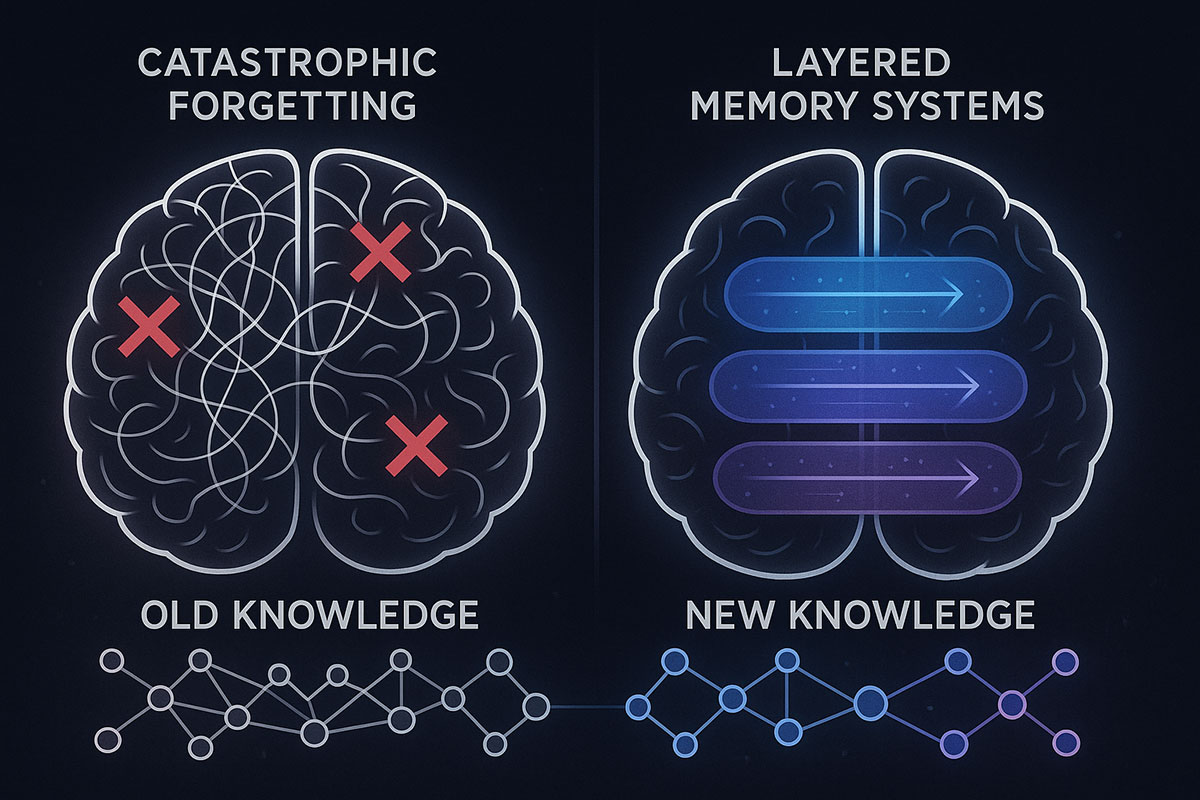

Google's HOPE Model: AI That Finally Learns Continuously (Catastrophic Forgetting Solved)

Google just unveiled HOPE, a self-modifying AI architecture that solves catastrophic forgetting—the fundamental problem preventing AI from learning continuously. For the first time, AI can absorb new knowledge without erasing what it already knows. Here's why this changes everything.

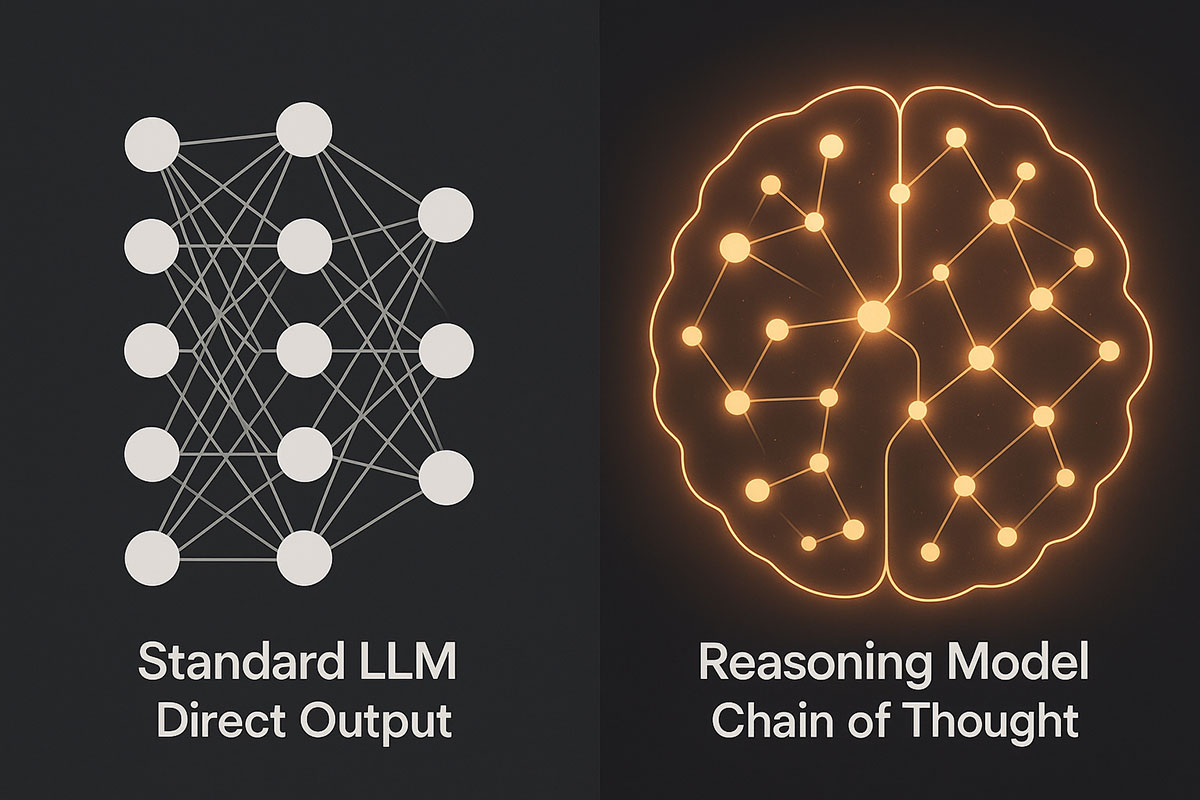

AI Reasoning Models Explained: OpenAI O1 vs DeepSeek V3.2 - The Next Leap Beyond Standard LLMs (November 2025)

Reasoning models represent a fundamental shift in AI architecture. Unlike standard language models that generate answers instantly, these systems deliberately "think" through problems step-by-step, achieving breakthrough performance in mathematics, coding, and scientific reasoning. Discover how O1 and DeepSeek V3.2 are redefining what AI can accomplish.

Why Smaller AI Models (SLMs) Will Dominate Over Large Language Models in 2025: The On-Device AI Revolution

The AI landscape is shifting from "bigger is better" to "right-sized is smarter." Small Language Models (SLMs) are delivering superior business outcomes compared to massive LLMs through dramatic cost reductions, faster inference, on-device privacy, and domain-specific accuracy. This 2025 guide explores why SLMs represent the future of enterprise AI.