Introduction: The New "Third Parent" in the Room

It started with a few kids using ChatGPT to write essays. Now, in 2025, artificial intelligence is as fundamental to the school experience as the backpack and the lunchbox. One recent report found that 86% of students and 85% of teachers actively use AI tools in their day-to-day school lives. The Economist recently ran a cover story on "How AI is rewiring childhood," and for good reason: the change is profound, rapid, and, frankly, a little terrifying for many parents.

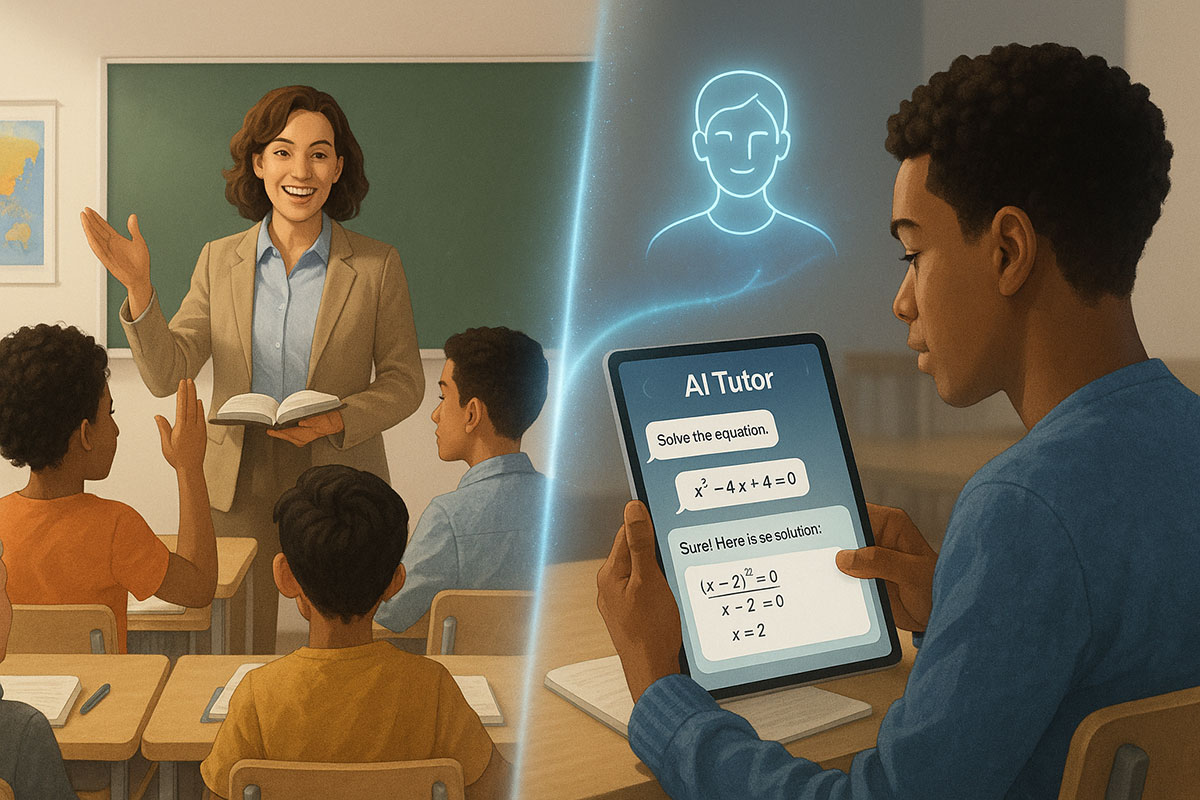

We are no longer debating whether AI should be in schools. It is already there. The new question for parents is: How do we ensure this technology acts as a powerful tutor rather than a crutch? This guide cuts through the hysteria to give you the ground truth about AI in your child’s classroom this year.

The Good: Hyper-Personalized Learning is Finally Here

For decades, the "factory model" of education—one teacher, thirty students, same pace for everyone—has been broken. AI is finally fixing it.

1. The 24/7 Personal Tutor

Imagine your child is stuck on a quadratic equation at 8 PM. In the past, they would stay stuck (or ask you, and let's be honest, you forgot algebra years ago). Today, AI tools act as infinitely patient tutors. Good ones don't just give the answer; they explain the process step by step, adapting the explanation to whatever is confusing the student. This helps level the playing field for students whose families can't afford private human tutors.

2. Closing the Literacy Gap

AI tools are proving miraculous for students with learning differences like dyslexia or ADHD. Voice-to-text features, instant summarization of complex texts, and "rewrite this in simpler terms" commands are helping neurodivergent students keep up with peers in ways that were impossible just a few years ago.

3. Unlocking Creativity (Yes, Really)

While we worry about AI killing creativity, we are seeing the opposite in many art and coding classes. Students are using image generators to storyboard films they write, or using coding assistants to build complex apps they could never have debugged on their own. The barrier to creating something has been lowered, even as the effort needed to generate raw content has all but disappeared.

The Bad: The "Hollow Learning" Trap

However, the anxiety parents feel is justified. There are real risks that schools are still scrambling to manage.

1. The Critical Thinking Atrophy

The biggest danger isn't "cheating" in the traditional sense; it is the outsourcing of thought. If a student asks an AI to "outline this essay" and then "write the paragraphs," they have skipped the crucial mental struggle of organizing their thoughts. We call this "Hollow Learning"—the work gets done, but the neural pathways aren't formed.

2. The Privacy Black Box

Data privacy remains a massive, murky issue. While platforms like ChatGPT Edu promise data security, the ecosystem of third-party "learning apps" is vast and unregulated. Your child's behavioral data, learning speed, and struggles are being collected. Who owns that profile of your child? In 2025, we still don't have a clear answer.

3. The Reality Gap

There is a growing disconnect between what students can produce with AI and what they know. Teachers report a surge in students who turn in university-level essays but struggle to answer basic questions about the topic in class. This "knowledge illusion" can set students up for massive failure when they face proctored exams or real-world situations where AI isn't available.

What Parents Can Do: A 3-Step Strategy

Banning AI is impossible (and counterproductive). Instead, adopt a strategy of "Managed Engagement."

1. Establish the "Sandwich Method" Rule

Teach your kids the Sandwich Method for homework:

- Top Bread (Human): The student does the initial brainstorming, outlining, and questioning. They drive the intent.

- Meat/Filling (AI): The student uses AI to generate research summaries, check code, or suggest alternative phrasings.

- Bottom Bread (Human): The student must edit, verify, and synthesize the final output. They must be the "Human in the Loop" who signs off on the quality.

2. Talk About "AI Hygiene"

Just as we teach dental hygiene, we must teach digital hygiene. Have open conversations about:

- Hallucinations: Show them examples where AI lies confidently. Teach them to never trust an unverified fact.

- Bias: Discuss how AI models can reproduce stereotypes found in the data they were trained on.

- Ownership: Ask, "If the AI wrote this sentence, is it really yours?"

3. Check the School's Policy (It's Changed)

School policies are evolving monthly. Some schools have moved to "blue book" exams (pen and paper) to combat AI misuse, while others are building AI use into graded assignments. Make sure you know the rules so your child doesn't accidentally commit academic dishonesty. Read our report on student AI usage survey data to see where your school stands compared to national trends.

Conclusion: Raising "AI-Native" Humans

The goal of school in 2025 isn't to train robots; it's to train humans who can direct robots. The children who will succeed aren't the ones who use AI to hide from work, but the ones who use it to do better work. As a parent, your role has shifted from "homework enforcer" to "ethics guide." It is a harder job, but a more important one.