Large Language Models Explained: The Brains Behind GPT-5 and Beyond

LLMs like GPT-5 are transforming industries, but how do they actually work? This post explains the building blocks of large language models in simple terms.

TrendFlash

Introduction: The LLM Revolution

Large Language Models (LLMs) have become the defining technology of the 2020s. ChatGPT, GPT-5, Claude, Gemini—these systems have captured public imagination and reshaped how millions interact with AI. Yet beneath the hype and impressive demos lies fascinating science. Understanding how LLMs actually work is essential for anyone building with them, evaluating their capabilities, or making decisions about enterprise adoption.

This comprehensive guide demystifies the architecture, training, and deployment of LLMs in 2025. Whether you're a developer, executive, or AI enthusiast, understanding these systems enables smarter decisions about their application.

What Is a Large Language Model?

Core Definition

An LLM is a deep neural network trained on massive text datasets to predict the next word (or token) in a sequence. This deceptively simple objective—predicting the next token—leads to emergent capabilities that enable translation, summarization, reasoning, coding, and creative writing.

Why "Large"?

"Large" refers to scale across multiple dimensions:

- Parameters: Modern LLMs contain billions to trillions of learned weights

- Training Data: Trained on hundreds of billions of tokens from internet text, books, code repositories

- Computational Cost: Training requires massive clusters, costing millions of dollars and months of compute time

- Context Windows: Modern LLMs maintain context over thousands or hundreds of thousands of tokens

This scale is not incidental—it's fundamental. Models trained at smaller scales don't exhibit the remarkable capabilities that have captured attention.

The Transformer Architecture: Understanding the Engine

Why Transformers Won

Before 2017, sequence modeling used Recurrent Neural Networks (RNNs), which processed text word-by-word sequentially. The Transformer architecture, introduced in "Attention Is All You Need," revolutionized this by enabling parallel processing of entire sequences through the self-attention mechanism.

Self-Attention: The Core Innovation

Self-attention allows each token to simultaneously consider all other tokens in the sequence, computing relevance weights dynamically. Rather than processing linearly, Transformers understand relationships between distant tokens immediately.

This enables:

- Parallelization: All tokens processed simultaneously during training (order of magnitude faster)

- Long-Range Dependencies: Understanding relationships across entire documents

- Gradient Flow: Better gradients for learning in deep networks

- Scalability: Performance improves predictably with more parameters and data

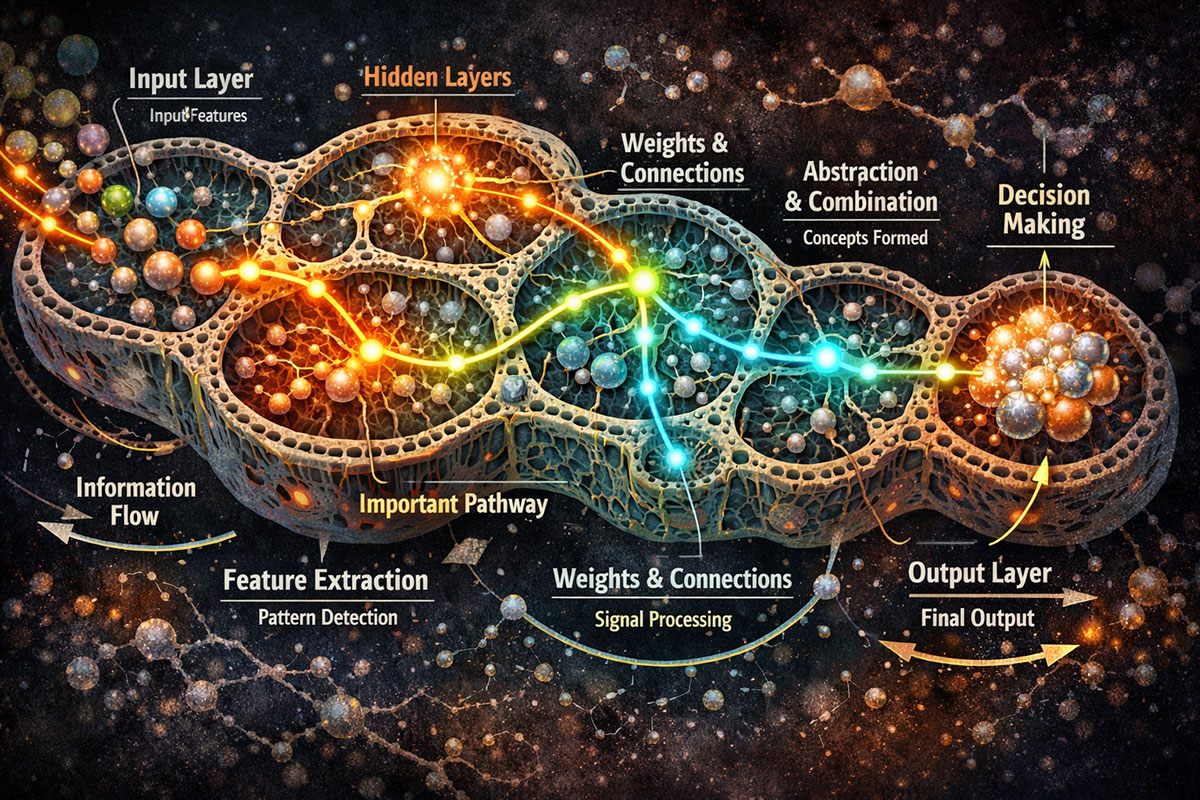

Architecture Layers

Transformers stack identical layers, each containing:

- Multi-Head Self-Attention: Multiple attention mechanisms in parallel, each learning different relationships

- Feed-Forward Networks: Fully connected layers applying nonlinearities

- Residual Connections: Connections bypassing layers, enabling training of very deep networks

- Layer Normalization: Stabilizing activations during training

Decoding (during inference) adds cross-attention layers connecting to encoder output (in sequence-to-sequence tasks) or generates text token-by-token using the learned patterns.

Training LLMs: From Raw Text to Intelligence

Phase 1: Pre-Training on Massive Data

LLMs start as randomly initialized neural networks. Through pre-training on hundreds of billions of tokens, they learn language patterns, factual knowledge, reasoning skills, and even coding ability.

The training objective is deceptively simple: predict the next token given previous tokens. Yet this objective, applied at scale, leads to learning that captures:

- Grammar and syntax

- Factual information about the world

- Logical reasoning patterns

- Coding patterns and algorithms

- Social and cultural knowledge

This is remarkable: from a simple prediction objective emerges general knowledge. This phenomenon, called emergent capability, remains partially mysterious. Models taught only to predict the next token somehow learn to reason, translate languages, and write code.

Phase 2: Instruction Fine-Tuning

Pre-trained models are powerful but sometimes uncontrolled. The next phase involves fine-tuning on curated datasets of high-quality instruction-following examples.

Example training data:

- Input: "Translate to French: Hello, how are you?"

- Output: "Bonjour, comment allez-vous?"

Through thousands of such examples, models learn to follow user instructions and provide useful responses.

Phase 3: Reinforcement Learning from Human Feedback (RLHF)

The final phase uses human preferences to refine behavior. Rather than maximizing simple objectives like predicting the next token, RLHF optimizes for outputs humans prefer.

Process:

- Model generates multiple responses to prompts

- Human evaluators rank responses by quality

- RL algorithm updates model to maximize probability of preferred responses

- Process repeats with new data

This produces models that are more helpful, harmless, and honest—what users want in practical LLMs.

Capabilities, Limitations, and Future Directions

What LLMs Excel At

- Text generation and creative writing

- Question answering and information retrieval

- Code generation and debugging

- Translation and summarization

- Reasoning about complex scenarios

- Explaining concepts at multiple levels

What LLMs Struggle With

- Hallucination: Confidently generating false information

- Recency: Knowledge cutoff means outdated information

- Arithmetic: Simple math sometimes wrong

- Real-Time Adaptation: Can't learn from interactions during use

- Causality: Correlation vs. causation sometimes confused

- Consistency: Can change "opinions" across conversations

Frontier Developments in 2025

The field is rapidly evolving. Recent developments include:

- Extended Context: Models now handle 100K+ tokens of context

- Multimodal: LLMs increasingly process images, audio, video alongside text

- Reasoning Models: Systems spending more compute on reasoning before answering

- Smaller Efficient Models: Smaller models matching larger ones on many tasks

- Autonomous Agents: LLMs powering autonomous agents that take action

Practical Considerations for LLM Deployment

Cost-Performance Trade-offs

Larger models are more capable but more expensive. Organizations must balance:

- Accuracy vs. latency vs. cost

- General-purpose models vs. fine-tuned specialists

- API-based services vs. self-hosted deployments

Safety and Alignment

LLMs can generate harmful content. Organizations using LLMs should consider:

- Filtering inappropriate outputs

- Transparency about AI system limitations

- Prompt engineering to guide desired behavior

- Ethical governance frameworks

Integration Patterns

Practical LLM deployment often combines:

- Retrieval-Augmented Generation (RAG): Combining LLMs with knowledge bases

- Fine-Tuning: Adapting models to specific domains

- Prompt Engineering: Crafting effective instructions

- Monitoring: Tracking performance and errors

Resources and Further Learning

Conclusion

Large Language Models represent a fundamental shift in computing. They're not just better search engines or chatbots—they're new computational tools enabling previously impossible applications.

Understanding LLM fundamentals is essential for anyone working with AI in 2025 and beyond. From architecture to training to deployment, this knowledge informs smarter decisions about technology adoption and responsible usage.

Continue exploring the evolution of NLP, prompt engineering mastery, and emerging AI breakthroughs.

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

Opening the Black Box: AI's New Mandate in Science

AI is discovering proteins and simulating complex systems, but can we trust its answers if we don't understand its reasoning? The scientific community has issued a new mandate: open the black box. We explore the cutting-edge techniques transforming AI from an opaque predictor into a transparent, reasoning partner in the scientific process.

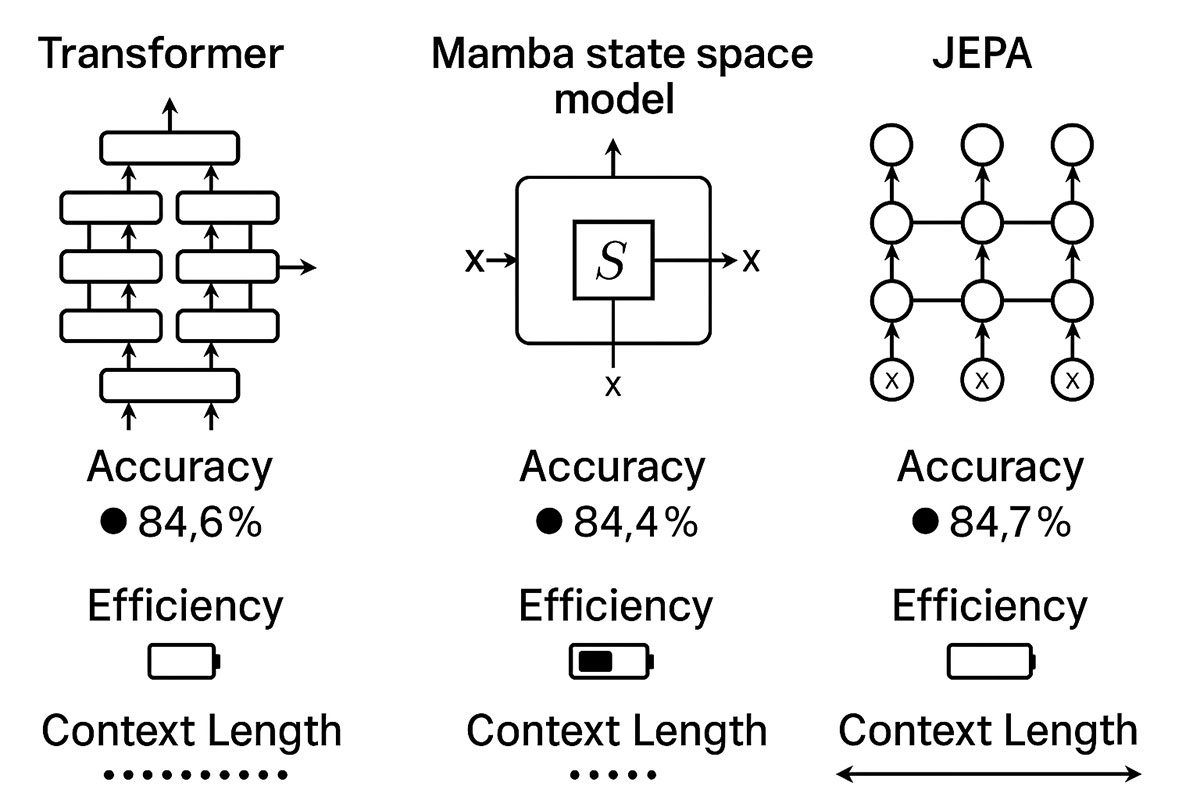

Deep Learning Architectures That Actually Work in 2025: From Transformers to Mamba to JEPA Explained

The deep learning landscape is shifting dramatically. While Transformers dominated the past five years, emerging architectures like Mamba and JEPA are challenging the status quo with superior efficiency, longer context windows, and competitive accuracy. This guide compares real-world performance, implementation complexity, and use cases to help you choose the right architecture.

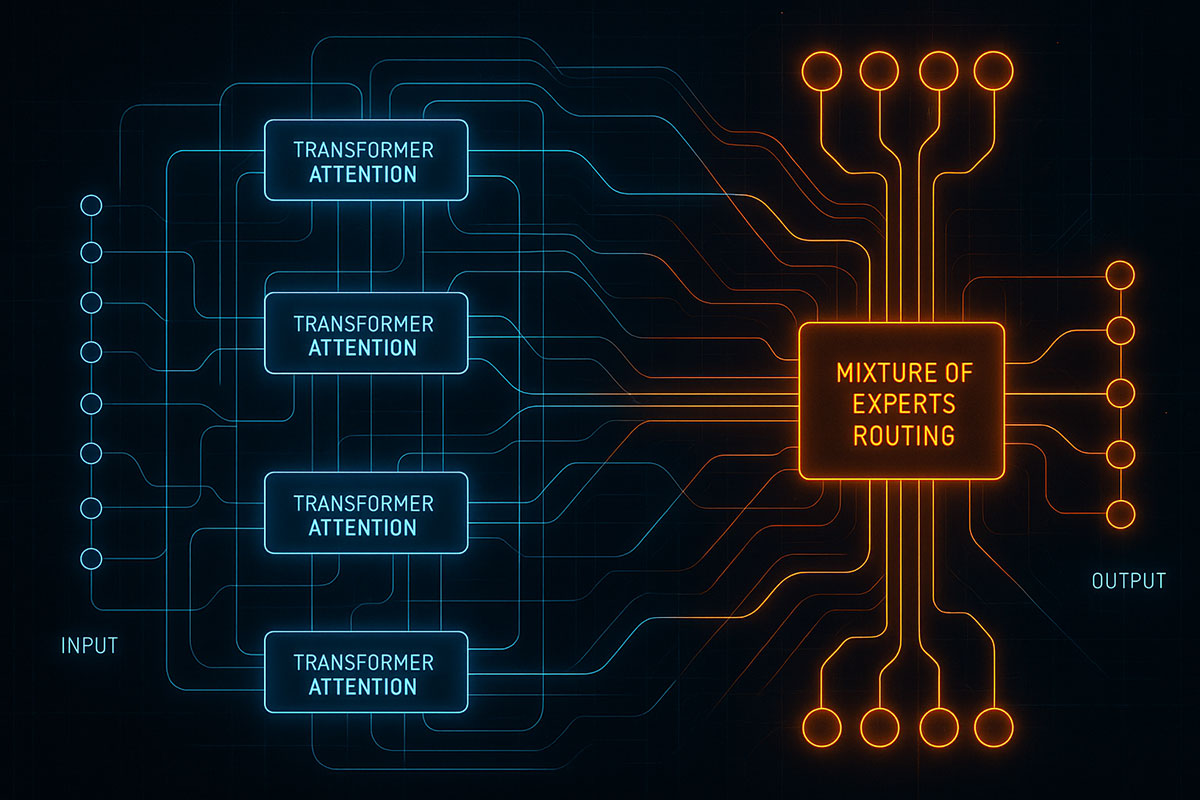

Deep Learning Architectures You Need to Know in 2025

From Transformers dominating NLP to Mixture of Experts revolutionizing efficiency, discover the deep learning architectures shaping AI innovation in 2025 and beyond.