AI for Climate Action in 2025: How Deep Learning Is Tackling Global Warming

Deep learning is fighting global warming in 2025. From predicting extreme weather to optimizing renewable energy, AI is taking on climate change.

TrendFlash

Introduction: From Idea to Reality

You have an AI idea. Now what? This guide walks through the entire journey from "I think AI could help with X" to an actual production system with real users.

This is the practical playbook.

Phase 1: Validate the Idea (Weeks 1-4)

Step 1: Define the Problem Precisely

Don't: "Use AI to improve productivity"

Do: "Help customer service reps respond to emails 2x faster using AI"

How: Interview potential users and understand their actual pain points.

Step 2: Check If AI Is Needed

Question: Does this problem actually need AI?

- Traditional software might solve it better

- Rules-based approach might suffice

- Is the problem novel enough to need AI?
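A quick way to answer these questions is to build a rules-based baseline and score it on a few hand-labeled examples. The sketch below is a minimal Python illustration, assuming the customer-service email example from Step 1; the categories, keywords, and sample emails are all hypothetical. If a baseline like this already meets your accuracy target, you may not need a model at all.

```python
# Hypothetical keyword rules for routing customer-service emails.
RULES = {
    "refund": ["refund", "money back", "chargeback"],
    "shipping": ["tracking", "delivery", "shipped"],
    "account": ["password", "login", "locked out"],
}


def classify_by_rules(email: str) -> str:
    """Return the first category whose keyword appears in the email, else "other"."""
    text = email.lower()
    for category, keywords in RULES.items():
        if any(keyword in text for keyword in keywords):
            return category
    return "other"


def baseline_accuracy(examples: list[tuple[str, str]]) -> float:
    """Fraction of (email, expected_category) pairs the rules classify correctly."""
    if not examples:
        return 0.0
    correct = sum(classify_by_rules(email) == label for email, label in examples)
    return correct / len(examples)


# A tiny hand-labeled sample (hypothetical); a real check needs many more emails.
sample = [
    ("I want my money back for order 123", "refund"),
    ("Where is my delivery?", "shipping"),
    ("I'm locked out of my account", "account"),
    ("Can you recommend a product?", "other"),
]
print(f"Rules baseline accuracy: {baseline_accuracy(sample):.0%}")
```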

Step 3: Assess Feasibility

- Is training data available? (crucial for AI)

- Do you have the expertise?

- What's the timeline?

- What's the budget?

Step 4: Talk to 20 Potential Users

Aim to get at least 10 of them genuinely interested before building. If you can't convince people the product is needed, they won't buy it.

Phase 2: Build MVP (Weeks 5-12)

Step 1: Gather Training Data

- How much data do you have?

- How much do you need? (depends on problem)

- Is it labeled? (if supervised learning)

- Data quality is critical
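Before choosing an approach, it helps to audit what you actually have. This minimal sketch assumes records are dicts with a `text` field and an optional `label` field (hypothetical names); it reports dataset size, how much is labeled, duplicates after normalizing case and whitespace, and class balance.

```python
from collections import Counter


def audit_dataset(records: list[dict]) -> dict:
    """Summarize size, labeling coverage, duplicates, and class balance."""
    # Normalize case and surrounding whitespace so near-identical texts count as duplicates.
    texts = [r["text"].strip().lower() for r in records]
    labeled = [r["label"] for r in records if r.get("label") is not None]
    return {
        "total": len(records),
        "labeled_fraction": len(labeled) / len(records) if records else 0.0,
        "duplicate_texts": len(texts) - len(set(texts)),
        "class_counts": dict(Counter(labeled)),
    }


records = [
    {"text": "Where is my order?", "label": "shipping"},
    {"text": "where is my order? ", "label": "shipping"},
    {"text": "Reset my password", "label": "account"},
    {"text": "Do you ship to Canada?"},  # unlabeled
]
print(audit_dataset(records))
```

A heavily skewed `class_counts` or a low `labeled_fraction` is an early sign that data collection will take longer than planned.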

Realistic time: 2-4 weeks (often longer than expected)

Step 2: Choose Your Approach

Option A: Use Existing API (Fastest)

- Use an API from OpenAI, Anthropic, or Google

- Build a wrapper around it

- Time: 1-2 weeks

- Cost: Minimal ($0-500)

- Advantage: Fast to market

- Disadvantage: Not defensible
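For Option A, the "wrapper" is mostly prompt construction plus error handling. The sketch below is provider-agnostic: `complete` is a hypothetical stand-in for whatever function calls your provider's official SDK, and the prompt template is illustrative. It retries failed calls with exponential backoff; in production you would typically catch only your SDK's transient error types rather than every exception.

```python
import time
from typing import Callable

# Hypothetical type: any function that sends a prompt to a hosted model and
# returns its text. Wire it to your provider's official SDK.
CompleteFn = Callable[[str], str]

PROMPT_TEMPLATE = (
    "You are helping a customer service rep. Draft a concise, polite reply "
    "to the email below.\n\nEmail:\n{email}\n\nReply:"
)


def draft_reply(
    email: str,
    complete: CompleteFn,
    max_retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Fill the prompt template and call the model, retrying with exponential backoff."""
    if max_retries < 1:
        raise ValueError("max_retries must be at least 1")
    prompt = PROMPT_TEMPLATE.format(email=email.strip())
    for attempt in range(max_retries):
        try:
            return complete(prompt).strip()
        except Exception:  # narrow this to your SDK's transient error types
            if attempt == max_retries - 1:
                raise  # out of retries: surface the last error
            sleep(base_delay * 2**attempt)  # waits 1s, 2s, 4s, ... by default
    raise AssertionError("unreachable")


# Usage with a stand-in model that fails once, then succeeds.
calls: list[str] = []


def flaky_model(prompt: str) -> str:
    calls.append(prompt)
    if len(calls) == 1:
        raise ConnectionError("transient failure")
    return "  Thanks for reaching out! Your refund is on its way.  "


print(draft_reply("Where is my refund?", flaky_model, sleep=lambda seconds: None))
```

Injecting `complete` and `sleep` keeps the wrapper testable without network calls, and makes it easy to switch providers later.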

Option B: Fine-tune Existing Model (Medium)

- Use open-source model (Llama, etc.)

- Fine-tune on your data

- Time: 2-4 weeks

- Cost: $500-5,000 (GPU time)

- Advantage: More differentiated

- Disadvantage: Need some technical skills

Option C: Train Custom Model (Slowest)

- Build from scratch

- Time: 8-16 weeks

- Cost: $10K-100K+

- Advantage: Most defensible

- Disadvantage: Long timeline, expensive

Step 3: Build Simple Version

MVP doesn't mean beautiful. It means functional.

- Basic UI (even command line is fine)

- Integration with your chosen model

- Logging and evaluation

- Don't over-engineer
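For the "logging and evaluation" item, even an append-only JSON Lines file is enough at MVP stage. This sketch, with hypothetical function names, records each input, output, and latency so you can review and score predictions offline later.

```python
import json
import os
import tempfile
import time
from datetime import datetime, timezone
from typing import Callable


def logged_predict(predict: Callable[[str], str], input_text: str, log_path: str) -> str:
    """Run one prediction and append a JSON line with input, output, and latency."""
    start = time.perf_counter()
    output = predict(input_text)
    latency_ms = (time.perf_counter() - start) * 1000
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "input": input_text,
        "output": output,
        "latency_ms": round(latency_ms, 2),
    }
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return output


def load_log(log_path: str) -> list[dict]:
    """Read the log back for offline review and scoring."""
    with open(log_path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Usage: str.upper stands in for a real model call.
log_file = os.path.join(tempfile.mkdtemp(), "predictions.jsonl")
logged_predict(str.upper, "hello", log_file)
print(load_log(log_file)[0]["output"])  # HELLO
```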

Step 4: Test With Real Users

- Get 5-10 real users to try it

- Get their feedback (critical)

- Measure actual metrics (not just gut feeling)

- Iterate based on feedback
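For the customer-service example from Phase 1, "measure actual metrics" could mean timing reps with and without the tool and checking the 2x goal. A minimal sketch with hypothetical pilot timings; it compares medians rather than means so one unusually long email doesn't skew the result.

```python
from statistics import median


def speedup(baseline_minutes: list[float], assisted_minutes: list[float]) -> float:
    """Median handling time without AI divided by median time with AI (2.0 = twice as fast)."""
    return median(baseline_minutes) / median(assisted_minutes)


# Hypothetical pilot timings, in minutes per email.
without_ai = [8.0, 10.0, 12.0, 9.0, 11.0]
with_ai = [5.0, 4.0, 6.0, 5.5, 4.5]

result = speedup(without_ai, with_ai)
print(f"Speedup: {result:.2f}x, target met: {result >= 2.0}")  # Speedup: 2.00x, target met: True
```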

Timeline: 1-2 weeks

Phase 3: Build Production System (Weeks 13-26)

Step 1: Understand What Broke in MVP

- Latency (too slow)

- Accuracy (not good enough)

- User experience (confusing)

- Cost (too expensive to scale)

Step 2: Address Scaling Issues

If accuracy is the problem:

- Get more training data

- Try different model

- Implement better evaluation

- Consider hybrid human-AI
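One common form of hybrid human-AI is confidence-based routing: the model handles cases it is confident about and queues the rest for a person. This sketch assumes your model returns a confidence score between 0 and 1; the `Prediction` type and the 0.8 threshold are hypothetical. Raising the threshold trades automation for accuracy, so it is worth tuning on labeled data.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model score in [0, 1]; check it is calibrated before relying on it


def route(prediction: Prediction, threshold: float = 0.8) -> str:
    """Send confident predictions straight through; queue the rest for a human."""
    return "auto" if prediction.confidence >= threshold else "human_review"


def automation_rate(predictions: list[Prediction], threshold: float = 0.8) -> float:
    """Share of traffic the model handles without a human at this threshold."""
    if not predictions:
        return 0.0
    automated = sum(route(p, threshold) == "auto" for p in predictions)
    return automated / len(predictions)


preds = [
    Prediction("refund", 0.95),
    Prediction("shipping", 0.60),
    Prediction("account", 0.85),
]
print([route(p) for p in preds])  # ['auto', 'human_review', 'auto']
print(f"Automated: {automation_rate(preds):.0%}")  # Automated: 67%
```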

If latency is the problem:

- Optimize inference

- Add caching layer

- Use smaller model (if possible)

- Distributed deployment
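A caching layer can be as simple as an in-memory map from normalized input to response, with an expiry time. This is a single-process sketch (the `TTLCache` class is hypothetical); across several servers you would need a shared cache instead. Only cache responses where returning the same answer to the same question is acceptable.

```python
import time
from typing import Callable


class TTLCache:
    """In-memory cache of model responses keyed on normalized input, with expiry."""

    def __init__(self, ttl_seconds: float = 3600.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(text: str) -> str:
        # Collapse case and whitespace so trivially different inputs share an entry.
        return " ".join(text.lower().split())

    def get_or_compute(self, text: str, compute: Callable[[str], str]) -> str:
        key = self._key(text)
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # fresh hit: skip the model call
        result = compute(text)
        self._store[key] = (now, result)
        return result


# Usage: count how often the (stand-in) model is actually called.
calls: list[str] = []


def slow_model(text: str) -> str:
    calls.append(text)
    return f"answer to: {text}"


cache = TTLCache(ttl_seconds=60)
cache.get_or_compute("What is your return policy?", slow_model)
cache.get_or_compute("what is your  RETURN policy?", slow_model)
print(len(calls))  # 1
```

Lowercasing the key suits FAQ-style questions; drop that normalization if case changes the meaning of your inputs.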

If cost is the problem:

- Use cheaper model

- Optimize API usage

- Batch processing

- Fine-tune your own (might be cheaper)
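Batch processing groups many inputs into one model call, which cuts per-request overhead. This sketch assumes a hypothetical `predict_batch` function that accepts a list of inputs and returns one output per input, in order; whether batching actually lowers your bill depends on how your provider or hardware prices requests. (Python 3.12+ also ships `itertools.batched`, which yields tuples.)

```python
from typing import Callable, Iterable, Iterator


def batched(items: Iterable[str], batch_size: int) -> Iterator[list[str]]:
    """Yield consecutive lists of up to batch_size items, preserving order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batch: list[str] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final partial batch


def process_in_batches(
    items: list[str],
    predict_batch: Callable[[list[str]], list[str]],
    batch_size: int = 32,
) -> list[str]:
    """Make one model call per batch instead of one per item."""
    results: list[str] = []
    for batch in batched(items, batch_size):
        outputs = predict_batch(batch)
        if len(outputs) != len(batch):
            raise RuntimeError("model returned a different number of outputs than inputs")
        results.extend(outputs)
    return results


# Usage with a stand-in batch model that records each request's size.
request_sizes: list[int] = []


def fake_batch_model(batch: list[str]) -> list[str]:
    request_sizes.append(len(batch))
    return [text.upper() for text in batch]


outputs = process_in_batches([f"email {i}" for i in range(70)], fake_batch_model, batch_size=32)
print(request_sizes)  # [32, 32, 6]
```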

Step 3: Build Proper Infrastructure

- Database (store results)

- API (if needed)

- Monitoring (track performance)

- Logging (debug issues)

- Testing (catch regressions)
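For "testing (catch regressions)", a golden-set test run in CI is a simple starting point: a fixed set of hand-checked inputs that every new model, prompt, or code change must still handle. In this sketch, `predict` is a hypothetical keyword placeholder for your real model call, and the 90% bar is an assumption you would set from your current production accuracy.

```python
# Golden set: hand-checked inputs with their expected outputs (hypothetical examples).
GOLDEN_SET = [
    ("I want a refund for my order", "refund"),
    ("My package never arrived", "shipping"),
    ("I can't log in to my account", "account"),
]

MIN_ACCURACY = 0.9  # hypothetical bar; set it from your current production accuracy


def predict(text: str) -> str:
    """Hypothetical placeholder for the deployed model; swap in your real call."""
    text = text.lower()
    if "refund" in text:
        return "refund"
    if "package" in text or "arrived" in text:
        return "shipping"
    return "account"


def golden_set_accuracy(model) -> float:
    """Fraction of golden-set examples the model gets exactly right."""
    correct = sum(model(text) == expected for text, expected in GOLDEN_SET)
    return correct / len(GOLDEN_SET)


def test_no_accuracy_regression():
    # Collected and run by pytest in CI on every model, prompt, or code change.
    accuracy = golden_set_accuracy(predict)
    assert accuracy >= MIN_ACCURACY, f"golden-set accuracy {accuracy:.0%} is below {MIN_ACCURACY:.0%}"
```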

Step 4: Evaluate Rigorously

Metrics to track:

- Accuracy (how often is it right?)

- Precision/Recall (depending on use case)

- Latency (how fast?)

- Cost per prediction

- User satisfaction
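The metrics above can be computed with a few lines of standard-library Python. This sketch assumes a binary task (for example, "is this email a refund request?") with True as the positive class, and uses hypothetical evaluation data. It reports p95 latency (nearest-rank method) rather than the mean because users notice the slow tail.

```python
import math


def classification_metrics(y_true: list[bool], y_pred: list[bool]) -> dict[str, float]:
    """Accuracy, precision, and recall for a binary task (True = positive class)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need equal-length, non-empty label lists")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t and p for t, p in pairs)
    fp = sum(p and not t for t, p in pairs)
    fn = sum(t and not p for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def p95(latencies_ms: list[float]) -> float:
    """95th-percentile latency using the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("no latencies recorded")
    ordered = sorted(latencies_ms)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def cost_per_prediction(total_cost: float, num_predictions: int) -> float:
    return total_cost / num_predictions


# Hypothetical evaluation results.
y_true = [True, True, False, False, True]
y_pred = [True, False, False, True, True]
print(classification_metrics(y_true, y_pred))  # accuracy 0.6, precision and recall 2/3
latencies = [120, 95, 110, 480, 105, 130, 100, 115, 98, 102]
print(f"p95 latency: {p95(latencies)} ms")  # p95 latency: 480 ms
print(f"Cost per prediction: ${cost_per_prediction(42.0, 1200):.4f}")
```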

Step 5: Deploy Beta

- Limited rollout first (10% of users)

- Monitor closely

- Gather feedback

- Iterate

- Then scale
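A common way to do a 10% rollout is deterministic hashing: hash each user ID into one of 10,000 buckets and enable the feature for the lowest buckets. This is a sketch with a hypothetical `in_rollout` helper. Because assignment depends only on the user ID and feature name, users keep the same experience across sessions, and raising the percentage only adds users.

```python
import hashlib


def in_rollout(user_id: str, percent: float, feature: str = "ai-beta") -> bool:
    """Return True if this user falls in the first `percent`% of 10,000 hash buckets."""
    # Salting with the feature name gives each feature an independent split.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 10_000  # 0..9999, stable for a given user and feature
    return bucket < percent * 100  # percent=10 -> buckets 0..999


users = [f"user-{i}" for i in range(10_000)]
share = sum(in_rollout(u, 10) for u in users) / len(users)
print(f"{share:.1%} of users in the beta")  # close to 10%, not exactly
```

To widen the rollout, raise `percent` in steps (for example 10, 25, 50, 100); everyone already in the beta stays in it.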

Phase 4: Production & Maintenance (Ongoing)

Monitoring

- Track model performance over time

- Watch for model drift (accuracy degrading as real-world inputs change)

- Monitor costs

- Track user satisfaction
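Drift monitoring needs ground truth for at least some predictions, for example when a rep edits or rejects a drafted reply. Assuming you have that signal, this sketch (hypothetical class name, window size, and tolerance) tracks accuracy over the most recent outcomes and flags drift once it falls clearly below the accuracy measured at launch.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Track accuracy over the most recent labeled outcomes and flag drift."""

    def __init__(self, baseline_accuracy: float, window: int = 500, tolerance: float = 0.05):
        self.baseline = baseline_accuracy  # accuracy measured at launch
        self.tolerance = tolerance
        self.outcomes: deque = deque(maxlen=window)  # oldest outcomes drop off automatically

    def record(self, was_correct: bool) -> None:
        self.outcomes.append(was_correct)

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self) -> bool:
        """True once a full window's accuracy is more than `tolerance` below baseline."""
        acc = self.accuracy()
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and acc is not None and acc < self.baseline - self.tolerance


monitor = RollingAccuracyMonitor(baseline_accuracy=0.90, window=100)
for i in range(100):
    monitor.record(i % 5 != 0)  # simulated: 80% of recent predictions correct
print(monitor.accuracy(), monitor.drifted())  # 0.8 True
```

Waiting for a full window avoids false alarms from the first handful of outcomes.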

Retraining

- What accuracy drop should trigger retraining?

- How often? (monthly, quarterly, etc.)

- Automate if possible
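A retraining trigger can combine the two questions above: retrain early if accuracy drops too far, otherwise on a fixed schedule. This sketch assumes a quarterly (90-day) schedule and a 5-point accuracy drop as the trigger; both values, and the `should_retrain` name, are illustrative rather than recommendations.

```python
from datetime import date, timedelta
from typing import Optional


def should_retrain(
    last_trained: date,
    today: date,
    current_accuracy: Optional[float],
    baseline_accuracy: float,
    max_age: timedelta = timedelta(days=90),  # roughly quarterly (illustrative)
    max_drop: float = 0.05,  # 5 percentage points (illustrative)
) -> tuple[bool, str]:
    """Return (retrain?, reason): early on an accuracy drop, otherwise on schedule."""
    if current_accuracy is not None and baseline_accuracy - current_accuracy > max_drop:
        return True, "accuracy drop"
    if today - last_trained >= max_age:
        return True, "scheduled"
    return False, "no trigger"


print(should_retrain(date(2025, 1, 1), date(2025, 2, 1), 0.82, 0.90))  # (True, 'accuracy drop')
print(should_retrain(date(2025, 1, 1), date(2025, 4, 15), 0.89, 0.90))  # (True, 'scheduled')
```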

Iteration

- Collect user feedback constantly

- Prioritize improvements

- Release updates regularly

- A/B test changes
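To decide whether an A/B change really helped, compare the two variants' success rates (for example, the share of AI drafts reps send without edits) with a two-proportion z-test. This is a standard-library sketch using the pooled normal approximation; the example counts are hypothetical.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int) -> tuple[float, float]:
    """Compare success rates of variants A and B; return (z, two-sided p-value).

    Uses the pooled normal approximation, which is reasonable when each group has
    at least about 10 successes and 10 failures.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all outcomes identical; the test is undefined")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical: share of AI drafts sent without edits, old prompt (A) vs new prompt (B).
z, p = two_proportion_z_test(400, 1000, 460, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Fix the sample size and significance level before the test starts; checking repeatedly and stopping as soon as p < 0.05 inflates the false-positive rate.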

Timeline Summary

| Phase | Duration | Key Activities |
|---|---|---|
| Validation | 4 weeks (weeks 1-4) | Talk to users, validate problem |
| MVP | 8 weeks (weeks 5-12) | Build basic version, get feedback |
| Production | 14 weeks (weeks 13-26) | Scale, optimize, deploy |
| Ongoing | Indefinite | Monitor, maintain, improve |

Total to launch: 26 weeks (about 6 months)

Budget Summary

| Phase | Estimated cost |
|---|---|
| Validation | $0-5K (your time) |
| MVP | $2-10K (compute, tools) |
| Production | $5-50K (infrastructure, team) |
| Ongoing | $1-10K/month (compute, hosting) |

Common Pitfalls to Avoid

- Over-building before validation

- Not talking to users early

- Using ML when rules-based would work

- Underestimating data collection time

- Ignoring model monitoring/drift

- Poor documentation (critical later)

Conclusion: The Path to Production

Building AI systems is not magic. It's disciplined project management plus technical execution: validate first, build an MVP, iterate, then scale to production. The same playbook works for most AI projects.

Explore more on AI projects at TrendFlash.

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

Opening the Black Box: AI's New Mandate in Science

AI is discovering proteins and simulating complex systems, but can we trust its answers if we don't understand its reasoning? The scientific community has issued a new mandate: open the black box. We explore the cutting-edge techniques transforming AI from an opaque predictor into a transparent, reasoning partner in the scientific process.

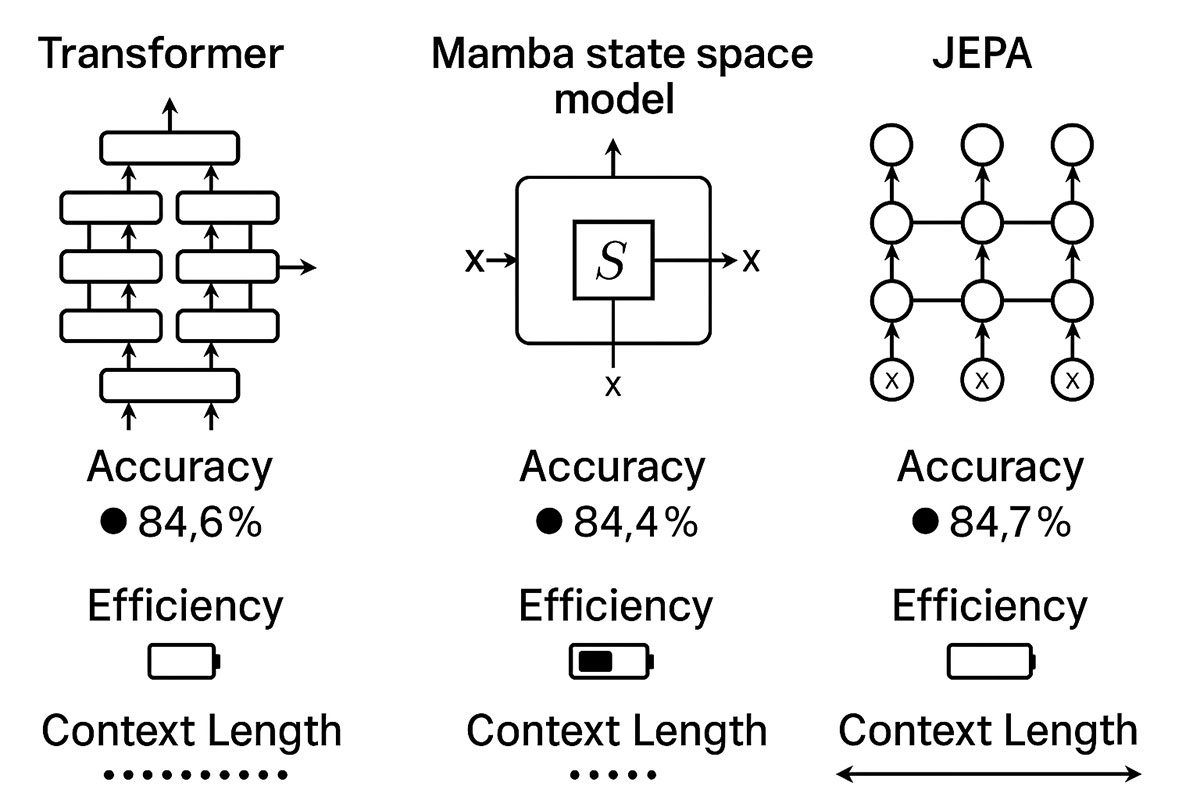

Deep Learning Architectures That Actually Work in 2025: From Transformers to Mamba to JEPA Explained

The deep learning landscape is shifting dramatically. While Transformers dominated the past five years, emerging architectures like Mamba and JEPA are challenging the status quo with superior efficiency, longer context windows, and competitive accuracy. This guide compares real-world performance, implementation complexity, and use cases to help you choose the right architecture.

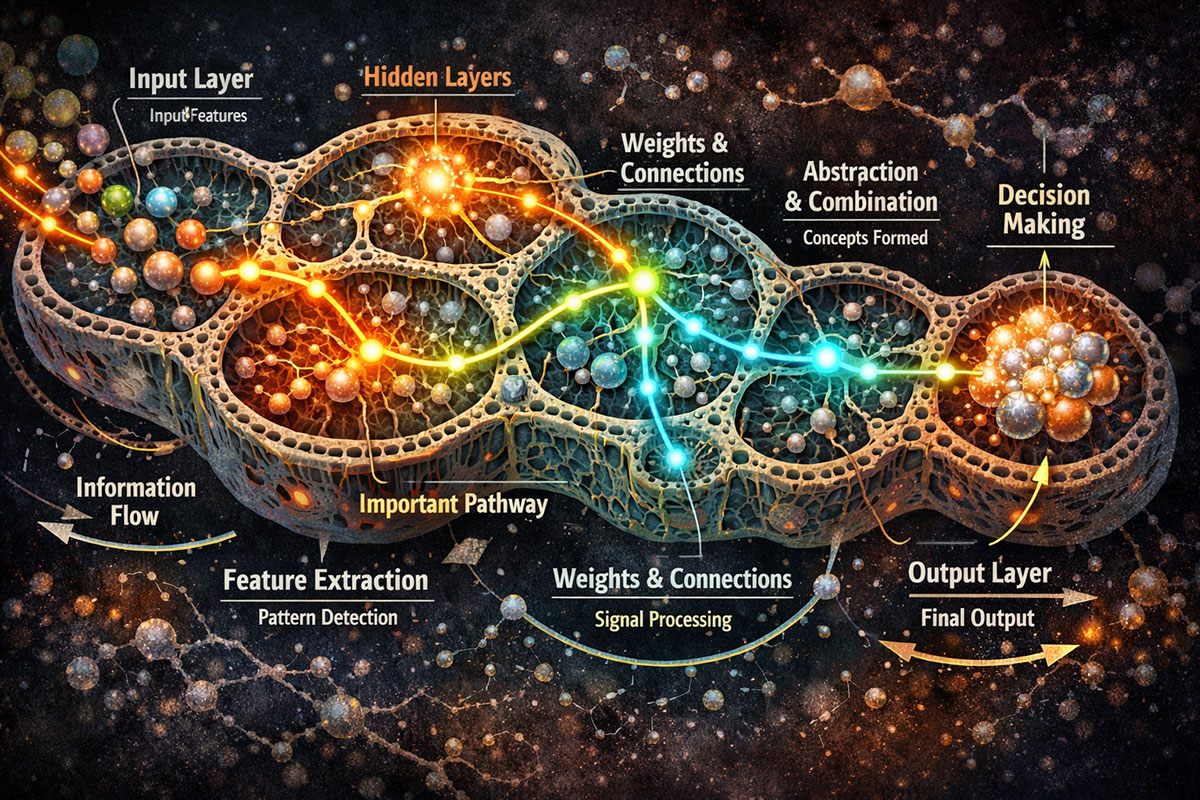

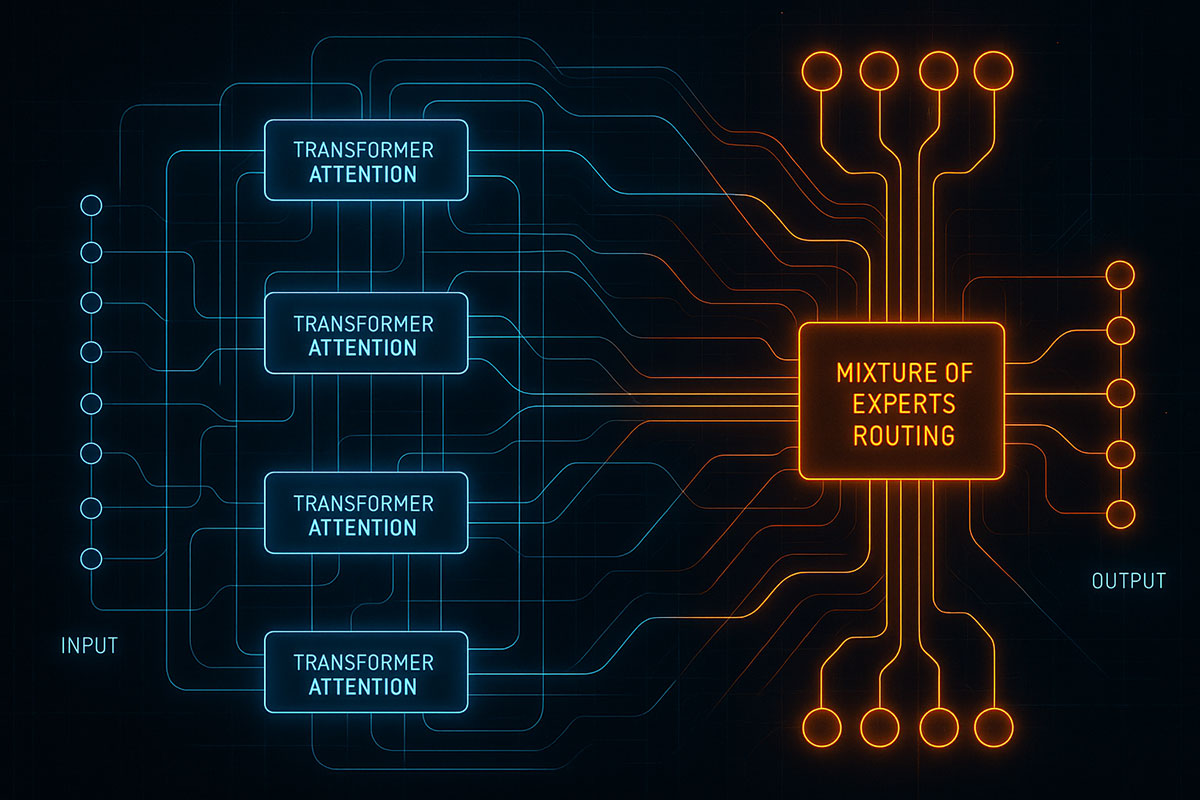

Deep Learning Architectures You Need to Know in 2025

From Transformers dominating NLP to Mixture of Experts revolutionizing efficiency, discover the deep learning architectures shaping AI innovation in 2025 and beyond.