Unlocking Language: How Natural Language Processing Powers ChatGPT, Gemini, and GPT-5

NLP is the core technology that lets machines talk, write, and even reason with us. This post explains how NLP powers tools like ChatGPT, Gemini, and GPT-5, and why it is transforming communication in 2025.

TrendFlash

Introduction: Language Is AI's Greatest Frontier

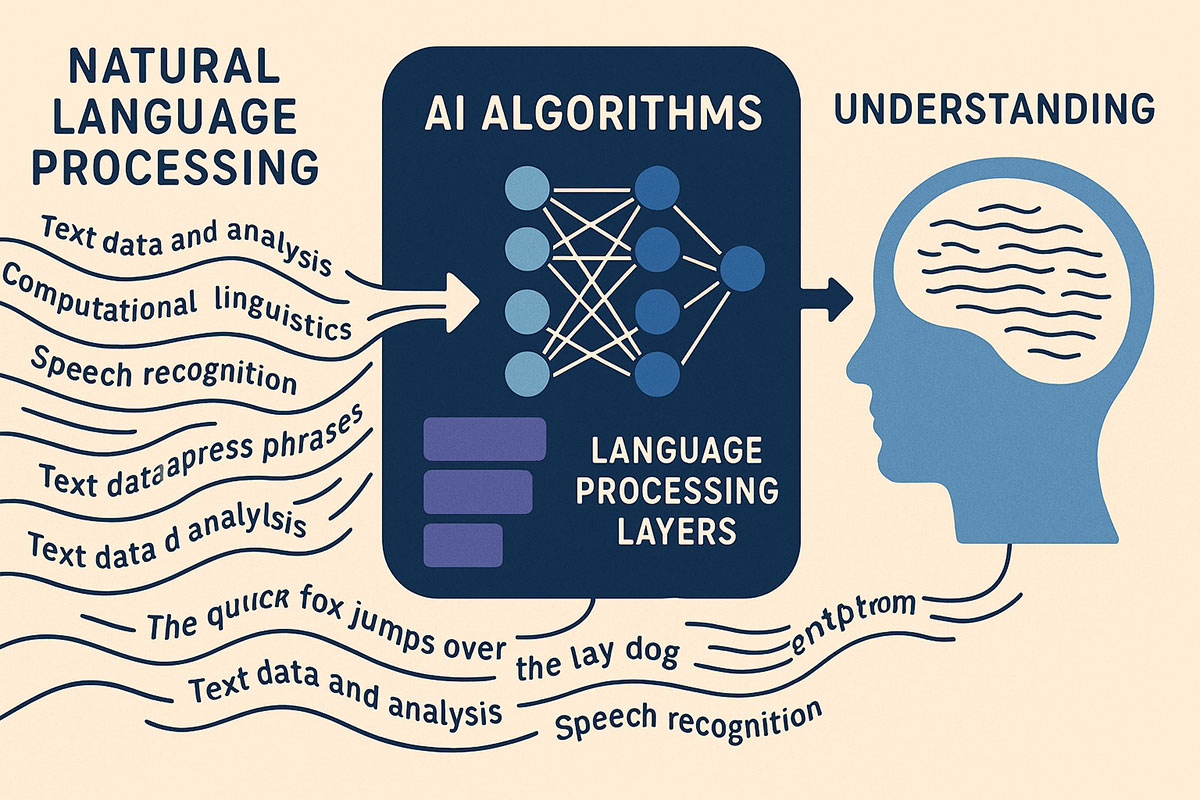

Language is humanity's most powerful tool for transmitting information, expressing ideas, and coordinating action. In 2025, Natural Language Processing (NLP) has evolved from simple keyword matching into systems that let machines understand, reason about, and generate human language with impressive fluency.

From ChatGPT and Gemini understanding nuanced questions to systems that translate between hundreds of languages, NLP powers some of AI's most visible achievements. Understanding NLP is central to understanding modern AI.

What Is NLP?

Core Definition

NLP is the field of AI focused on enabling machines to process, analyze, and respond to human language in meaningful ways. This includes:

- Text Understanding: Parsing grammar, semantics, context, and intention

- Text Generation: Producing coherent, contextually appropriate text

- Speech Processing: Converting spoken language to text and back

- Language Inference: Drawing conclusions from textual premises

- Information Extraction: Pulling structured data from unstructured text

Why Language Processing Is Hard

Language presents challenges that seem simple to humans but are profound for machines:

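- Ambiguity: "Bank" can mean a financial institution or the edge of a river, depending on context

- Context Dependency: The meaning of a word or pronoun depends on the surrounding text; "it" may refer to something introduced several sentences earlier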

- Figurative Language: Metaphors, idioms, sarcasm

- Variability: Infinite ways to express the same meaning

- Knowledge Requirements: Understanding text requires world knowledge

These challenges drove NLP research for decades before modern deep learning approaches achieved breakthroughs.

The Evolution of NLP: From Rules to Learning

Phase 1: Rule-Based Systems (1960s-1990s)

Early NLP relied on hand-coded grammatical rules. Systems could parse grammatically correct sentences following patterns humans specified.

Limitations: Any deviation from the expected patterns caused failures, and the combinatorial explosion of rules needed to cover real-world language made scaling impractical.

Phase 2: Statistical Approaches (1990s-2010s)

Statistical methods learned patterns from data rather than relying on hand-coded rules. Techniques like Hidden Markov Models, Conditional Random Fields, and Support Vector Machines showed that patterns learned from data often outperformed carefully engineered rules.

Phase 3: Deep Learning & Embeddings (2010s)

Neural networks, particularly RNNs and LSTMs, enabled end-to-end learning of complex language patterns. Word embeddings (Word2Vec, GloVe) represented words as dense numeric vectors, so that semantically related words sit close together in vector space.
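
To make the idea of embeddings concrete, here is a minimal sketch of comparing words by cosine similarity. The three-dimensional vectors are hand-picked, hypothetical values for illustration only; real Word2Vec or GloVe vectors are learned from data and typically have hundreds of dimensions.

```python
import math

# Hypothetical, hand-picked vectors (an assumption for this example).
# Real embeddings are learned from large corpora.
TOY_EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word, embeddings=TOY_EMBEDDINGS):
    """Return the other word whose vector is closest to `word`."""
    return max(
        (other for other in embeddings if other != word),
        key=lambda other: cosine_similarity(embeddings[word], embeddings[other]),
    )
```

With these toy vectors, `most_similar("king")` picks "queen" over "apple" because the first two vectors point in nearly the same direction.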

Phase 4: Transformers & Pre-Training (2018-present)

The Transformer architecture, introduced in 2017, and large-scale pre-training revolutionized NLP. Models like BERT, GPT, and their successors achieve remarkable capabilities by learning from massive text corpora and then being fine-tuned on specific tasks.

Core NLP Tasks in 2025

Text Classification

Assigning text to predefined categories. Applications:

- Sentiment analysis (positive/negative/neutral)

- Spam detection

- Topic classification

- Intent recognition for chatbots
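
As a rough illustration of text classification, the sketch below trains a tiny Naive Bayes sentiment classifier in plain Python. Naive Bayes is one classical technique chosen here for brevity, not necessarily what any product mentioned in this post uses, and the four training sentences are made up for the example.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    return text.lower().split()


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.label_counts = Counter()            # label -> number of examples
        self.vocabulary = set()

    def train(self, examples):
        for text, label in examples:
            self.label_counts[label] += 1
            for token in tokenize(text):
                self.word_counts[label][token] += 1
                self.vocabulary.add(token)

    def predict(self, text):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, float("-inf")
        for label, doc_count in self.label_counts.items():
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(doc_count / total_docs)
            total_words = sum(self.word_counts[label].values())
            for token in tokenize(text):
                count = self.word_counts[label][token] + 1
                score += math.log(count / (total_words + len(self.vocabulary)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Made-up training data, for illustration only.
TRAINING_DATA = [
    ("great product love it", "positive"),
    ("terrible service very bad", "negative"),
    ("love the great support", "positive"),
    ("bad experience terrible quality", "negative"),
]

classifier = NaiveBayesClassifier()
classifier.train(TRAINING_DATA)
```

After training, `classifier.predict("great support")` returns "positive" and `classifier.predict("terrible bad")` returns "negative". The same structure applies to spam detection or intent recognition by changing the labels.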

Named Entity Recognition (NER)

Identifying people, places, organizations, and dates in text. Applications:

- Information extraction from news articles

- Resume parsing

- Medical record analysis
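
The sketch below shows the input and output shape of an entity extractor using a regular expression for ISO-style dates and a small lookup list. This is a deliberately simple, rule-based stand-in: production NER systems generally use trained models, and the `GAZETTEER` entries and label names here are assumptions chosen for the example.

```python
import re

# Hypothetical lookup list; real systems learn entities from annotated data.
GAZETTEER = {
    "ORG": ["Google", "OpenAI"],
    "LOC": ["Paris", "London"],
}

# Matches dates written as YYYY-MM-DD only.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def extract_entities(text):
    """Return (entity_text, label) pairs in order of appearance."""
    found = []
    for match in DATE_PATTERN.finditer(text):
        found.append((match.start(), match.group(), "DATE"))
    for label, names in GAZETTEER.items():
        for name in names:
            for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
                found.append((match.start(), match.group(), label))
    found.sort(key=lambda item: item[0])
    return [(entity, label) for _, entity, label in found]
```

For example, `extract_entities("Google opened a Paris office on 2024-03-12.")` yields an organization, a location, and a date, in that order.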

Machine Translation

Converting text from one language to another while preserving meaning. Applications:

- Real-time translation services (Google Translate)

- Multilingual document analysis

- Global communication

Question Answering

Providing answers to natural language questions. Applications:

- Customer support chatbots

- Search engines

- Interactive learning systems

Text Summarization

Condensing lengthy documents into concise summaries. Applications:

- News aggregation

- Research paper summarization

- Document management
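
One simple way to summarize is extractive: score each sentence by how frequent its words are in the whole document and keep the top-scoring sentences. The sketch below assumes that approach; modern systems more often generate new (abstractive) summaries with a language model. The stopword list is a small hand-written assumption.

```python
import re
from collections import Counter

# Small, hand-written stopword list (an assumption for this sketch).
STOPWORDS = {"the", "a", "an", "of", "to", "and", "in", "is", "it",
             "that", "for", "on", "with", "as", "are"}


def content_words(text):
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text, max_sentences=1):
    """Keep the sentences whose words are most frequent in the document."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        # Average frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:max_sentences])  # restore original order
    return " ".join(sentences[i] for i in chosen)
```

Sentences that share vocabulary with the rest of the document rank highest, so off-topic sentences tend to be dropped first.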

Dialogue Systems

Maintaining coherent multi-turn conversations. Applications:

- Virtual assistants

- Customer service

- Interactive entertainment

Modern NLP Architecture: Transformers and Beyond

Transformer-Based Approaches

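Modern architectures are built on transformers. Their self-attention mechanism lets every token in a sequence weigh every other token directly, which allows the whole sequence to be processed in parallel and captures long-range dependencies that recurrent models struggle with.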
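
The core computation of self-attention can be sketched in a few lines of plain Python. This is a simplified single-head version of scaled dot-product attention: a real transformer first applies learned projection matrices to produce the queries, keys, and values, and uses multiple heads, both of which are omitted here.

```python
import math


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights); weights[i][j] is how much token i
    attends to token j.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        output = [sum(w * v[j] for w, v in zip(weights, values))
                  for j in range(len(values[0]))]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors used as queries, keys, and values at once.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1, and no token's output depends on another token's output, which is why the rows can be computed in parallel.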

Pre-Training and Fine-Tuning

Modern NLP follows a two-stage approach:

- Pre-Training: Learning general language patterns from massive text corpora

- Fine-Tuning: Adapting pre-trained models to specific tasks with modest task-specific data

Compared with training a task-specific model from scratch, this two-stage approach typically needs far less labeled data and delivers better performance.

Retrieval-Augmented Generation

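Retrieval-augmented generation (RAG) combines a language model with a searchable knowledge base: relevant documents are retrieved at query time and passed to the model so that its response is grounded in them. Applications: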

- Question answering with cited sources

- Customer support with access to product documentation

- Research tools with access to scientific literature
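
The retrieval step can be sketched as follows, under heavy simplification: documents are ranked by word overlap with the question, and the best match is placed into a prompt. Real systems usually retrieve with embedding similarity over a vector index. The `DOCUMENTS` list and the prompt wording are hypothetical, and the call to an actual language model is left out.

```python
import re

# Hypothetical knowledge base, for illustration only.
DOCUMENTS = [
    "The return window for all products is 30 days from delivery.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available by email on weekdays.",
]


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(documents,
                    key=lambda doc: len(question_words & tokenize(doc)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Assemble a prompt that asks the model to answer from cited sources."""
    context = retrieve(question, documents, top_k)
    sources = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(context, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )
```

Calling `build_prompt("How long does shipping take?", DOCUMENTS)` produces a prompt whose only source is the shipping document; that prompt would then be sent to a language model of your choice.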

Conclusion

Natural language processing has grown from an academic curiosity into a practical technology that touches billions of people daily. Understanding NLP fundamentals is essential for anyone working with modern AI systems.

