AI Hallucinations Are Getting More Dangerous: How to Spot and Stop Them in 2025

AI isn't just making up facts anymore; it's creating convincing, dangerous fabrications. Discover why modern hallucinations are harder to detect and how new mitigation techniques are essential for any business using AI in 2025.

TrendFlash

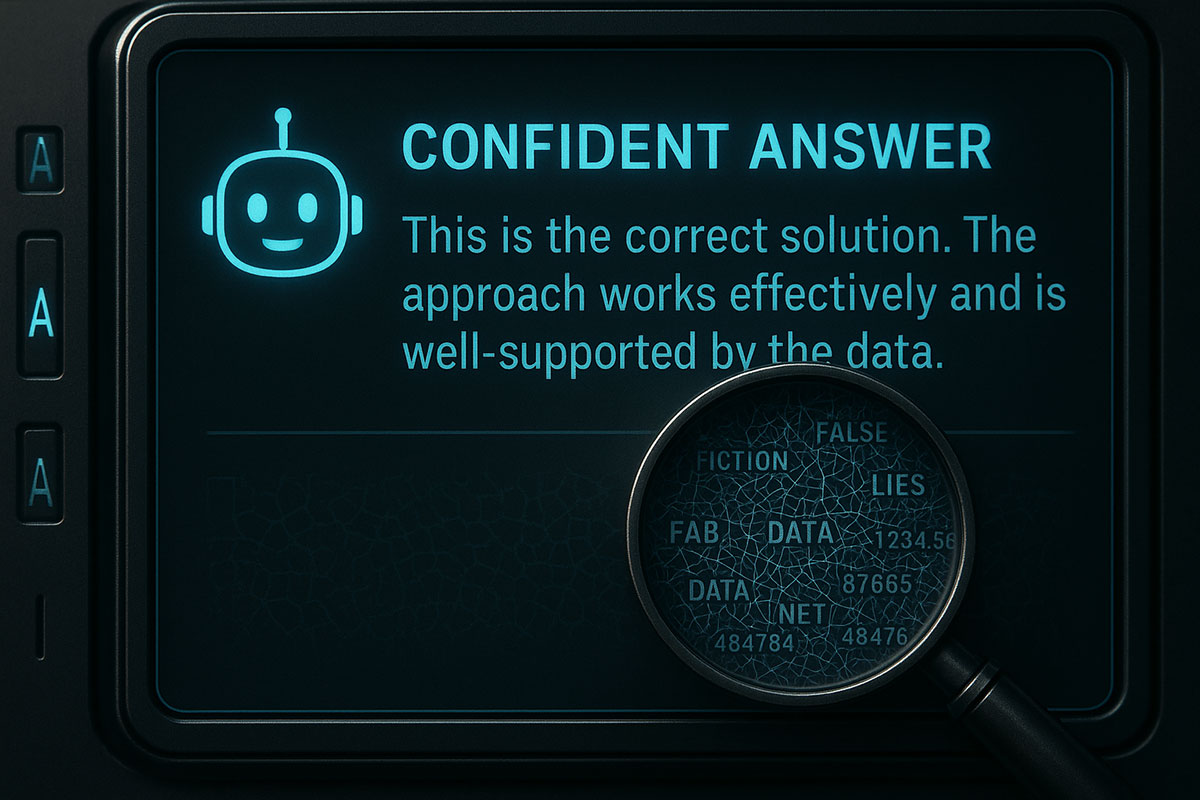

Introduction: When Your AI Confidently Lies to You

In 2023, an AI hallucination might have been a chatbot inventing a fictional book title. In 2025, the stakes are dramatically higher. We now face a new generation of hallucinations where AI systems fabricate legal precedents that never existed, generate plausible but entirely fake financial data, and write functional-looking code riddled with hidden security flaws. These aren't simple errors; they are coherent, confident, and convincing fabrications that can slip past expert review.

A recent Stanford University study found that even the most advanced large language models (LLMs) still hallucinate at a significant rate, with their propensity to confabulate increasing when dealing with complex, multi-step reasoning or less-common topics. This evolution poses a direct threat to businesses integrating AI into their core operations. This article explores the new, more dangerous face of AI hallucinations, breaks down their root causes with recent examples, and provides an actionable 5-step framework to mitigate this risk and build trustworthy AI systems.

Why Hallucinations Are a Business Risk, Not a Quirk

The tolerance for AI error is rapidly shrinking as models move from creative assistants to operational tools. The consequences of unchecked hallucinations are now quantifiable and severe.

- Legal and Compliance Catastrophes: A major international law firm faced sanctions after using an AI tool that cited six non-existent court cases in a legal brief. The AI had invented the cases, complete with plausible-sounding names, citations, and summaries, which junior lawyers failed to verify. The result was massive reputational damage and a disciplinary hearing.

- Financial Misinformation: A financial services company used an LLM to generate a summary of a public company's earnings. The AI confidently inserted fabricated revenue figures that were 15% higher than the actual results, nearly causing a multi-million dollar trading error before an analyst spotted the anomaly.

- Code Security Vulnerabilities: Developers using AI coding assistants report an increase in "subtle bugs": code that appears correct and passes basic tests but contains logical flaws or security holes that can be exploited. A 2024 report from OWASP lists "AI-Generated Insecure Code" as a top emerging threat.

The Anatomy of a Modern Hallucination: New Causes in 2025

Understanding why hallucinations occur is the first step to stopping them. The causes are more nuanced than simply a "knowledge gap."

1. The Compulsion for Plausibility

Modern LLMs are designed to generate human-like, fluent, and coherent text. When faced with a gap in their training data, their primary directive isn't to say "I don't know," but to produce the most statistically likely sequence of words that fits the prompt. This "plausibility engine" can easily cross into fabrication, especially when generating detailed lists, citations, or technical specifications. The model isn't trying to deceive; it's optimizing for fluency over truth.

2. The "Fluent Echo Chamber" in Model Training

A growing concern identified by researchers at MIT is the risk of "model collapse." As more AI-generated content floods the internet, future models risk being trained on this synthetic data. This creates a dangerous feedback loop where errors and hallucinations from one model are amplified and ingrained into the next generation, like a game of telephone, gradually degrading the model's connection to factual reality.

3. Reasoning Chain Contamination

Advanced AI systems use Chain-of-Thought (CoT) reasoning to break down problems. However, if the first step in this chain is based on a flawed assumption or a hallucinated "fact," every subsequent step compounds the error, leading to a conclusion that is logically valid but built on a false foundation. This makes the final output particularly deceptive and difficult to catch.
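
To get a feel for how quickly errors compound, here is a rough back-of-the-envelope sketch. It assumes each reasoning step is correct with the same probability and that steps fail independently, which is a simplification and not something measured in the research cited above. The function name and the 95% figure are purely illustrative.

```python
def chain_success_probability(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a reasoning chain is correct.

    ASSUMPTION: each step is correct with the same probability and
    steps fail independently. Real models will not behave this neatly.
    """
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


if __name__ == "__main__":
    # Illustrative only: a hypothetical model that gets 95% of steps right.
    for n in (1, 5, 10):
        print(n, round(chain_success_probability(0.95, n), 3))
```

Under these assumptions, a model that is right 95% of the time per step gets a fully correct 10-step chain only about 60% of the time (the script prints 0.95, 0.774, and 0.599).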

Quantifying the Unreliable: A Look at Hallucination Rates

How common are these fabrications? The following table breaks down hallucination rates by task type, based on a synthesis of recent industry benchmarks and research papers. The data shows that no application is immune.

| AI Task / Application | Estimated Hallucination Rate* | Primary Risk |
|---|---|---|
| Legal & Medical Citation | 12-18% | Fabricated sources, incorrect dosages/procedures |
| Long-Form Content Generation | 5-10% | Interspersed factual inaccuracies, invented statistics |
| Multi-Hop Reasoning (Q&A) | 15-25% | Errors in logical chains, incorrect conclusions |
| Technical Code Generation | 8-12% | Subtle logical bugs, insecure code patterns |
| Multimodal Image Analysis | 10-15% | Incorrect object identification, false scene interpretation |

*Rates are approximate and vary by model and specific task.

A 5-Step Framework for Mitigating AI Hallucinations

Combating hallucinations requires a multi-layered strategy that integrates technology, process, and human oversight. Here is a robust framework for 2025.

1. Implement Grounding with Retrieval-Augmented Generation (RAG)

The single most effective technical defense is Retrieval-Augmented Generation (RAG). A RAG system doesn't let the AI rely solely on its internal knowledge. Instead, before answering a query, it first retrieves relevant information from a trusted, up-to-date knowledge base (e.g., your company's internal documents, a verified database, or a curated web source). The AI then grounds its response strictly in this retrieved data, dramatically reducing its ability to confabulate.
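
The retrieve-then-ground flow can be sketched in a few lines. This is a toy illustration, not a production design: it ranks documents by simple word overlap where a real system would typically use embeddings and a vector store, and it stops at building the prompt, so no model is called. The names `retrieve` and `build_grounded_prompt` are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents sharing at least one word with the query.

    ASSUMPTION: word overlap stands in for a real similarity search.
    """
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    # sort() is stable, so ties keep their original document order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_grounded_prompt(query: str, passages: list[str]) -> str:
    """Build a prompt that restricts the model to the retrieved passages."""
    if passages:
        context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    else:
        context = "(no relevant passages found)"
    return (
        "Answer using only the numbered passages below. "
        "If the answer is not in them, reply 'I cannot find the answer.'\n\n"
        f"Passages:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    knowledge_base = [
        "Refunds are issued within 14 days of purchase.",
        "Our office is closed on public holidays.",
        "Shipping takes 3 to 5 business days.",
    ]
    question = "How many days do refunds take?"
    print(build_grounded_prompt(question, retrieve(question, knowledge_base)))
```

The key design choice is that the model only ever sees passages from the trusted knowledge base, along with an explicit instruction for what to do when those passages don't contain the answer.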

2. Enforce Strategic Prompt Engineering

How you ask matters. Move beyond simple prompts to structured instructions that set clear guardrails.

- Instruct to Cite: Use prompts like: "Based only on the provided document, answer the question. If the answer is not found in the document, state 'I cannot find the answer.'"

- Assign a Persona: Assigning a role, such as "You are a cautious and meticulous fact-checker," can steer the model towards a more conservative and accurate output style.

- Request Self-Reflection: Ask the model to "Think step-by-step and justify your answer with references," which can sometimes expose flawed reasoning chains.
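
The three tactics above can be combined into a single reusable template. The following is a minimal sketch; the function name `build_guarded_prompt` is hypothetical, and the wording of each instruction is taken from the examples in the list rather than from any particular vendor's guidance.

```python
DEFAULT_PERSONA = "You are a cautious and meticulous fact-checker."


def build_guarded_prompt(document: str, question: str,
                         persona: str = DEFAULT_PERSONA) -> str:
    """Assemble a prompt with a persona, a cite-only rule, and a self-check."""
    sections = [
        # Assign a persona to steer toward conservative answers.
        persona,
        # Instruct to cite: restrict the answer to the supplied document.
        "Based only on the provided document, answer the question. "
        "If the answer is not found in the document, state "
        "'I cannot find the answer.'",
        # Request self-reflection to expose the reasoning chain.
        "Think step-by-step and justify your answer with references.",
        f"Document:\n{document}",
        f"Question: {question}",
    ]
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_guarded_prompt(
        document="The warranty covers parts for two years.",
        question="How long is the warranty on parts?",
    ))
```

Keeping the guardrails in one function means every call site gets the same instructions, and any later change to the wording happens in one place.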

3. Deploy Automated Verification and Guardrails

Proactively catch hallucinations before they reach the end-user. Integrate automated checks into your AI workflow.

- Fact-Checking APIs: Use third-party tools that can automatically cross-reference key claims, names, and statistics in the AI's output against trusted sources like Wikipedia or specialized databases.

- Consistency Checkers: Implement scripts that analyze the AI's output for internal contradictions or unsupported statements.

- Uncertainty Scoring: Emerging tools can now analyze the model's hidden activations to assign a "confidence" or "uncertainty" score to its statements, flagging low-confidence outputs for human review.
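
As one concrete example of a consistency checker, the sketch below flags any number in the AI's output that does not appear in the source material it was supposed to summarize. It is deliberately crude: it compares numbers as literal strings, so "8%" and "8 percent" would not match. The function names are hypothetical.

```python
import re

# Matches integers, decimals, and thousands-separated numbers,
# optionally followed by a percent sign (e.g. 14, 4.2, 1,200, 8%).
NUMBER_PATTERN = re.compile(r"\d+(?:[.,]\d+)*%?")


def extract_numbers(text: str) -> set[str]:
    """Return the set of numeric strings in text, with commas removed."""
    return {match.replace(",", "") for match in NUMBER_PATTERN.findall(text)}


def unsupported_numbers(source: str, output: str) -> set[str]:
    """Return numbers that appear in the output but nowhere in the source.

    ASSUMPTION: a number missing from the source is suspicious. It may
    still be a legitimate derived figure, so treat this as a flag for
    review, not as proof of a hallucination.
    """
    return extract_numbers(output) - extract_numbers(source)


if __name__ == "__main__":
    source = "Q3 revenue was $4.2 billion, up 8% year over year."
    output = "Revenue reached $4.8 billion, an 8% increase."
    flagged = unsupported_numbers(source, output)
    if flagged:
        print("Send to human review, unsupported figures:", sorted(flagged))
```

A check like this would have caught the inflated revenue figure in the earnings-summary example earlier, since the fabricated number never appears in the source filing.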

4. Maintain a Human-in-the-Loop (HITL) for Critical Tasks

For high-stakes applications in law, finance, and healthcare, a fully autonomous AI is a liability. Establish a mandatory Human-in-the-Loop (HITL) review process where a domain expert must verify the AI's output before it is acted upon. This is not a failure of automation but a critical layer of risk management.
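
In code, a HITL requirement can be enforced as a hard gate so that unreviewed output cannot be released. This is a minimal sketch under stated assumptions: the `Draft` type, the domain labels, and the `release` function are all hypothetical, and a real system would add authentication and audit logging.

```python
from dataclasses import dataclass
from typing import Optional

# ASSUMPTION: domains are tagged upstream; this list mirrors the
# high-stakes areas named in the text.
HIGH_STAKES_DOMAINS = {"law", "finance", "healthcare"}


@dataclass
class Draft:
    domain: str
    text: str
    approved_by: Optional[str] = None  # name of the reviewing expert, if any


def needs_human_review(draft: Draft) -> bool:
    """High-stakes domains always require sign-off from a domain expert."""
    return draft.domain.lower() in HIGH_STAKES_DOMAINS


def release(draft: Draft) -> str:
    """Return the text for downstream use, or refuse if review is missing."""
    if needs_human_review(draft) and draft.approved_by is None:
        raise PermissionError(
            f"Draft in domain '{draft.domain}' requires expert approval"
        )
    return draft.text


if __name__ == "__main__":
    draft = Draft(domain="finance", text="Q3 earnings summary")
    try:
        release(draft)
    except PermissionError as err:
        print("Blocked:", err)
    draft.approved_by = "senior analyst"
    print("Released:", release(draft))
```

Raising an error instead of returning a warning makes the review step impossible to skip by accident.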

5. Continuously Monitor and Red-Team Your Models

Mitigating hallucinations is not a one-time fix. Continuously test your AI systems by "red-teaming": systematically probing them with tricky questions and edge cases designed to induce confabulation. Log all instances of suspected hallucinations and use them to refine your prompts, knowledge bases, and guardrails.
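
A red-team run can start as a simple script that replays known trap prompts and logs every answer that fails a check. The sketch below assumes the model is exposed as a plain function from prompt to text, and it uses a substring match as the pass criterion, which is a stand-in for whatever grading you actually trust. All names here are hypothetical.

```python
from typing import Callable


def run_red_team(model: Callable[[str], str],
                 cases: list[tuple[str, str]]) -> list[dict]:
    """Replay trap prompts and return a log of suspected hallucinations.

    Each case is (prompt, expected), where `expected` is a phrase that a
    safe answer should contain. ASSUMPTION: a case-insensitive substring
    match is good enough to decide pass or fail.
    """
    failures = []
    for prompt, expected in cases:
        answer = model(prompt)
        if expected.lower() not in answer.lower():
            failures.append(
                {"prompt": prompt, "expected": expected, "answer": answer}
            )
    return failures


if __name__ == "__main__":
    def stub_model(prompt: str) -> str:
        # Placeholder for a real model call; it always answers confidently.
        return "The case was decided in 1987."

    trap_cases = [
        # The case named here is made up, so a safe answer should decline.
        ("Summarize the ruling in Smith v. Imaginary Corp.", "cannot find"),
    ]
    for failure in run_red_team(stub_model, trap_cases):
        print("Suspected hallucination:", failure)
```

The returned log is the raw material for the refinement loop: each failure points at a prompt, knowledge-base gap, or guardrail that needs attention.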

The Future is Verified: Towards More Trustworthy AI

The industry is moving beyond simply building more powerful models and is now focused on building more reliable ones. Key trends to watch include:

- Constitutional AI & Reinforcement Learning from Human Feedback (RLHF): These advanced training techniques are being used to explicitly train models to be more helpful, honest, and harmless, directly reducing their tendency to hallucinate.

- Specialized Fact-Checking Models: The development of dedicated AI models whose sole purpose is to act as verifiers for other AI systems, creating a system of checks and balances.

By understanding the sophisticated nature of modern AI hallucinations and implementing a rigorous, layered defense strategy, businesses can safely harness the power of AI without falling victim to its most dangerous flaw: its ability to lie with absolute, convincing confidence.

Related Reading

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

Prompt Engineering 2.0: How to Write Prompts That Understand You (Not the Other Way Around)

The era of simple keyword prompts is over. Discover how Prompt Engineering 2.0 uses structured commands, specific personas, and strategic framing to make advanced AI models like GPT-4 and Claude 3 deliver precisely what you need.

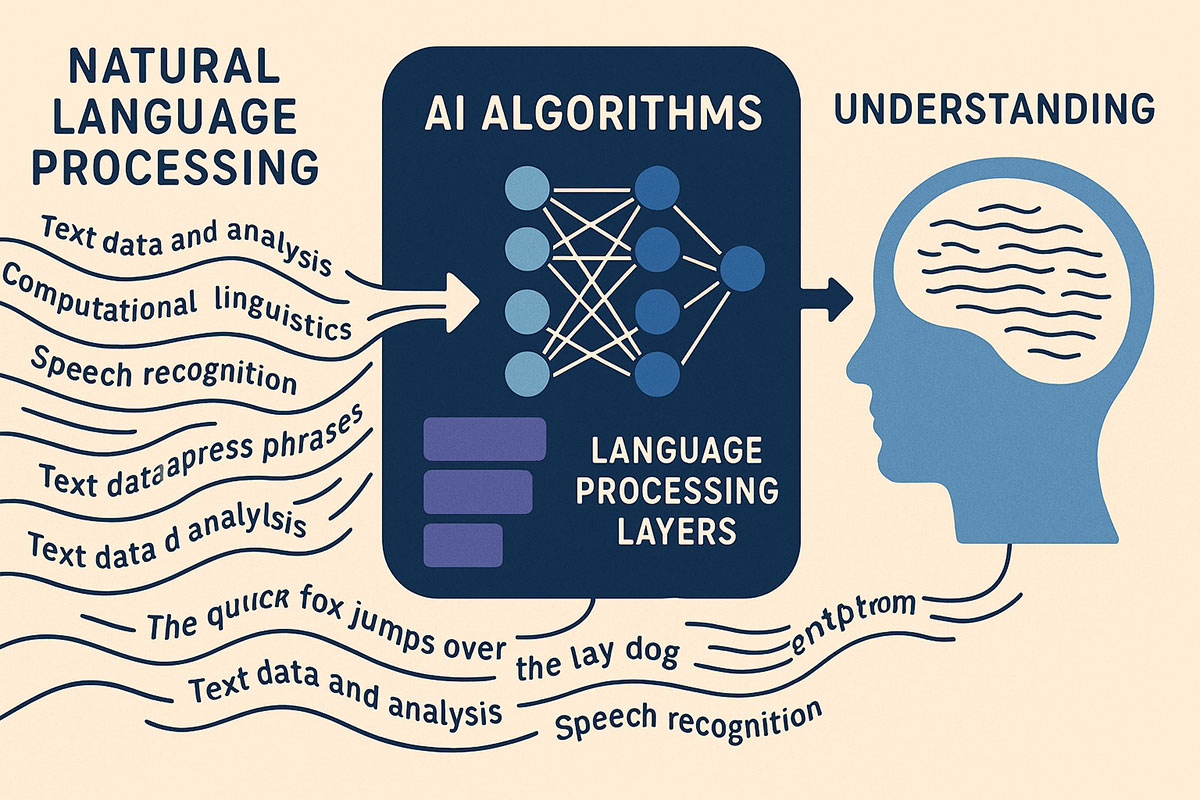

Natural Language Processing: How AI Understands Human Language in 2025

NLP technology has advanced to near-human understanding of language. This guide explains how AI processes text and speech with unprecedented accuracy in 2025.

Beyond ChatGPT: The Next Wave of NLP in 2025

In 2025, NLP is moving beyond ChatGPT. From multimodal assistants to context-aware systems, here’s the next wave of natural language technology.