Efficient AI Models vs Mega Models: Why Smaller May Be Better in 2025

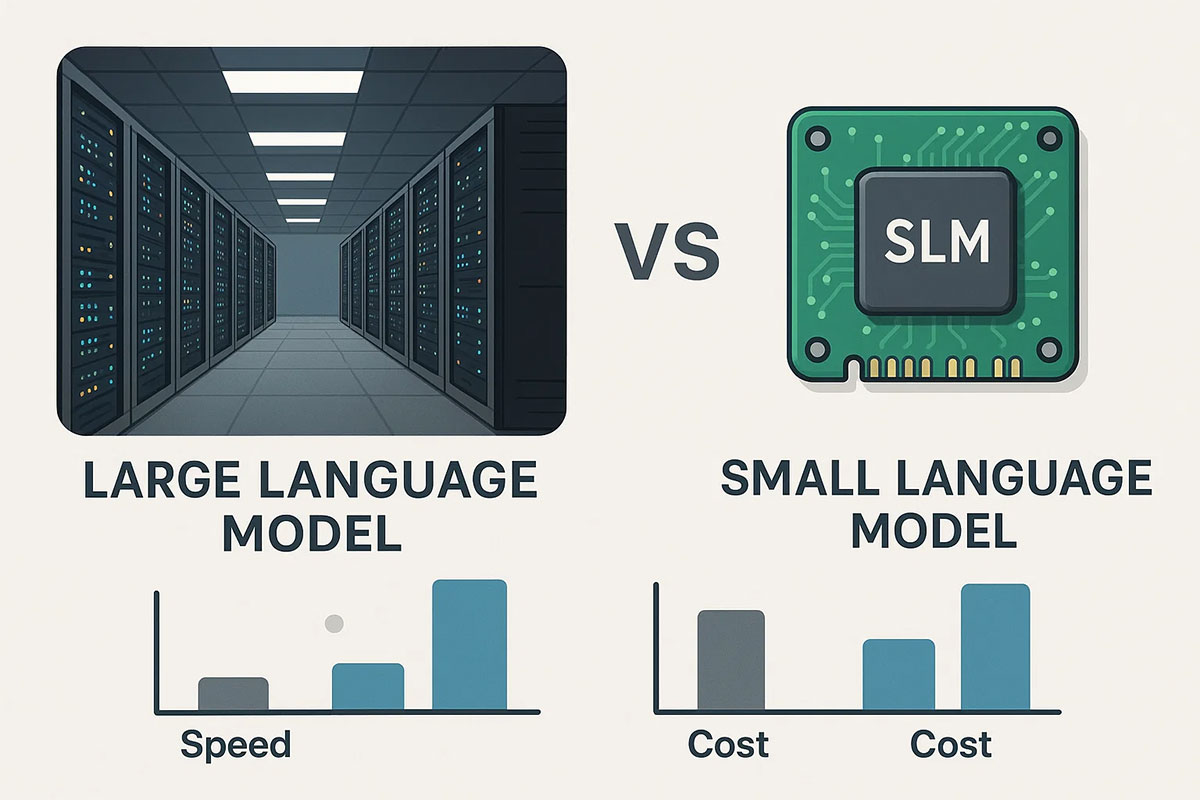

The era of "bigger is better" in AI is evolving. While mega-models grab headlines, efficient smaller models are quietly revolutionizing real-world applications with dramatic cost savings and performance benefits.

TrendFlash

Introduction: The Quiet Revolution in AI Efficiency

Throughout 2024 and into 2025, a significant shift has been reshaping the artificial intelligence landscape. While headlines remain dominated by massive foundation models requiring unprecedented computational resources, a parallel revolution has been occurring with efficient, smaller models that deliver comparable performance at a fraction of the cost. The Stanford AI Index Report 2025 highlights that "2024 was a breakthrough year for smaller AI models," noting that modern compact models can now match the performance that required models 100 times larger just two years ago. This isn't merely an incremental improvement but represents a fundamental transformation in how we approach AI development and deployment.

The driving force behind this shift is both practical and economic. As noted by industry observers, "Smaller models process data faster, reducing latency" while dramatically cutting computational costs. This efficiency breakthrough comes at a crucial moment, when many organizations are grappling with the expense of training and deploying large-scale AI systems. The energy consumption of massive models alone has become increasingly unsustainable: training Grok 3, for example, used about ten times more computational power than its predecessor and consumed energy equivalent to roughly 7% of a typical nuclear reactor's monthly output.

Understanding the Efficiency Advantage

Efficient AI models, often called small language models (SLMs) or lightweight models, differ from their mega counterparts in several fundamental ways. While massive foundation models typically measure their parameters in hundreds of billions, efficient models achieve impressive results with just billions or even millions of parameters. What they sacrifice in theoretical breadth, they gain in practical efficiency, specialization, and deployability.

The Performance Breakthrough

Perhaps the most surprising development has been how quickly the performance gap between large and small models has narrowed. According to the 2025 AI Index Report, "the performance edge between top models is shrinking": "the performance difference between the top and 10th-ranked models fell from 11.9% to 5.4% in a year, and the top two are now separated by just 0.7%". The report describes the frontier as "increasingly competitive" and "increasingly crowded".

This compression at the top suggests that smaller models are catching up to their larger counterparts at a remarkable rate. It also changes the value proposition of massive models, particularly for specialized applications where efficient models can be fine-tuned to excel.

Cost Economics: The Game Changer

The economic argument for efficient AI models has become undeniably compelling. The Stanford AI Index reveals that "the inference cost for a system performing at the level of GPT-3.5 dropped over 280-fold between November 2022 and October 2024". This represents one of the most rapid cost declines in technological history, making capable AI accessible to organizations and developers who previously couldn't afford entry.

To put this in perspective, "the cost of scoring just over 60% on the MMLU has plummeted, from about US$20 per million tokens in November 2022 to 7 cents per million tokens in October 2024". This reduction of roughly 285x in less than two years fundamentally changes who can afford to deploy AI at scale. For startups and developers, it means being able to experiment, iterate, and deploy without the massive capital requirements that previously served as a barrier to entry.
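The arithmetic behind these figures can be checked in a few lines. The sketch below uses the two prices quoted above; the 500-million-token monthly workload and the function names are assumptions chosen purely for illustration.

```python
# Prices quoted in the article, in USD per million tokens.
PRICE_NOV_2022 = 20.00
PRICE_OCT_2024 = 0.07

# Hypothetical workload, assumed only for illustration.
TOKENS_PER_MONTH = 500_000_000


def fold_reduction(old_price: float, new_price: float) -> float:
    """Return how many times cheaper the new price is than the old one."""
    return old_price / new_price


def monthly_cost(tokens_per_month: int, price_per_million: float) -> float:
    """Return the inference bill in USD for a given monthly token volume."""
    return tokens_per_month / 1_000_000 * price_per_million


reduction = fold_reduction(PRICE_NOV_2022, PRICE_OCT_2024)
before = monthly_cost(TOKENS_PER_MONTH, PRICE_NOV_2022)
after = monthly_cost(TOKENS_PER_MONTH, PRICE_OCT_2024)

print(f"Price reduction: about {reduction:.0f}x")
print(f"Monthly bill at the 2022 price: ${before:,.2f}")
print(f"Monthly bill at the 2024 price: ${after:,.2f}")
```

Under these assumptions, the same workload moves from a five-figure monthly bill to a two-figure one, which is the kind of change that turns an experiment from a budget decision into a routine one.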

When Smaller Models Outperform Mega Models

The advantages of efficient AI models extend beyond mere cost savings. In many practical scenarios, smaller models actually deliver superior results due to their specialized nature and operational characteristics.

Real-Time and Latency-Sensitive Applications

For applications requiring immediate responses, such as interactive chatbots, real-time translation, or voice assistants, the inference speed of efficient models provides a decisive advantage. As one analysis notes, "Smaller models process data faster, reducing latency. This is crucial for real-time applications like voice assistants, fraud detection, and recommendation engines". The architectural simplicity of these models allows for faster processing without the computational overhead that plagues their larger counterparts.

In mobile applications particularly, the difference becomes dramatic. "Running large AI models requires expensive GPUs and cloud services," creating inherent latency as data travels to and from cloud servers. Efficient models, by contrast, can often run directly on devices, eliminating network latency entirely and providing near-instant responses even without internet connectivity.

Edge Computing and IoT Deployments

The expansion of edge computing has created ideal deployment environments for efficient AI models. "Devices like smart cameras, wearables, and home automation systems rely on edge AI. Smaller models enable real-time processing without needing cloud connectivity". This capability becomes increasingly valuable as more devices require local intelligence for both performance and privacy reasons.

NVIDIA CEO Jensen Huang highlighted this trend at CES, stating, "The next frontier of AI is physical AI. Imagine a large language model, but instead of processing text, it processes its surroundings. Instead of taking a question as a prompt, it takes a request. Instead of producing text, it produces action tokens". This shift toward physical AI inherently favors efficient models that can operate within the resource constraints of real-world devices.

Specialized Domain Applications

While massive foundation models aim for general knowledge, efficient models excel when focused on specific domains. Through techniques like fine-tuning and transfer learning, compact models can achieve expert-level performance in narrow fields while maintaining their efficiency advantages.

In healthcare, for instance, "medical AI tools, like diagnostic assistants and wearable health monitors, depend on efficient AI models. Smaller models allow quick analysis while maintaining accuracy". Similar advantages appear in legal document analysis, financial forecasting, and technical support, where specialized knowledge matters more than broad general understanding.

The Technical Innovations Driving Efficiency

Several key technological breakthroughs have enabled efficient models to achieve capabilities that recently required massive computational resources.

Model Compression Techniques

Advanced compression methods have dramatically reduced model sizes without proportional performance loss. "Advancements in AI compression, such as knowledge distillation and weight pruning, allow models to retain accuracy while reducing size". Knowledge distillation has proven particularly effective: a smaller "student" model is trained to mimic the outputs of a large "teacher" model, condensing the teacher's knowledge into a more efficient form.
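To make the teacher and student relationship concrete, here is a minimal sketch of a distillation loss in plain Python. It follows one common formulation (temperature-softened teacher probabilities combined with the ordinary cross-entropy on the true label); the article's sources do not specify a formulation, so the function names, the temperature of 2.0, and the mixing weight `alpha` are all assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Blend a soft-target term (match the teacher) with a hard-label term."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # The T^2 factor keeps the soft term on a comparable scale across temperatures.
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    # Standard cross-entropy of the student against the true class.
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * soft + (1 - alpha) * hard


teacher = [3.0, 1.0, 0.2]
print(distillation_loss([2.5, 1.2, 0.3], teacher, true_label=0))
print(distillation_loss([0.2, 1.0, 3.0], teacher, true_label=0))
```

A student whose logits track the teacher's gets a lower loss than one that ranks the classes differently, which is the signal that drives the student toward the teacher's behavior during training.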

The Stanford/UW s1 model exemplifies this trend, achieving sophisticated reasoning capabilities while being "trained for under $50". This demonstrates how distillation and other compression techniques are making advanced AI increasingly accessible.

Architectural Improvements

Beyond mere compression, fundamental architectural innovations have increased the computational efficiency of smaller models. "New architectures (e.g., Mamba), better model orchestration capabilities, and creative ways of combining Generative and Predictive AI" have all contributed to more capable efficient models. These architectural advances often make more efficient use of parameters, allowing smaller models to achieve performance previously requiring orders of magnitude more resources.

The rise of hybrid approaches has been particularly significant. As noted in analysis of 2025 AI trends, "hybrid models are poised to broaden AI accessibility and accelerate its integration across a wider spectrum of applications". These systems seamlessly blend different AI approaches to maximize capabilities while minimizing computational demands.

Hardware and Software Co-Design

Specialized hardware has emerged specifically optimized for running efficient AI models. "At the hardware level, costs have declined by 30% annually, while energy efficiency has improved by 40% each year". This hardware progression complements the software advances, creating a virtuous cycle of improving efficiency.
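Annual rates like these compound quickly. The following sketch projects them over a few years, assuming (as a simplification not stated in the source) that both rates hold constant; the function names are illustrative.

```python
def relative_cost(years: int, annual_decline: float = 0.30) -> float:
    """Hardware cost relative to today after compounding an annual decline."""
    return (1 - annual_decline) ** years


def relative_efficiency(years: int, annual_gain: float = 0.40) -> float:
    """Energy efficiency relative to today after compounding an annual gain."""
    return (1 + annual_gain) ** years


for years in range(1, 6):
    cost = relative_cost(years)
    efficiency = relative_efficiency(years)
    print(f"After {years} year(s): cost x{cost:.3f}, efficiency x{efficiency:.3f}")
```

At these rates, three years is enough for cost to fall to about a third of its starting value (0.7 cubed is 0.343) while efficiency rises to roughly 2.7 times its starting value (1.4 cubed is 2.744).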

For edge deployment particularly, dedicated AI accelerators in smartphones, IoT devices, and embedded systems provide the computational power necessary to run sophisticated models without the power consumption or cost of general-purpose AI hardware. This hardware specialization has been crucial in making on-device AI practical for everyday applications.

Practical Implementation: Choosing the Right Model

Selecting between efficient and mega models requires careful consideration of your specific use case, constraints, and objectives. The following table outlines key decision factors:

| Consideration | Choose Efficient Models When... | Choose Mega Models When... |
|---|---|---|
| Budget | Limited budget, need predictable costs | Substantial computational resources available |
| Latency Requirements | Real-time responses needed | Batch processing acceptable |
| Data Privacy | Sensitive data, on-premise deployment preferred | Data can be processed in cloud |
| Specialization | Domain-specific tasks | General knowledge across multiple domains |
| Deployment Environment | Edge devices, mobile applications | Cloud infrastructure with high-bandwidth connectivity |
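One way to make the table operational is a simple checklist that counts how many rows point toward an efficient model. This is only a sketch: the `UseCase` fields, the `recommend` function, and the majority-vote rule are hypothetical, and a real decision would weigh the factors rather than count them equally.

```python
from dataclasses import astuple, dataclass


@dataclass
class UseCase:
    """One boolean per table row; True means the row favors an efficient model."""
    limited_budget: bool
    needs_real_time: bool
    sensitive_data: bool
    domain_specific: bool
    runs_on_edge: bool


def recommend(use_case: UseCase) -> str:
    """Return 'efficient' when most rows favor a small model, else 'mega'."""
    answers = astuple(use_case)
    votes = sum(answers)  # True counts as 1, False as 0
    return "efficient" if votes * 2 > len(answers) else "mega"


support_bot = UseCase(
    limited_budget=True,
    needs_real_time=True,
    sensitive_data=False,
    domain_specific=True,
    runs_on_edge=False,
)
print(recommend(support_bot))
```

In practice a single row can be decisive on its own: a hard on-device or data-residency requirement may rule out a cloud-hosted mega model regardless of how the other rows score.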

Implementation Strategies for Efficient Models

Successfully deploying efficient AI models requires thoughtful strategy. For startups and developers, "techniques like quantization and pruning can make AI models even smaller without sacrificing accuracy. By refining models, startups can reduce memory usage and improve speed". These optimization techniques can often reduce model sizes by 50-80% with minimal accuracy impact, making deployment feasible on resource-constrained devices.
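The two techniques named above can be sketched on a toy weight list. This is an illustration under simplifying assumptions, not a production recipe: it uses magnitude-based pruning and symmetric per-tensor int8 quantization, the function names are hypothetical, and real toolchains apply these per layer with calibration data and retraining.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute values."""
    count = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:count]
    pruned = list(weights)
    for index in smallest:
        pruned[index] = 0.0
    return pruned


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.8, -0.05, 0.3, 0.01, -1.27, 0.6]
pruned = prune_by_magnitude(weights, sparsity=0.5)
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)

print("pruned:   ", pruned)
print("quantized:", quantized)
print("max error:", max(abs(a - b) for a, b in zip(pruned, restored)))
```

Storing each weight as an 8-bit integer instead of a 32-bit float cuts storage to a quarter, a 75% reduction that falls inside the 50-80% range mentioned above; pruned zeros can shrink the model further if stored in a sparse format.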

Another key strategy involves "running AI on local devices instead of cloud servers which improves speed and data privacy. This is particularly useful for mobile apps and security-focused applications". This approach not only enhances performance but also addresses growing privacy concerns by keeping sensitive data on-device.

The Future of Efficient AI

Current trends suggest that the momentum behind efficient AI models will accelerate through 2025 and beyond. As one analysis puts it, "The future of AI is shifting towards efficiency." Several converging trends support this projection.

Democratization of Advanced AI

As efficient models continue to improve, advanced AI capabilities are becoming accessible to a much wider range of users and organizations. "Open-weight models are also closing the gap with closed models, reducing the performance difference from 8% to just 1.7% on some benchmarks in a single year". This rapidly narrowing performance gap, combined with the dramatically lower costs, is democratizing access to technology that was recently available only to well-funded organizations.

Bart Selman, a computer scientist at Cornell University, predicts that "we'll see some individual teams with five people, two people, that come up with some new algorithmic ideas that will shake things up. Which is all good. We don't want the world just to be run by some big companies". This democratization could accelerate innovation as more diverse perspectives contribute to AI development.

Sustainability Imperatives

The environmental impact of massive AI models has drawn increasing scrutiny. "Big AI models consume enormous amounts of power. Training a single large model can use as much energy as multiple households consume in a year. This is unsustainable and raises concerns about AI's environmental impact". As environmental, social, and governance (ESG) considerations become more important for organizations, the energy efficiency of smaller models provides a significant advantage.

With the AI Index reporting that "the energy used to train a typical leading AI model is currently doubling annually", the sustainability argument for efficient models becomes increasingly compelling. Organizations facing pressure to reduce their carbon footprint may find efficient models essential to their AI strategy.

Conclusion: The Right Tool for the Right Task

The evolution of efficient AI models doesn't render massive foundation models obsolete. Rather, it creates a more nuanced landscape where different tools serve different purposes. Mega models continue to excel at broad reasoning tasks requiring extensive world knowledge, while efficient models dominate in specialized, latency-sensitive, and resource-constrained environments.

The most sophisticated AI strategies in 2025 will likely employ a portfolio approach, selecting the appropriate model type for each specific application. As the AI Index notes, "the frontier is increasingly competitive—and increasingly crowded", providing organizations with more choices than ever before.

For most practical applications, particularly those requiring deployment at scale, efficient models offer compelling advantages that make them the default choice rather than a compromise. As the technology continues to evolve, the trend toward efficiency likely represents the future of most real-world AI deployment, suggesting that for most applications, smaller is indeed better.

Related Reading

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

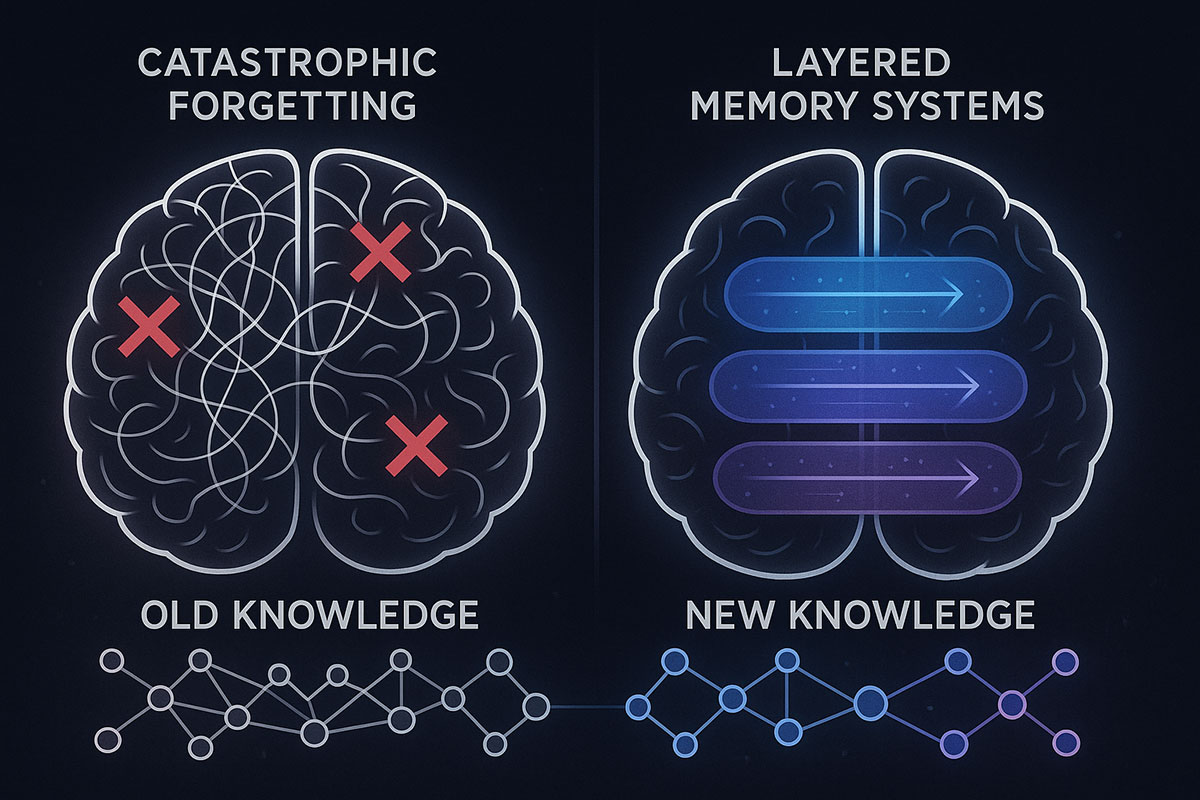

Google's HOPE Model: AI That Finally Learns Continuously (Catastrophic Forgetting Solved)

Google just unveiled HOPE, a self-modifying AI architecture that solves catastrophic forgetting—the fundamental problem preventing AI from learning continuously. For the first time, AI can absorb new knowledge without erasing what it already knows. Here's why this changes everything.

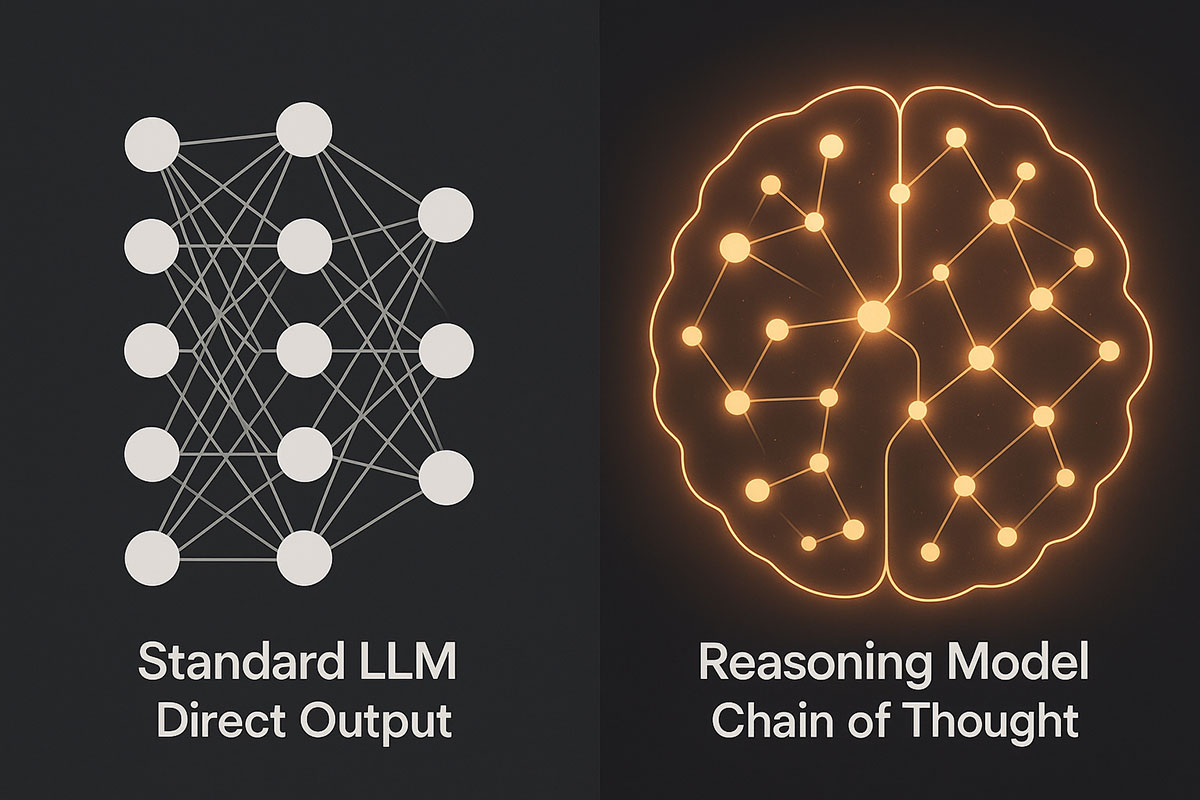

AI Reasoning Models Explained: OpenAI O1 vs DeepSeek V3.2 - The Next Leap Beyond Standard LLMs (November 2025)

Reasoning models represent a fundamental shift in AI architecture. Unlike standard language models that generate answers instantly, these systems deliberately "think" through problems step-by-step, achieving breakthrough performance in mathematics, coding, and scientific reasoning. Discover how O1 and DeepSeek V3.2 are redefining what AI can accomplish.

Why Smaller AI Models (SLMs) Will Dominate Over Large Language Models in 2025: The On-Device AI Revolution

The AI landscape is shifting from "bigger is better" to "right-sized is smarter." Small Language Models (SLMs) are delivering superior business outcomes compared to massive LLMs through dramatic cost reductions, faster inference, on-device privacy, and domain-specific accuracy. This 2025 guide explores why SLMs represent the future of enterprise AI.