Baidu ERNIE Model BEATS GPT-5 & Gemini: The Chinese AI Breakthrough Nobody Is Covering (November 2025)

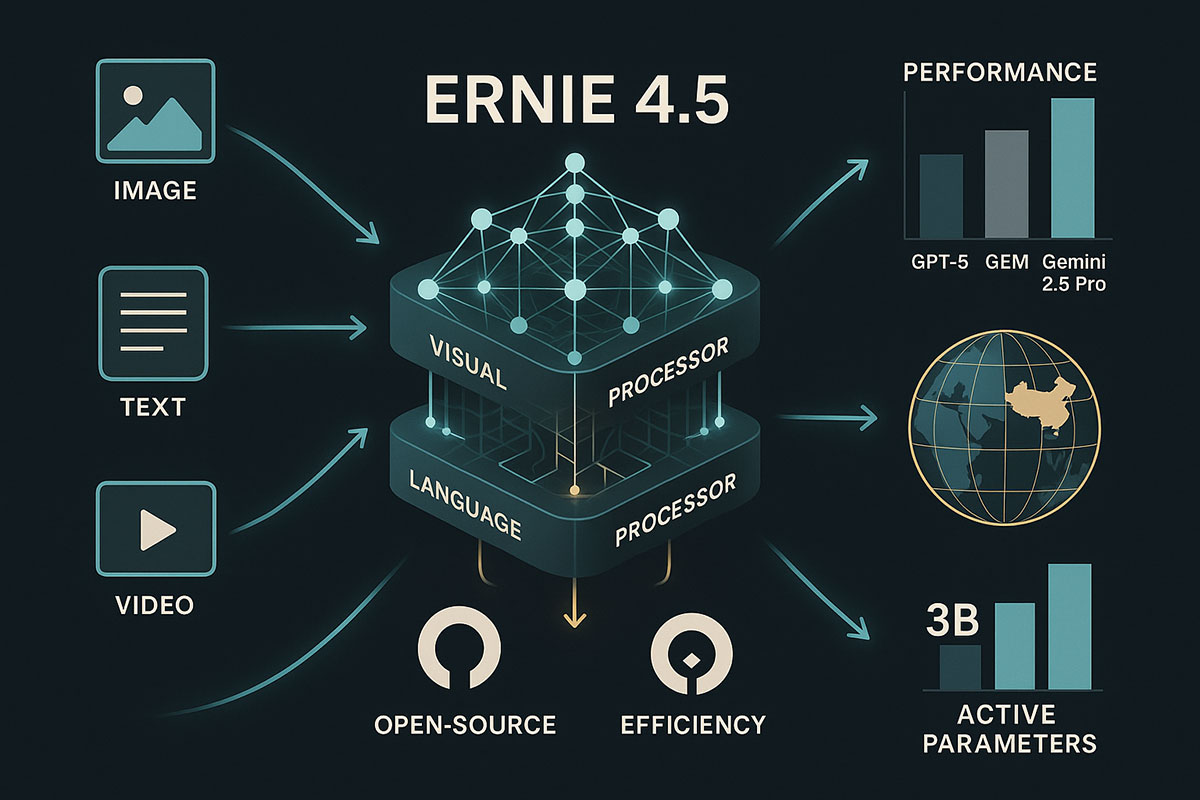

While Western AI companies focus on scaling, China's Baidu has quietly released ERNIE 4.5, a lightweight multimodal model that beats GPT-5 High and Gemini 2.5 Pro on 10 of 15 benchmarks. Because the model is open-source and free for commercial use, the release has massive implications for enterprise AI and the US-China technology race.

TrendFlash

The Breakthrough Nobody Saw Coming

In mid-November 2025, Baidu dropped a bombshell that barely registered in Western tech media: ERNIE 4.5-VL-28B-A3B, a lightweight multimodal AI model that outperforms OpenAI's GPT-5 High and Google's Gemini 2.5 Pro on a series of rigorous visual reasoning benchmarks. The kicker? It's open-source, free for commercial use, and activates only about a tenth of its 28 billion parameters for each token it processes, which makes it far cheaper to run.

While the news media obsessed over OpenAI's latest announcements and Anthropic's funding rounds, one of the world's largest AI companies executed a quiet but devastating leap forward. The implications ripple across multiple dimensions: enterprise AI adoption, the global competition for technological supremacy, the direction of open-source AI development, and the fundamental question of whether the AI arms race is still dominated by brute-force scaling or whether smarter architecture design can deliver superior results with fewer resources.

This is the story Western AI analysts have largely ignored.

Understanding ERNIE 4.5: A Different Approach

To appreciate ERNIE 4.5's significance, you first need to understand what makes it fundamentally different from GPT-4, Gemini, and other frontier models.

Most cutting-edge AI models today are autoregressive large language models—they predict text one token at a time, optimized for language understanding and generation. Vision capabilities, when added, often feel bolted-on, with images converted to token sequences and processed through text-oriented architectures.

ERNIE 4.5 takes a different architectural path. It's a true multimodal model from the ground up, designed with a sophisticated Mixture-of-Experts (MoE) structure that handles text, images, and their interactions as native information modalities. The architecture includes:

Vision Transformer (ViT) Encoder: Images are processed through a specialized visual encoder that preserves spatial relationships and visual semantics, rather than converting images to text tokens.

Modality-Isolated Routing: Different parts of the neural network are optimized for text processing, visual processing, and cross-modal reasoning. A routing mechanism ensures that information flows through the appropriate pathways without one modality degrading another's performance.

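Heterogeneous Mixture-of-Experts: The model has 28 billion total parameters, but only 3 billion are active per token during inference. Each token therefore costs roughly one-tenth the compute of a dense model of the same total size (3/28 ≈ 11%), while Baidu reports performance parity with, or superiority over, frontier systems. All 28 billion parameters must still be held in memory, however: the savings are in compute per token, not in storage.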
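To make the efficiency claim concrete, here is a back-of-the-envelope sketch. It assumes the common rule of thumb that a transformer forward pass costs about 2 FLOPs per active parameter per token (an approximation that ignores attention cost over long contexts) and compares ERNIE's 3B active parameters with a hypothetical dense model of the same 28B total size.

```python
# Rough per-token compute: MoE model vs. a dense model of equal total size.
# Assumption: ~2 FLOPs per active parameter per token (ignores attention cost).

TOTAL_PARAMS = 28e9   # total parameters (from the article)
ACTIVE_PARAMS = 3e9   # parameters activated per token (from the article)


def flops_per_token(active_params: float) -> float:
    """Approximate forward-pass FLOPs per token: ~2 per active parameter."""
    return 2.0 * active_params


def moe_savings(total: float, active: float) -> dict:
    """Compare an MoE model's per-token compute with a dense model of the same size."""
    dense = flops_per_token(total)
    moe = flops_per_token(active)
    return {
        "active_fraction": active / total,
        "dense_gflops_per_token": dense / 1e9,
        "moe_gflops_per_token": moe / 1e9,
        "compute_ratio_vs_dense": dense / moe,
    }


if __name__ == "__main__":
    for key, value in moe_savings(TOTAL_PARAMS, ACTIVE_PARAMS).items():
        print(f"{key}: {value:.3f}")
```

Running it reports an active fraction of about 0.107 and a compute ratio of roughly 9.3x against the dense baseline, which is where "roughly one-tenth" comes from. Memory is a different matter, since every expert must stay loaded.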

The result is a system that achieves near-flagship model capability while consuming a fraction of the resources. This isn't just an engineering optimization—it's a fundamentally more efficient way to build AI systems.

The Benchmark Results: ERNIE's Visual Dominance

When Baidu released ERNIE 4.5, they backed their claims with comprehensive benchmarking across 15 different evaluation datasets. The results paint a picture of clear superiority, especially on visual reasoning tasks:

Chart Understanding & Data Analysis:

- ChartQA: ERNIE scores 87.1 vs Gemini 2.5 Pro's 76.3 and GPT-5 High's 78.2. This is a decisive advantage: 8.9 points ahead of GPT-5 High and 10.8 points ahead of Gemini 2.5 Pro.

- DocVQA: ERNIE reaches 95.3, highest among all three models, demonstrating superior ability to extract information from dense documents containing both text and visual elements.

Complex Mathematical & Spatial Reasoning:

- MathVista: ERNIE scores 82.5, essentially tied with Gemini 2.5 Pro (82.7, just 0.2 points higher) and ahead of GPT-5 High (81.3). This benchmark requires understanding mathematical notation, spatial relationships, and problem-solving logic.

- Average Score Across Benchmarks: ERNIE 73.1 vs Gemini 70.3 vs GPT-5 69.4.

Specialized Visual Tasks:

- VLMs Are Blind (basic visual perception): ERNIE 77.3 vs Gemini 76.5 vs GPT-5 High 69.6

- RealWorldQA (real-world visual understanding): ERNIE leads across multiple variants of this benchmark

- CV-Bench (computer vision reasoning): ERNIE demonstrates consistent superiority
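For readers who want to check the margins, this short script recomputes ERNIE's lead (or deficit) against the best competing model from the scores listed above. Only benchmarks with numbers for all three models are included; DocVQA, RealWorldQA, and CV-Bench are left out because no competitor scores are given here.

```python
# Scores as reported in this article (higher is better).
SCORES = {
    "ChartQA": {"ERNIE": 87.1, "Gemini 2.5 Pro": 76.3, "GPT-5 High": 78.2},
    "MathVista": {"ERNIE": 82.5, "Gemini 2.5 Pro": 82.7, "GPT-5 High": 81.3},
    "VLMs Are Blind": {"ERNIE": 77.3, "Gemini 2.5 Pro": 76.5, "GPT-5 High": 69.6},
    "Average": {"ERNIE": 73.1, "Gemini 2.5 Pro": 70.3, "GPT-5 High": 69.4},
}


def margin_over_best_rival(scores: dict, model: str = "ERNIE") -> float:
    """Model's score minus the best competing score (negative means behind)."""
    rivals = [s for name, s in scores.items() if name != model]
    return round(scores[model] - max(rivals), 1)


def summarize(table: dict, model: str = "ERNIE") -> dict:
    """Margin over the best rival for every benchmark in the table."""
    return {bench: margin_over_best_rival(s, model) for bench, s in table.items()}


if __name__ == "__main__":
    for bench, margin in summarize(SCORES).items():
        status = "leads" if margin > 0 else ("ties" if margin == 0 else "trails")
        print(f"{bench:15s} ERNIE {status} best rival by {abs(margin):.1f} points")
```

The output shows ERNIE ahead on ChartQA (8.9 points), VLMs Are Blind (0.8), and the average (2.8), and 0.2 points behind Gemini 2.5 Pro on MathVista.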

What's particularly striking is the consistency of ERNIE's performance. It's not dominating one niche benchmark while underperforming elsewhere. Across a diverse set of visual reasoning tasks, ERNIE either leads or stays within a fraction of a point of the leader.

The "A3B" in the model's name refers to its activated parameters: roughly 3 billion of the 28 billion total are active per token. The November release is the "Thinking" variant (ERNIE-4.5-VL-28B-A3B-Thinking), which can operate in two modes: a standard mode for fast inference and a "thinking mode," similar to OpenAI's o1, that allocates additional computation to complex reasoning tasks. In thinking mode, ERNIE's performance on mathematical and logical reasoning tasks increases further.
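Open MoE model names usually encode their size. The parser below is a hypothetical helper that assumes the pattern seen in Baidu's release names: "<N>B" for total parameters, "A<M>B" for activated parameters, an optional "VL" for vision-language, and an optional "Thinking" suffix for the reasoning variant. It illustrates the convention and is not an official Baidu specification.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical pattern, e.g. "ERNIE-4.5-VL-28B-A3B-Thinking" or "ERNIE-4.5-300B-A47B".
NAME_PATTERN = re.compile(
    r"^(?P<family>[A-Za-z]+)-(?P<version>[\d.]+)"
    r"(?P<vl>-VL)?-(?P<total>\d+)B-A(?P<active>\d+)B"
    r"(?P<thinking>-Thinking)?$"
)


@dataclass
class ModelName:
    family: str
    version: str
    vision_language: bool
    total_params_b: int    # total parameters, in billions
    active_params_b: int   # activated parameters per token, in billions
    thinking: bool


def parse_model_name(name: str) -> Optional[ModelName]:
    """Return the parsed fields, or None if the name doesn't follow the pattern."""
    match = NAME_PATTERN.match(name)
    if match is None:
        return None
    return ModelName(
        family=match["family"],
        version=match["version"],
        vision_language=match["vl"] is not None,
        total_params_b=int(match["total"]),
        active_params_b=int(match["active"]),
        thinking=match["thinking"] is not None,
    )


if __name__ == "__main__":
    print(parse_model_name("ERNIE-4.5-VL-28B-A3B-Thinking"))
```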

Why Visual Understanding Matters: The Enterprise Angle

To understand why ERNIE's visual superiority matters beyond benchmark bragging rights, consider what enterprises actually do with AI:

Document & Process Understanding: Corporate systems are drowning in information: engineering schematics, regulatory documents, architectural plans, medical imaging, factory floor video feeds, logistics dashboards. Unlike pipelines that first convert images to text (for example, OCR feeding a text-only model) and lose spatial, hierarchical, and visual semantic information along the way, ERNIE processes visual information natively. This is genuinely valuable.

Quality Control & Inspection: Manufacturing facilities could deploy ERNIE for visual inspection tasks—detecting defects in products, identifying assembly errors, monitoring equipment condition. The benchmark for industrial applications isn't "can it write poetry" but "can it analyze a photograph of a manufacturing line and identify non-conforming products?" Here, ERNIE's visual reasoning directly translates to business value.

Technical Documentation Analysis: Engineering teams spend hours extracting information from schematics, circuit diagrams, and architectural plans. ERNIE's ability to solve circuit problems by applying Ohm's and Kirchhoff's laws demonstrates it can handle technical domain reasoning embedded in visual formats.

Video Understanding: ERNIE can process video natively and extract information at multiple time scales—finding specific scenes, mapping timestamps to discussed topics, and understanding temporal relationships. Enterprises sit on massive video archives (training sessions, security footage, manufacturing process videos) that are essentially unsearchable with current text-focused models. ERNIE unlocks these libraries.

Healthcare Imaging: While regulatory approval processes are complex, ERNIE's visual reasoning could assist in analyzing medical images, identifying patterns in radiological data, and supporting diagnostic workflows. Early benchmarking suggests strong capability, though clinical deployment requires additional validation.

In each case, ERNIE's native multimodal architecture solves problems that bolted-on vision systems struggle with.

The Open-Source & Commercial Advantage

Here's where ERNIE 4.5 becomes genuinely disruptive: it's released under the Apache 2.0 license, permitting unrestricted commercial use.

This is significant because both GPT-4 and Gemini operate under closed-source commercial licenses. Organizations building products with these models pay per-token API fees to OpenAI and Google. This creates an ongoing revenue stream for the API providers but also creates a strategic vulnerability for customers: if pricing increases, switching costs are high. Integration is deep. Dependence grows.

ERNIE 4.5, by contrast, can be deployed on-premises. Organizations can fine-tune it on proprietary data. They can optimize it for their specific use cases. They're not locked into a provider's pricing model or forced to send sensitive data through third-party APIs. For enterprises dealing with regulated information (financial data, healthcare records, trade secrets), this is massively appealing.

Baidu provides ERNIEKit, an industrial-grade toolkit enabling organizations to:

- Fine-tune ERNIE on custom datasets

- Perform Supervised Fine-Tuning (SFT) for specific tasks

- Apply Low-Rank Adaptation (LoRA) for parameter-efficient customization

- Execute Direct Preference Optimization (DPO) for behavior alignment

- Quantize models for deployment efficiency

- Deploy across multiple inference frameworks (Transformers, vLLM, FastDeploy)
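LoRA is the easiest of these techniques to picture. The sketch below shows the standard LoRA formulation in plain Python, not ERNIEKit's actual API: the pretrained weight W stays frozen, and only two small matrices A and B of rank r are trained, so the layer computes W x + (alpha / r) * B(A x). The 4096 x 4096 layer size is a hypothetical example, not ERNIE's real dimensions.

```python
import random


def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Trainable parameters: full fine-tuning of W vs. LoRA's A and B."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)   # B is d_out x r, A is r x d_in
    return full, lora


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha: float, rank: int):
    """y = W x + (alpha / r) * B (A x). W is frozen; only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


if __name__ == "__main__":
    full, lora = lora_param_counts(4096, 4096, rank=8)  # hypothetical layer size
    print(f"full: {full:,}  LoRA: {lora:,}  ({100 * lora / full:.2f}%)")

    # B starts at zero, so before training the adapted layer equals the base layer.
    d, r = 4, 2
    W = [[random.random() for _ in range(d)] for _ in range(d)]
    A = [[random.gauss(0.0, 0.01) for _ in range(d)] for _ in range(r)]
    B = [[0.0] * r for _ in range(d)]
    x = [1.0, 2.0, 3.0, 4.0]
    print(lora_forward(W, A, B, x, alpha=16, rank=r) == matvec(W, x))  # True
```

For that hypothetical layer, rank-8 LoRA trains 65,536 parameters instead of 16,777,216, about 0.39% of the full matrix. Initializing B to zero means fine-tuning starts from exactly the base model's behavior.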

This level of customization goes beyond what closed-source commercial models allow. OpenAI and Google offer hosted fine-tuning for some models, but only on their platforms and terms, and the weights never leave their servers. ERNIE users can adapt the model to their exact requirements.

The Efficiency Revolution: 3B Active Parameters

Perhaps most compelling is ERNIE's computational efficiency. Dense models activate all of their parameters for every token. ERNIE's Mixture-of-Experts architecture activates only 3 billion of its 28 billion parameters per token.

This creates cascading advantages:

Deployment Cost Reduction: An organization can deploy ERNIE 4.5 on significantly cheaper hardware than GPT-5 or Gemini 2.5 require. A single NVIDIA H100 GPU (80GB memory) can host ERNIE, whereas similarly capable closed-source models are generally accessible only through cloud API calls.
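A quick way to sanity-check the single-H100 claim is to estimate weight memory from the total parameter count. The sketch below assumes 2 bytes per parameter in bf16 and proportionally less when quantized, and it reserves a hypothetical 20% of GPU memory for the KV cache and activations. Note that an MoE model must keep all 28 billion parameters resident even though only 3 billion are active per token.

```python
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5, "int2": 0.25}


def weight_memory_gb(total_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return total_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(total_params: float, dtype: str,
                gpu_gb: float = 80.0, headroom: float = 0.2) -> bool:
    """True if the weights fit while reserving `headroom` (a hypothetical 20%
    by default) of GPU memory for the KV cache and activations."""
    return weight_memory_gb(total_params, dtype) <= gpu_gb * (1 - headroom)


if __name__ == "__main__":
    TOTAL_PARAMS = 28e9  # all experts must be resident, not just the 3B active
    for dtype in BYTES_PER_PARAM:
        gb = weight_memory_gb(TOTAL_PARAMS, dtype)
        print(f"{dtype:>5}: {gb:5.1f} GB  fits on one 80GB GPU: "
              f"{fits_on_gpu(TOTAL_PARAMS, dtype)}")
```

In bf16 the weights come to about 56 GB, which fits on an 80GB card with room left over; 4-bit quantization would shrink them to about 14 GB.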

Inference Speed: Fewer active parameters mean less computation per token and therefore faster processing. Illustratively, where a customer might wait 5 seconds for a GPT-4 response to a complex visual question, ERNIE might return an answer in 2-3 seconds; actual latency depends on hardware, batch size, and output length. This matters for real-time applications: customer service, robotic systems, interactive analysis tools.

Scalability: If an enterprise needs to process thousands of documents or images daily, ERNIE's efficiency profile makes large-scale deployment economically feasible in-house, whereas relying on external APIs would become prohibitively expensive.
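The scalability argument is ultimately arithmetic: API fees grow linearly with volume, while a self-hosted GPU is a roughly fixed monthly cost. The sketch below computes both. Every price and throughput figure in it is a hypothetical placeholder, not a real quote for ERNIE, GPT-5, or Gemini, so substitute your own numbers.

```python
import math
from dataclasses import dataclass

SECONDS_PER_MONTH = 30 * 24 * 3600  # 2,592,000


@dataclass
class CostInputs:
    tokens_per_month: float        # total tokens processed per month
    api_price_per_million: float   # hypothetical blended API price, USD per 1M tokens
    gpu_monthly_cost: float        # hypothetical amortized GPU + power cost, USD/month
    gpu_tokens_per_second: float   # hypothetical sustained self-hosted throughput


def api_cost(c: CostInputs) -> float:
    """Monthly API bill: scales linearly with volume."""
    return c.tokens_per_month / 1e6 * c.api_price_per_million


def self_hosted_cost(c: CostInputs) -> float:
    """Monthly self-hosting cost: whole GPUs needed to sustain the volume."""
    capacity_per_gpu = c.gpu_tokens_per_second * SECONDS_PER_MONTH
    gpus = max(1, math.ceil(c.tokens_per_month / capacity_per_gpu))
    return gpus * c.gpu_monthly_cost


def break_even_tokens(c: CostInputs) -> float:
    """Monthly volume at which one GPU costs the same as the API
    (ignores whether one GPU can actually sustain that volume)."""
    return c.gpu_monthly_cost / c.api_price_per_million * 1e6


if __name__ == "__main__":
    # All numbers are placeholders for illustration only.
    inputs = CostInputs(tokens_per_month=6e9, api_price_per_million=5.0,
                        gpu_monthly_cost=3000.0, gpu_tokens_per_second=1000.0)
    print(f"API:         ${api_cost(inputs):,.0f}/month")
    print(f"Self-hosted: ${self_hosted_cost(inputs):,.0f}/month")
    print(f"Break-even:  {break_even_tokens(inputs):,.0f} tokens/month")
```

The design choice worth noting is the `math.ceil`: self-hosted capacity comes in whole GPUs, so cost rises in steps, while the API bill rises smoothly with every token.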

Environmental Impact: Fewer active parameters means lower energy consumption. At scale, this matters for carbon footprint and operational sustainability metrics—increasingly important for large enterprises with ESG commitments.

This efficiency matters because it levels the playing field. Smaller enterprises and startups can't compete with OpenAI or Google for GPU access. But they can run ERNIE on a single data-center GPU rather than a cluster. This democratizes access to sophisticated multimodal AI.

The Competitive Landscape: US vs. China

ERNIE 4.5's release arrives at a consequential moment in the US-China technology race. For the past two years, the dominant Western narrative has been simple: OpenAI dominates. Google has Gemini and DeepMind. Anthropic is well-funded. China, this story goes, is perpetually 12-18 months behind.

ERNIE 4.5 complicates this narrative. It's not that Baidu is catching up to OpenAI. It's that Baidu is taking a different path—optimizing for different metrics than the Western giants pursue.

Western companies have bet heavily on frontier capability scaling: building the biggest, most capable models regardless of resource cost. OpenAI's o1 is estimated to have cost tens of millions of dollars to train, and Gemini 2.5 Pro is widely assumed to be enormous, though Google has not disclosed its size. The assumption is that scale and capability are inexorably linked.

Baidu's approach suggests an alternative: smarter architecture design can deliver competitive capability at a fraction of the resource cost. ERNIE's Mixture-of-Experts approach with modality-isolated routing is genuinely innovative, not simply a scale play. This is research-backed optimization, not just compute-backed brute force.

The geopolitical implications are substantial:

Technology Independence: China's tech sector no longer needs to rely entirely on US-based APIs or models. ERNIE enables Chinese enterprises to build products without API dependencies on OpenAI, Google, or Anthropic. This reduces US technological leverage over Chinese companies.

Export & Influence: An open-source, commercially-usable ERNIE could become the foundation for AI systems across Asia, Africa, and Latin America—regions where Chinese technological influence is growing. Enterprises in these regions could build ERNIE-based AI products rather than depending on US companies.

Talent & Innovation: Breakthrough results like ERNIE 4.5 signal that innovation in AI is globally distributed, not concentrated in Silicon Valley. This matters for attracting talent and maintaining credibility in international AI research.

Competitive Pressure: For OpenAI, Google, and Anthropic, ERNIE 4.5 is a wake-up call. The assumption that bigger is always better, that only massive resource-intensive models can be competitive, is no longer defensible. Smarter architecture matters.

This doesn't mean China is "ahead" overall—the Western companies still command more total compute, funding, and talent. But it means the competition is more multidimensional than media narratives suggest. It's not just about who builds the biggest model but who builds systems that solve real problems efficiently.

Deployment & Use Cases: Where ERNIE Excels

For organizations considering ERNIE 4.5, several use cases stand out as particularly promising:

E-Commerce & Retail: Visual search, product analysis from images, inventory management from shelf photography, quality assurance automation. Retailers could deploy ERNIE to analyze product photos, extract details, categorize items, and flag potential issues without relying on external APIs.

Manufacturing & Engineering: Quality inspection, defect detection, technical documentation analysis, process optimization. Factories could analyze production line imagery in real-time, identifying issues before products leave the line.

Financial Services: Document processing, compliance analysis, fraud detection from visual patterns, invoice and receipt analysis. Banks could deploy ERNIE to understand complex financial documents, regulatory paperwork, and identify anomalies.

Healthcare (with appropriate regulatory compliance): Medical image analysis assistance, diagnostic support, pathology report generation. While clinical deployment requires regulatory approval, research institutions could experiment with ERNIE for radiology and pathology tasks.

Legal & Document Services: Contract analysis, evidence review, document categorization. Law firms could process document archives, extracting key information and identifying relevant precedents.

Government & Defense: Satellite image analysis, document classification, compliance monitoring. Government agencies could deploy ERNIE for imagery intelligence and documentation processing.

Logistics & Supply Chain: Shipment tracking from photography, warehouse management, damage assessment. Logistics companies could analyze photos of shipments and warehouses to optimize operations.

The common thread: all these applications involve processing visual information for business-critical decision-making. In each case, ERNIE's combination of visual reasoning capability, open-source availability, and computational efficiency directly translates to enterprise value.

The Technical Innovation: What Makes ERNIE Smart

ERNIE 4.5's success isn't accidental. The model incorporates several genuinely innovative technical approaches:

Heterogeneous MoE Pre-Training: Rather than sending every token through one shared pool of experts, Baidu designed a heterogeneous Mixture-of-Experts in which different expert networks specialize in different tasks (text processing, visual understanding, cross-modal reasoning). During pre-training, the model learns which experts to route each token through, essentially learning to specialize internally.
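The routing idea can be sketched with generic top-k gating, the mechanism most MoE language models use: a router scores every expert for each token, only the k best-scoring experts run, and their outputs are blended using renormalized softmax weights. This is a simplified illustration, not Baidu's implementation; the toy experts, the router scores, and k are arbitrary.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Pick the k highest-scoring experts; renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(token, experts, router_logits, k=2):
    """Run only the selected experts and blend their outputs; the others stay idle."""
    out = [0.0] * len(token)
    for idx, weight in top_k_route(router_logits, k):
        expert_out = experts[idx](token)
        out = [o + weight * v for o, v in zip(out, expert_out)]
    return out


if __name__ == "__main__":
    # Four toy "experts" that just scale the input; real experts are small MLPs.
    experts = [lambda t, s=s: [s * v for v in t] for s in (1.0, 2.0, 3.0, 4.0)]
    logits = [0.1, 2.0, -1.0, 1.5]   # hypothetical router scores for one token
    print(top_k_route(logits))       # experts 1 and 3 are selected
    print(moe_forward([1.0, -1.0], experts, logits))
```

Because only k experts execute per token, compute tracks the active parameters while capacity tracks the total, which is exactly the 3B-of-28B trade-off described earlier.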

Modality-Isolated Routing: A critical challenge in multimodal models is negative transfer, where training for one modality degrades performance in another. ERNIE addresses this through routing mechanisms that keep visual information from interfering with text processing and vice versa. The router orthogonal loss and multimodal token-balanced loss used during training optimize for this balance.
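Modality isolation can be layered on top of ordinary top-k routing. In the simplified sketch below, text tokens may only be routed among text experts, image tokens only among vision experts, and a shared expert processes every token. The expert counts and the shared-expert layout are hypothetical, and the training losses mentioned above (router orthogonal loss, token-balanced loss) are not modeled.

```python
# Hypothetical expert layout for one MoE layer.
TEXT_EXPERTS = [0, 1, 2, 3]
VISION_EXPERTS = [4, 5, 6, 7]
SHARED_EXPERTS = [8]


def allowed_experts(modality: str) -> list[int]:
    """Experts a token of this modality is permitted to reach."""
    if modality == "text":
        return TEXT_EXPERTS
    if modality == "image":
        return VISION_EXPERTS
    raise ValueError(f"unknown modality: {modality!r}")


def route(modality: str, router_logits: list[float], k: int = 2) -> list[int]:
    """Top-k among the modality's own experts, plus the always-on shared experts.
    Scores for the other modality's experts are ignored, so in a trainable version
    image tokens would never update text experts, and vice versa."""
    candidates = allowed_experts(modality)
    top = sorted(candidates, key=lambda i: router_logits[i], reverse=True)[:k]
    return top + SHARED_EXPERTS


if __name__ == "__main__":
    logits = [0.0, 1.0, 2.0, 3.0, 9.0, 8.0, 7.0, 6.0, 5.0]  # hypothetical scores
    print(route("text", logits))    # [3, 2, 8]: vision experts excluded despite higher scores
    print(route("image", logits))   # [4, 5, 8]
```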

Efficient Inference Architecture: ERNIE uses multi-expert parallel collaboration during inference, allowing multiple expert networks to contribute simultaneously while remaining computationally tractable. Convolutional code quantization enables aggressive model compression (4-bit or 2-bit quantization) that Baidu describes as near-lossless.
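Baidu's convolutional code quantization is a specialized scheme, so the sketch below swaps in the simplest baseline instead: per-tensor symmetric round-to-nearest quantization. It shows what 4-bit or 2-bit storage means mechanically (each weight becomes a small signed integer plus one shared scale), but it is not Baidu's method, and plain rounding like this typically loses more accuracy at 2 bits than more sophisticated schemes do.

```python
def quantize_symmetric(weights: list[float], bits: int = 4) -> tuple[list[int], float]:
    """Map floats to signed integers in [-qmax, qmax] with one shared scale."""
    if bits < 2:
        raise ValueError("need at least 2 bits for signed symmetric quantization")
    qmax = 2 ** (bits - 1) - 1                 # 7 for 4-bit, 1 for 2-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; each error is at most about scale / 2."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.82, -0.41, 0.05, -0.77, 0.33]       # hypothetical weight values
    for bits in (4, 2):
        q, s = quantize_symmetric(w, bits)
        err = max(abs(a - b) for a, b in zip(w, dequantize(q, s)))
        print(f"{bits}-bit: q={q} scale={s:.4f} max_error={err:.4f}")
```

Storing 4-bit integers instead of 16-bit floats cuts weight memory by roughly 4x, ignoring the small overhead of the scale.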

Vision Transformer Integration: Rather than converting images into text, ERNIE encodes them natively with a Vision Transformer (ViT) encoder. Images are divided into patches, each patch is embedded as a visual token, and position information plus self-attention across patches preserve spatial relationships. This keeps visual semantic information intact rather than reducing it to language approximations.
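The patching step is simple to show. This pure-Python sketch splits an image into non-overlapping square patches, flattens each one into a vector (which a ViT would then project into a visual token embedding), and records each patch's grid position so position embeddings can preserve spatial layout. The 14-pixel patch size is a common ViT choice assumed here, not a confirmed detail of ERNIE's encoder.

```python
def patchify(image, patch: int):
    """Split an H x W x C image (nested lists) into non-overlapping patch x patch
    tiles. Returns (patches, positions): each patch flattened row by row into a
    vector of length patch * patch * C, plus its (row, col) on the patch grid."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    patches, positions = [], []
    for r in range(0, h, patch):
        for c in range(0, w, patch):
            vec = [
                value
                for y in range(r, r + patch)
                for x in range(c, c + patch)
                for value in image[y][x]
            ]
            patches.append(vec)
            positions.append((r // patch, c // patch))
    return patches, positions


if __name__ == "__main__":
    # A blank 224 x 224 RGB image; 14-pixel patches are an assumed, common ViT choice.
    image = [[[0, 0, 0] for _ in range(224)] for _ in range(224)]
    patches, positions = patchify(image, patch=14)
    print(len(patches), len(patches[0]), positions[:3])
```

For a 224 x 224 RGB image with 14-pixel patches, this yields 256 patches of 588 values each (14 x 14 x 3). In a real ViT, self-attention then lets every patch attend to every other, so patches are not processed in isolation.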

These aren't minor tweaks. They represent genuine research contributions to multimodal AI architecture. Published papers backing these approaches will likely influence how the broader research community approaches multimodal systems.

Limitations & Deployment Challenges

To be clear-eyed: ERNIE 4.5 isn't a silver bullet, and deployment isn't frictionless.

Hardware Requirements: While more efficient than massive frontier models, ERNIE still demands significant hardware. Single-card deployment requires 80GB GPU memory—placing it out of reach for small organizations or resource-constrained environments. You need enterprise-grade AI infrastructure to run ERNIE meaningfully.

Fine-Tuning Complexity: While ERNIEKit provides fine-tuning tools, optimizing ERNIE for specific use cases requires data science expertise. Organizations can't just upload their documents and expect magic. Proper dataset curation, labeling, and validation are necessary.

Language Support: ERNIE's multilingual capability is strong but not universal. If your use case involves less common languages or specialized domain vocabularies, performance may degrade. Western models have similar limitations, but the point is worth noting.

Regulatory & Trust: Deploying a Chinese-origin model raises regulatory questions in some sectors. US defense contractors might face restrictions on using Baidu technology. Regulatory frameworks around AI sourcing are evolving and create uncertainty.

Benchmark vs. Production: Benchmarks measure narrow capabilities. Real-world performance in production deployment often differs from lab results. Organizations implementing ERNIE should expect to validate performance on their specific datasets and use cases before full-scale deployment.

Competitive Dynamics: The AI landscape moves fast. By 2026, new models will emerge. ERNIE's competitive advantage in benchmarks could narrow. Organizations should view ERNIE as a current-generation solution, not a long-term lock-in.

The Broader Implications for AI Development

ERNIE 4.5's success illustrates several important realities reshaping the AI landscape:

Efficiency Matters as Much as Scale: The assumption that "more parameters = better models" is increasingly questioned. Smarter architecture design delivers better results per unit of resource. This opens doors for smaller organizations and resource-constrained regions to compete with massive labs.

Multimodal > Unimodal: The future of enterprise AI increasingly involves processing multiple data types simultaneously—images, text, video, sensor data, structured data. Models optimized specifically for multimodal understanding will outcompete text-only systems for real-world problems.

Open Source Creates Leverage: Commercial closed-source models still dominate in raw capability, but open-source alternatives are becoming viable for enterprise deployment. This shifts bargaining power away from API providers and toward customers.

Geographic Distribution Matters: Innovation in AI is no longer concentrated in Silicon Valley. Chinese, European, and other international research teams are making significant contributions. The technology race is genuinely global.

Different Approaches Can Coexist: OpenAI's scaling approach, Anthropic's alignment focus, and Baidu's efficiency optimization represent different but potentially complementary research directions. Cognitive diversity in how teams approach AI development likely accelerates overall progress.

What's Next: ERNIE's Trajectory

Baidu will likely iterate rapidly on ERNIE 4.5. Expect:

Larger Models: Baidu has already open-sourced larger members of the ERNIE 4.5 family, including ERNIE-4.5-300B-A47B and ERNIE-4.5-VL-424B-A47B, which offer additional capability for organizations with greater computational resources. Further scaled-up iterations are likely.

Specialized Variants: Domain-specific ERNIE models optimized for healthcare, finance, manufacturing, and other sectors. Just as general-purpose language models have spawned specialized versions, ERNIE will likely follow suit.

Hardware Optimization: Continued compression and quantization improvements enabling ERNIE to run on less powerful hardware, expanding the addressable market.

Integration Frameworks: Better integration with popular ML platforms, data science tools, and enterprise software stacks. Making deployment frictionless is key to adoption.

International Distribution: As ERNIE gains adoption, localized versions supporting specific languages and cultural contexts will likely emerge.

Competitive Response: Expect OpenAI, Google, Anthropic, and other Western companies to prioritize efficiency improvements and multimodal capability enhancements in response to ERNIE's breakthrough. Competition accelerates innovation.

The Historical Parallel

ERNIE 4.5's breakthrough brings to mind historical parallels in technology competition. In the 1980s, Japanese semiconductor companies overtook American rivals in memory chips by focusing on manufacturing efficiency and quality. The lesson many analysts drew: companies can win by optimizing what matters in real products, not just benchmarks.

ERNIE might represent a similar dynamic in AI. Western companies have pursued "biggest model" metrics. Baidu is optimizing for "most useful model per resource unit." History suggests the latter often matters more for real-world impact.

Bottom Line: What Enterprises Should Know

For enterprise decision-makers evaluating AI systems:

- ERNIE 4.5 is genuinely competitive with GPT-5 and Gemini 2.5 Pro on visual tasks, and in some benchmarks surpasses them.

- Open-source commercial licensing creates strategic advantages: no API dependencies, on-premises deployment, customization capability.

- Efficiency matters in production. Fewer active parameters means lower infrastructure costs and faster inference, translating directly to business value.

- Multimodal capability solves real problems that text-only models struggle with. If your use case involves processing images, documents, or video, ERNIE's architecture is specifically designed for this.

- Competition benefits everyone. ERNIE's existence will push OpenAI, Google, and Anthropic to prioritize efficiency and multimodal capability. The entire ecosystem improves.

- Risk diversification suggests not depending on a single provider. Organizations using only GPT-4 or Gemini benefit from evaluating alternatives like ERNIE to reduce single-point-of-failure risks.

ERNIE 4.5 represents not just a technical achievement but a signal about the future direction of AI development: toward efficiency, multimodality, open accessibility, and genuine global competition. The next chapter of AI history isn't being written solely in San Francisco anymore.

