The Rise of Small Models: Why Lightweight AI Is Overtaking Giants in Real-World Use

While giant LLMs grab headlines, a quiet revolution is underway. Small, specialized AI models are proving more effective for many real-world tasks. Explore the 2025 shift toward efficiency, privacy, and practicality.

TrendFlash

Introduction: The Efficiency Era of AI

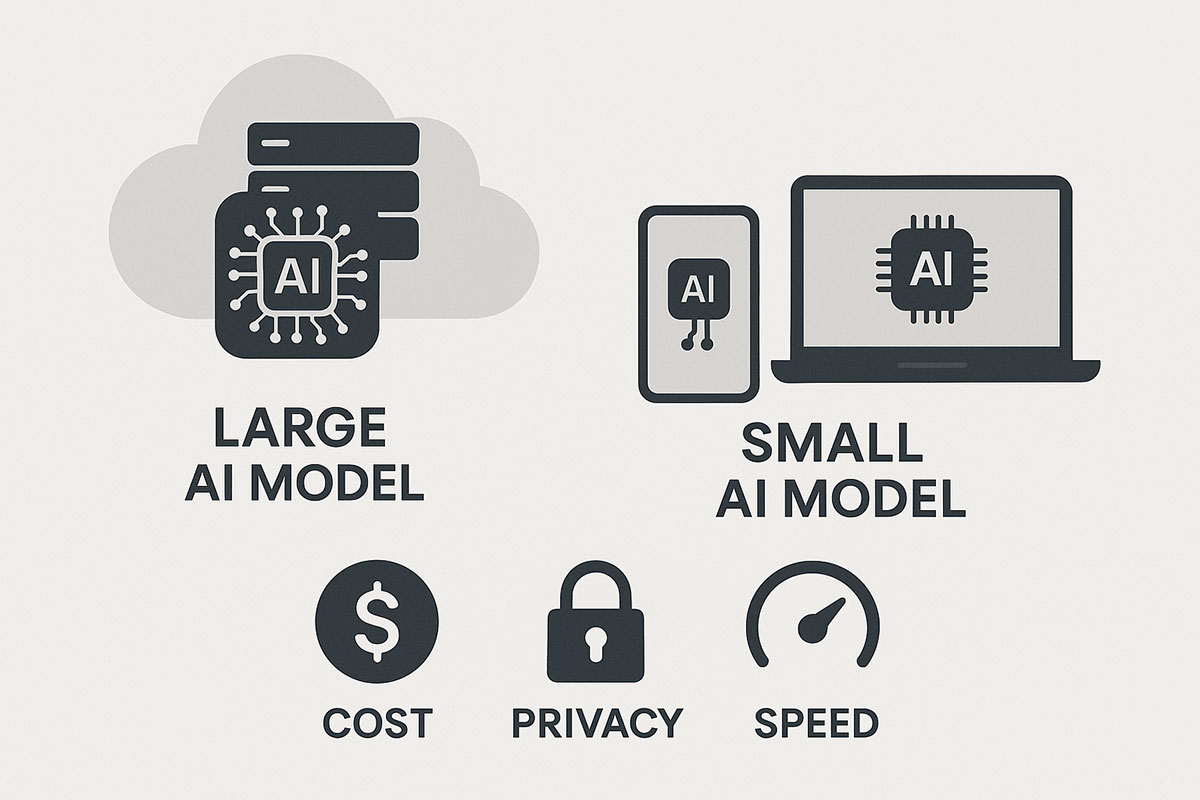

In 2025, the narrative that "bigger is better" in artificial intelligence is being decisively challenged. While frontier models like GPT-5 and Claude Opus continue to push the boundaries of what's possible, a parallel revolution is taking place off the cloud and on our devices. The era of the small, efficient, and hyper-specialized AI model has arrived. Fueled by advancements in model distillation and a growing demand for practical deployment, these lightweight models are not just alternatives to their larger counterparts; in many real-world scenarios, they are superior. This shift is redefining the AI landscape, prioritizing cost, privacy, and deployment flexibility over raw, generalized power.

The trend is clear. The 2025 Stanford AI Index Report highlights that AI is becoming radically more efficient and affordable, with the inference cost for a system performing at the level of GPT-3.5 dropping over 280-fold in less than two years. Furthermore, the performance gap between open-weight and closed models is narrowing dramatically, from 8% to just 1.7% on some benchmarks in a single year. This means businesses and developers no longer need to choose between capability and practicality. They can have both.
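To put the 280-fold figure in perspective: if a total improvement factor F accrues over t years at a constant yearly rate r, then r = F^(1/t). The Python sketch below applies that formula. It assumes the period is exactly two years; because the report says "less than two years," the real per-year rate is somewhat higher.

```python
def annualized_factor(total_factor: float, years: float) -> float:
    """Constant per-year factor that compounds to `total_factor` over `years`."""
    return total_factor ** (1.0 / years)


# Assumption: treat "less than two years" as exactly two years.
# A shorter window would imply an even larger per-year factor.
per_year = annualized_factor(280, 2.0)
print(f"~{per_year:.1f}x cheaper per year")
```

Under that assumption, the cost of GPT-3.5-level inference fell by roughly 16.7x per year.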

What Defines a "Lightweight" AI Model?

Before diving into the "why," it's crucial to understand the "what." A lightweight LLM isn't merely a shrunken-down large model. It's a deliberately optimized system designed for a specific balance of performance and efficiency. Key defining factors include:

- Model Size: Typically from about 1 billion to 7 billion parameters (a few, such as Gemma 2 9B, are somewhat larger), a fraction of the hundreds of billions found in frontier models.

- Efficiency: Lower memory requirements and faster inference times, reducing hardware and energy costs significantly.

- Deployment Flexibility: Able to run in cloud environments, on edge devices, and even on mobile phones and embedded systems.
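Parameter count translates fairly directly into memory. As a rough rule, weight memory in bytes is the number of parameters times the bits per parameter, divided by 8. The Python sketch below applies that rule, using 16-bit as the unquantized baseline. It counts the weights only, an assumption that understates real usage, since the KV cache, activations, and runtime overhead add to it.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, which add to this figure.
    """
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


for params in (1, 3, 7):
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{params}B parameters at {bits}-bit: ~{gb:.1f} GB")
```

By this estimate, a 7B model needs about 14 GB for its weights at 16-bit precision but only about 3.5 GB at 4-bit. That drop is a large part of why these models fit on consumer hardware.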

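This shift is enabled by two techniques in particular. Quantization stores a model's weights at lower numerical precision (for example, 8-bit or 4-bit integers instead of 16-bit floats), which cuts model size and computational needs, usually with only a modest loss in quality. Knowledge distillation transfers knowledge from a massive "teacher" model to a smaller "student" model by training the student to match the teacher's outputs.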
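Both techniques can be illustrated in miniature. The Python sketch below shows symmetric per-tensor int8 quantization, one common scheme among several (production toolkits often use per-channel scales or 4-bit formats). It also shows a soft-target distillation loss in the style of Hinton et al., which measures how far the student's temperature-softened predictions are from the teacher's. The weights and logits are made-up illustrative values, and in practice the distillation term is usually combined with a standard loss on the true labels.

```python
import math


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale for all-zero input
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2


weights = [0.42, -1.27, 0.05, 0.88]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print("int8 codes:", q)
print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, restored)))

print("loss, student far from teacher:", distillation_loss([0.1, 0.2, 3.0], [2.5, 0.3, 0.1]))
print("loss, student close to teacher:", distillation_loss([2.4, 0.3, 0.2], [2.5, 0.3, 0.1]))
```

Each int8 code takes a quarter of the space of a 32-bit float, and the round-trip error per weight is at most half the scale. The distillation loss shrinks as the student's output distribution approaches the teacher's.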

Why Small Models are Winning in the Real World

The theoretical advantages of small models translate into tangible, game-changing benefits for practical applications. Here’s why they are gaining such rapid traction in 2025.

1. Unbeatable Cost Efficiency

For businesses, the cost of using large, cloud-based AI models can be unpredictable and prohibitive. Most popular models are proprietary and billed per token, so costs can snowball quickly at scale. Running an open-source model locally turns this into a largely fixed cost: once the hardware is in place, electricity is the main ongoing expense. That matters because spending on AI is growing fast (U.S. private AI investment reached $109.1 billion in 2024), and companies are looking for cost-effective ways to deploy it. The rise of consumption-based pricing for cloud AI has also introduced significant budget volatility, making the predictable, low cost of local small models increasingly attractive.
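The trade-off is easier to see with numbers. The Python sketch below compares a per-token bill against a fixed local setup (amortized hardware plus electricity). Every price, wattage, and token volume in it is a hypothetical placeholder rather than a real vendor quote, and it assumes a single local machine can serve the full volume, which may not hold at high throughput.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Pay-per-token cost: scales linearly with usage."""
    return tokens_per_month / 1e6 * usd_per_million_tokens


def monthly_local_cost(hardware_usd: float, amortize_months: int, avg_watts: float,
                       hours_per_month: float, usd_per_kwh: float) -> float:
    """Local cost: amortized hardware plus electricity, independent of token volume."""
    hardware = hardware_usd / amortize_months
    electricity_kwh = avg_watts / 1000 * hours_per_month
    return hardware + electricity_kwh * usd_per_kwh


def break_even_tokens(local_monthly_usd: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which the API bill equals the local cost."""
    return local_monthly_usd / usd_per_million_tokens * 1e6


# All figures below are hypothetical placeholders, not real prices.
local = monthly_local_cost(hardware_usd=3000, amortize_months=36, avg_watts=300,
                           hours_per_month=720, usd_per_kwh=0.15)
for tokens in (10e6, 100e6, 500e6):
    api = monthly_api_cost(tokens, usd_per_million_tokens=2.0)
    print(f"{tokens / 1e6:>4.0f}M tokens/month: API ${api:,.2f} vs local ${local:,.2f}")
print(f"break-even: ~{break_even_tokens(local, 2.0) / 1e6:.0f}M tokens/month")
```

With these placeholder figures, the local setup costs the same at any volume, so the API is cheaper at low usage, while the local model wins once monthly volume passes the break-even point (around 58 million tokens here).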

2. Maximum Privacy and Data Security

In an age of increasing data regulation and security concerns, small models offer a compelling advantage: your data never leaves your device. With local AI, there is no API call to a remote server, so there is no risk of a third-party provider using your data for training or advertising, or exposing it in a breach on its side. This is especially critical in healthcare, legal, and finance, and for any company that uses sensitive internal data with AI. It provides a level of data governance that is much harder to guarantee with cloud-hosted giants.

3. Ultra-Low Latency and Offline Capabilities

When you use an online API, you must send your data, wait for a remote server to process it, and wait again for the response to come back, all of which adds delay. Local AI removes that network round trip, so responses can start almost immediately. This low latency is crucial for real-time applications like live translation, voice assistants, and interactive features. Small models also keep working offline: during flights, in remote locations, and anywhere else connectivity is unreliable or nonexistent.

4. High Customizability and Specialization

Open-source small models can be fine-tuned on your own data, which adjusts the model's weights. They can also be paired with retrieval-augmented generation (RAG), which fetches relevant passages from your documents at query time and adds them to the prompt without changing the model at all. Either way, you can take a general-purpose model and tailor it to your company's internal jargon, knowledge base, or unique processes, often making its answers more accurate and relevant than those of a one-size-fits-all giant model. You have full control over the model's deployment, updates, and behavior.
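To make the RAG side concrete, the Python sketch below shows only the retrieval-and-prompt-building half. It is a simplification: real systems typically use an embedding model and a vector index, whereas this uses word-count cosine similarity as a stand-in. The documents, prompt wording, and function names are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for an embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend retrieved context to the question before it is sent to the local model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\n{context}\n\nQuestion: {query}"


# Hypothetical internal knowledge base
docs = [
    "Expense reports are due on the 5th of each month.",
    "The VPN client is called CorpLink.",
    "PTO requests go through the HR portal.",
]
print(build_prompt("When are expense reports due?", docs, k=1))
```

Because the knowledge lives in the documents rather than the weights, updating an answer means editing a document, not retraining the model.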

Top Lightweight AI Models to Know in 2025

The ecosystem of small models is rich and diverse. Here are some of the stand-out performers defining the category this year.

| Model Name | Key Features | Best For |
|---|---|---|
| Mistral 7B | Great performance-to-size ratio, strong multilingual support, outperforms many larger models. | General-purpose chatbots, content generation, and cost-effective AI solutions. |
| Llama 3.2 1B & 3B | Meta's small but capable models, designed for low-resource environments such as mobile devices. | Keyword extraction, lightweight chatbots, and on-device grammar correction. |
| Google Gemma 2 (2B & 9B) | Google's compact models optimized for on-device AI, offering faster response times and lower power consumption. | Personal AI assistants, mobile apps, and embedded AI solutions. |
| Qwen2 1.5B | Part of Alibaba's Qwen series; offers strong quality for its size class on mobile devices. | On-device chatting and question-answering where speed and privacy are key. |
| Microsoft Phi-3 Mini | A lightweight model (3.8B parameters) focused on mobile and embedded applications, with fast inference. | IoT applications, AI-powered wearables, and offline AI processing. |

Making the Choice: When to Use Small vs. Large Models

Choosing the right model is about fitting the tool to the job. These rules of thumb can help guide the decision:

- Choose a Small Model if: Your priority is data privacy, you have a limited budget or unreliable connectivity, you need low-latency responses, or your task is well-defined and can be specialized.

- Choose a Large Model if: You need state-of-the-art performance on a highly complex, novel reasoning task, you require broad general knowledge, or you lack the technical resources for local deployment.

As the 2025 AI Index Report notes, complex reasoning remains a challenge even as AI makes major strides: models often fail to reliably solve logic tasks even when correct solutions exist. In high-stakes settings where precision is critical, a carefully applied specialized model or a human-in-the-loop system is still essential.

The Future is Hybrid and Efficient

The rise of small models does not spell the end for large ones. Instead, the future points toward a hybrid AI approach, where local small models handle routine, privacy-sensitive tasks, and cloud-based giants are queried only for complex, non-sensitive reasoning problems. This combines the best of both worlds: the efficiency and privacy of local AI with the vast capabilities of frontier models.
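The routing policy behind this hybrid approach can be expressed in a few lines. In the Python sketch below, the two boolean flags are hypothetical inputs; deciding whether a request is sensitive or complex is the hard part in practice and would need its own classifier or policy rules. Note the ordering: the sensitivity check comes first, so sensitive requests stay local even when they are complex, matching the rule that cloud models handle only non-sensitive problems.

```python
from dataclasses import dataclass


@dataclass
class Request:
    text: str
    contains_sensitive_data: bool   # hypothetical flag, e.g. set by a PII detector or policy rule
    needs_complex_reasoning: bool   # hypothetical flag, e.g. set by a task classifier


def route(req: Request) -> str:
    """Return 'local' or 'cloud' according to the hybrid policy sketched here."""
    if req.contains_sensitive_data:
        return "local"   # sensitive data never leaves the device, even for hard tasks
    if req.needs_complex_reasoning:
        return "cloud"   # non-sensitive, complex work goes to a frontier model
    return "local"       # routine work stays on the cheaper, faster local model


requests = [
    Request("Summarize this patient note", contains_sensitive_data=True, needs_complex_reasoning=False),
    Request("Draft a proof sketch for this problem", contains_sensitive_data=False, needs_complex_reasoning=True),
    Request("Fix the grammar in this sentence", contains_sensitive_data=False, needs_complex_reasoning=False),
]
for r in requests:
    print(f"{route(r):>5}  <- {r.text}")
```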

This trend is being driven by a broader industry push toward efficiency: the cost of AI is dropping, the energy efficiency of AI hardware is improving by about 40% each year, and open-weight models are closing the performance gap. In 2025, the barriers to powerful AI are falling fast, and small models are unlocking a new wave of accessible, practical, and transformative applications.

Related Reading

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

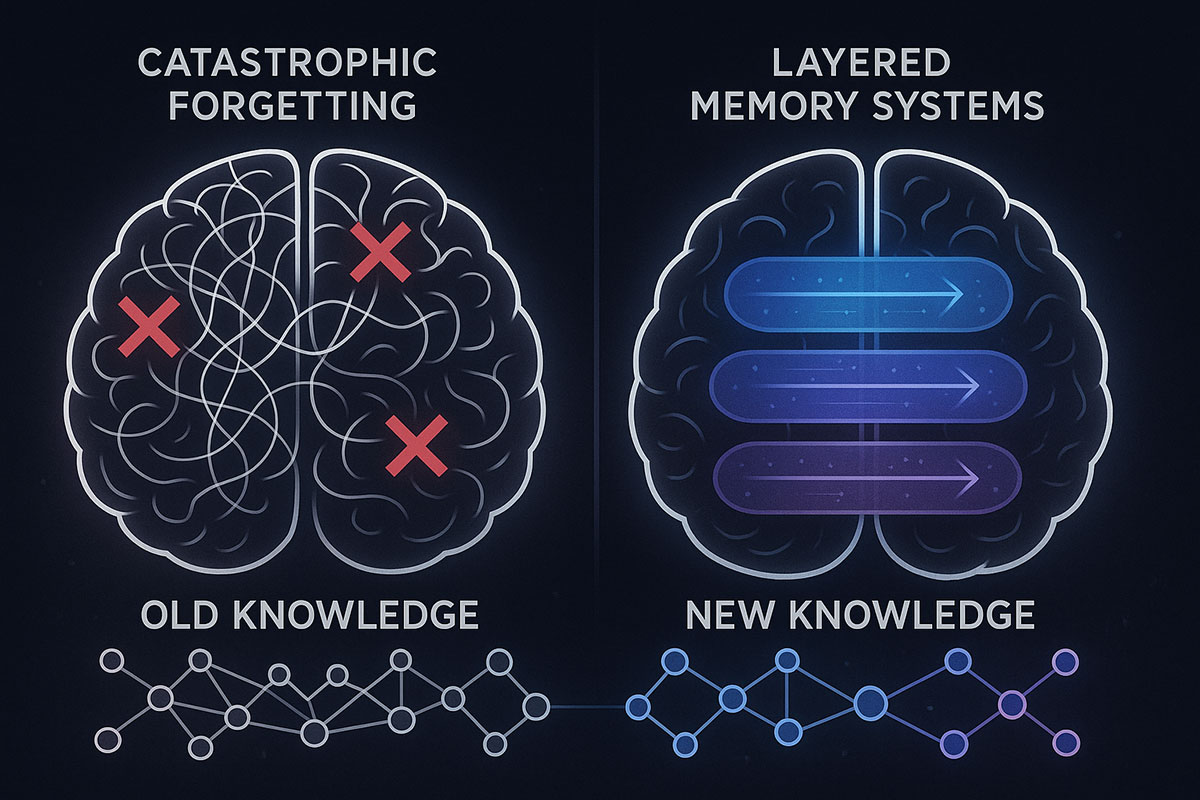

Google's HOPE Model: AI That Finally Learns Continuously (Catastrophic Forgetting Solved)

Google just unveiled HOPE, a self-modifying AI architecture that solves catastrophic forgetting—the fundamental problem preventing AI from learning continuously. For the first time, AI can absorb new knowledge without erasing what it already knows. Here's why this changes everything.

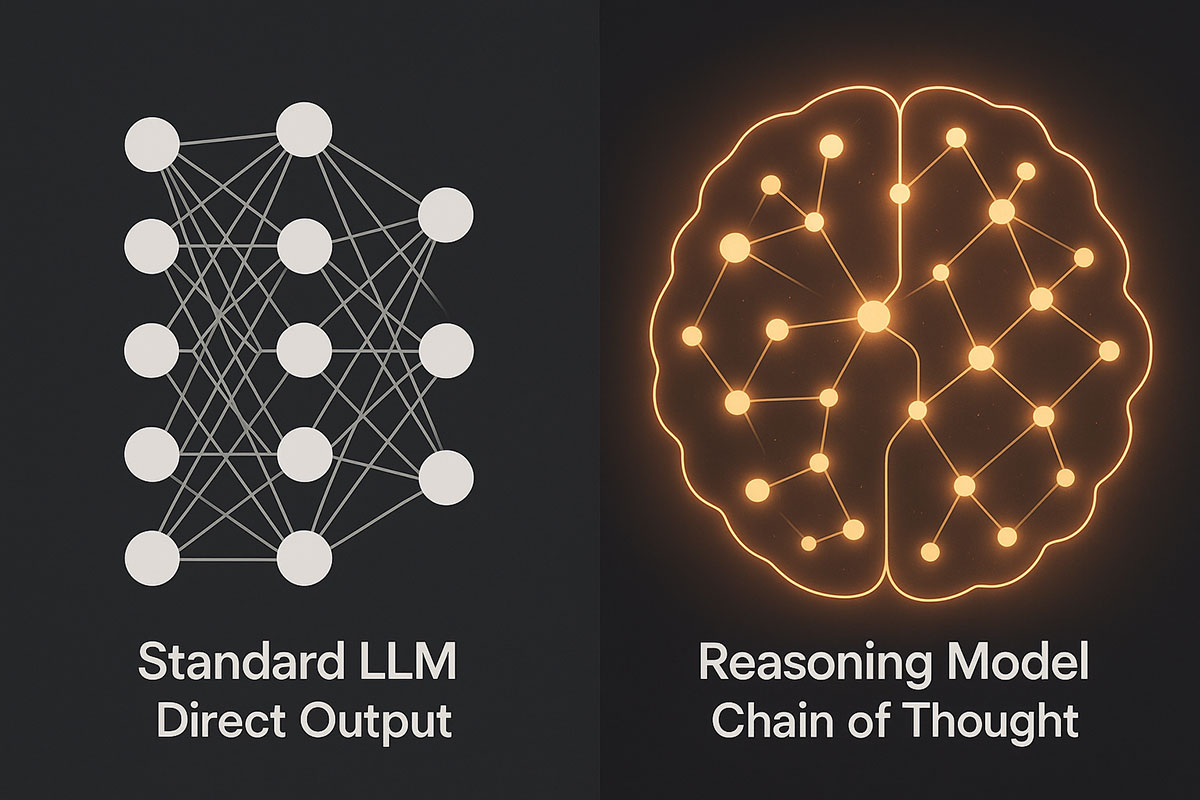

AI Reasoning Models Explained: OpenAI O1 vs DeepSeek V3.2 - The Next Leap Beyond Standard LLMs (November 2025)

Reasoning models represent a fundamental shift in AI architecture. Unlike standard language models that generate answers instantly, these systems deliberately "think" through problems step-by-step, achieving breakthrough performance in mathematics, coding, and scientific reasoning. Discover how O1 and DeepSeek V3.2 are redefining what AI can accomplish.

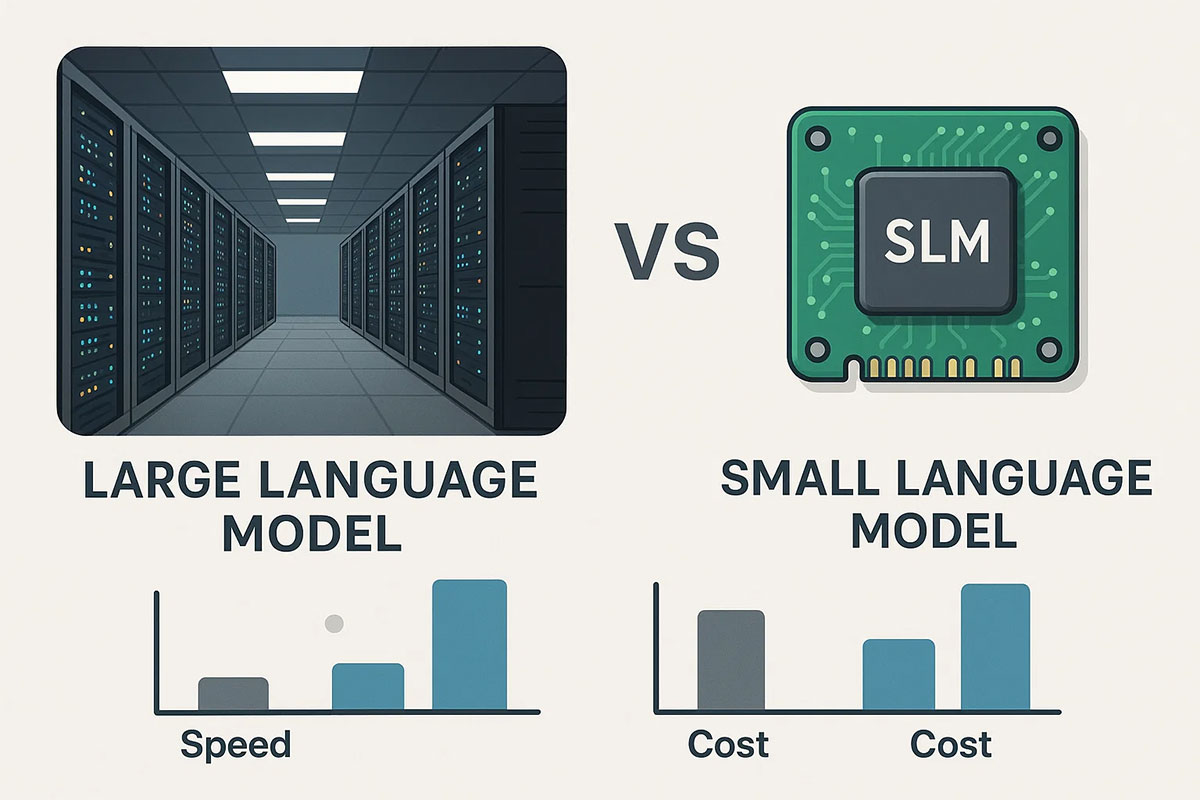

Why Smaller AI Models (SLMs) Will Dominate Over Large Language Models in 2025: The On-Device AI Revolution

The AI landscape is shifting from "bigger is better" to "right-sized is smarter." Small Language Models (SLMs) are delivering superior business outcomes compared to massive LLMs through dramatic cost reductions, faster inference, on-device privacy, and domain-specific accuracy. This 2025 guide explores why SLMs represent the future of enterprise AI.