How Computer Vision Is Powering Autonomous Robots in 2025

Computer vision gives machines the power to see, interpret, and act. In 2025, it’s enabling autonomous robots, cars, and drones to make intelligent real-time decisions.

TrendFlash

Introduction: Robots Learning to See

Robots have long been associated with manufacturing: mechanical arms following programmed trajectories, precisely repeating movements millions of times. In 2025, a different breed of robot is emerging. Equipped with computer vision and powered by deep learning, these robots can perceive their environments, understand what they see, and adapt their behavior dynamically.

This transformation is reshaping manufacturing, warehouse operations, healthcare, and research. Understanding how computer vision powers autonomous robots is essential for anyone involved in robotics, manufacturing, or autonomous systems.

What Is Computer Vision?

Core Concept

Computer vision is the AI field focused on enabling machines to see, interpret, and react to visual information. This includes:

- Image Classification: Identifying what is in an image

- Object Detection: Locating and identifying specific objects

- Semantic Segmentation: Pixel-level categorization

- 3D Perception: Understanding depth and spatial structure

- Pose Estimation: Understanding orientation and movement

- Anomaly Detection: Identifying unusual patterns

Why Vision Matters for Robotics

Without vision, robots are blind. They cannot:

- Locate objects they need to manipulate

- Avoid obstacles

- Identify quality issues or defects

- Adapt to environmental variations

- Understand human intentions through gestures

Vision transforms robots from mechanical scripts to adaptive, intelligent systems.

Core Computer Vision Tasks in Robotics

Object Detection and Localization

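Robots must find and locate objects. Modern systems use deep learning detectors (YOLO, Faster R-CNN, RetinaNet) that, in their standard form, return a class label, a confidence score, and a bounding box for each object. Recovering an object's orientation generally requires a further pose-estimation step.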

Applications:

- Pick-and-place robots in warehouses locating packages

- Manufacturing robots finding components to assemble

- Surgical robots identifying anatomical structures
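
To make the detection step more concrete, here is a minimal sketch of the post-processing that typically follows a detector: duplicate boxes for the same object are merged with non-maximum suppression, and the surviving box gives a pixel location to act on. The `Detection` type, the sample boxes, and the 0.5 overlap threshold are illustrative assumptions, not the output format of any particular model.

```python
from typing import List, NamedTuple, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


class Detection(NamedTuple):
    label: str
    score: float  # detector confidence in [0, 1]
    box: Box


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Greedy NMS: keep the best-scoring box, drop same-label boxes that overlap it."""
    kept: List[Detection] = []
    for det in sorted(dets, key=lambda d: d.score, reverse=True):
        if all(det.label != k.label or iou(det.box, k.box) < iou_threshold for k in kept):
            kept.append(det)
    return kept


def box_center(box: Box) -> Tuple[float, float]:
    """Pixel coordinates of the box center, a simple target for picking."""
    return ((box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0)


# Hypothetical raw detector output for one warehouse frame.
raw = [
    Detection("package", 0.92, (100, 100, 200, 200)),
    Detection("package", 0.80, (110, 105, 205, 210)),  # near-duplicate of the first
    Detection("package", 0.75, (400, 300, 480, 380)),  # a separate package
]
final = non_max_suppression(raw)
```

Here the second box overlaps the first heavily and is suppressed, leaving two packages. Production systems usually rely on the NMS routine shipped with their detection framework rather than a hand-written loop like this one.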

Pose Estimation

Pose estimation recovers the 3D position and orientation of objects (or humans). This is critical for manipulation: grasping requires knowing exactly where an object is and how it is oriented.
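
As a rough illustration of why orientation matters for grasping, the sketch below represents an estimated pose as a 4x4 homogeneous transform and uses it to map a grasp point defined on the object into the camera frame. The helper names, the restriction to rotation about the z-axis, and the numeric values are all simplifying assumptions made for this example.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def pose_matrix(yaw_rad: float, translation: Sequence[float]) -> Matrix:
    """4x4 homogeneous transform: rotation about the z-axis plus a translation."""
    cos_y, sin_y = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [cos_y, -sin_y, 0.0, tx],
        [sin_y, cos_y, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(pose: Matrix, point: Sequence[float]) -> List[float]:
    """Map a 3D point from the object frame into the frame the pose is expressed in."""
    x, y, z = point
    homogeneous = [x, y, z, 1.0]
    return [sum(pose[row][col] * homogeneous[col] for col in range(4)) for row in range(3)]


# Hypothetical estimate: the object sits 0.5 m in front of the camera,
# rotated 90 degrees about the z-axis.
object_in_camera = pose_matrix(math.pi / 2, (0.0, 0.0, 0.5))

# Grasp point defined 10 cm along the object's own x-axis.
grasp_in_camera = transform_point(object_in_camera, (0.1, 0.0, 0.0))
```

Because of the 90 degree rotation, the grasp point ends up along the camera's y-axis rather than its x-axis. A gripper that ignored orientation would reach for the wrong side of the object.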

Semantic Understanding

Beyond detecting objects, robots need to understand context:

- "That's a fragile object—handle gently"

- "That's a human—move safely"

- "That looks damaged—set aside for inspection"

Semantic segmentation and advanced classifiers enable this contextual understanding.
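
One simple way to turn such labels into behavior is a lookup from semantic class to handling policy, with the most cautious policy winning when several classes are in view. The sketch below assumes this design; the class names, speed limits, and grip forces are made-up placeholders, not values from any real robot or safety standard.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class HandlingPolicy:
    max_speed_m_s: float     # speed cap while this policy is active
    max_grip_force_n: float  # grip force cap in newtons
    action: str


# Hypothetical label-to-policy table; all numbers are illustrative only.
POLICIES = {
    "human": HandlingPolicy(0.25, 0.0, "slow_and_yield"),
    "fragile": HandlingPolicy(0.5, 10.0, "gentle_grasp"),
    "damaged": HandlingPolicy(0.6, 20.0, "set_aside_for_inspection"),
}
DEFAULT_POLICY = HandlingPolicy(1.5, 40.0, "normal_grasp")


def choose_policy(labels_in_view: Iterable[str]) -> HandlingPolicy:
    """Pick the most restrictive (slowest) policy among the labels the vision system reports."""
    candidates = [POLICIES.get(label, DEFAULT_POLICY) for label in labels_in_view]
    if not candidates:
        return DEFAULT_POLICY
    return min(candidates, key=lambda policy: policy.max_speed_m_s)


# A frame in which segmentation reports an ordinary box and a nearby person.
policy = choose_policy({"box", "human"})
```

With a person in view, the selected policy is the slow, yielding one regardless of what else is detected. Real systems usually enforce human safety in a separate, certified layer rather than in the perception code alone.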

Real-Time Scene Understanding

Robots need to understand entire scenes, not just individual objects. Scene understanding combines detection, segmentation, and reasoning about relationships.

Technology Enabling Autonomous Robots

Deep Learning Architecture

Convolutional neural networks (CNNs) dominate robotics vision because they are efficient enough for real-time processing on robot hardware. Vision Transformers are emerging for applications where accuracy matters more than latency.

Sensor Fusion

Modern robots combine:

- RGB cameras (color images)

- Depth cameras (3D structure)

- Thermal cameras (heat signatures)

- Lidar (laser-based 3D scanning)

- Proprioceptive sensors (robot joint angles, forces)

Fusing these modalities creates rich understanding of environments.
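
A small example of fusing two of these modalities is combining a pixel location from the RGB image with a depth reading to get a 3D point. The sketch below uses the standard pinhole camera model; the intrinsic parameters and pixel values are hypothetical, and it assumes the depth image is already aligned to the RGB image.

```python
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels


def backproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Convert pixel (u, v) plus depth in meters into a 3D point in the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 camera.
camera = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)

# Center of a detected object at pixel (470, 240), with a depth reading of 2.0 m.
point = backproject(470.0, 240.0, 2.0, camera)
```

The RGB detector says which pixel the object occupies, the depth camera says how far away that pixel is, and together they yield a position the robot can move toward.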

Real-Time Processing

Robot decisions must be fast. Real-time constraints drive architecture choices: perception models typically need to run at 30-60 Hz while keeping end-to-end latency under about 100 ms.
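
The arithmetic behind these constraints can be sketched as a simple budget check. The stage names and timings below are hypothetical, and the code assumes a pipelined design in which stages work on successive frames concurrently, so throughput is limited by the slowest stage while latency is the sum of all stages.

```python
from typing import Dict


def frame_period_ms(rate_hz: float) -> float:
    """Time available per frame at a given frame rate."""
    return 1000.0 / rate_hz


def check_budget(stages_ms: Dict[str, float], rate_hz: float, max_latency_ms: float = 100.0) -> dict:
    """Compare per-stage timings against a frame-rate target and a latency cap."""
    total = sum(stages_ms.values())
    slowest = max(stages_ms.values())
    return {
        "end_to_end_ms": total,
        "meets_latency": total <= max_latency_ms,
        # Pipelined assumption: a new frame can start once the slowest stage is free.
        "meets_rate": slowest <= frame_period_ms(rate_hz),
    }


# Hypothetical measured timings for one perception-to-action pipeline.
stage_latency_ms = {
    "capture": 8.0,
    "preprocess": 4.0,
    "inference": 18.0,
    "postprocess": 3.0,
    "planning": 12.0,
}

report_30hz = check_budget(stage_latency_ms, rate_hz=30.0)
report_60hz = check_budget(stage_latency_ms, rate_hz=60.0)
```

With these numbers the pipeline stays within the latency cap at 45 ms end to end and keeps up at 30 Hz (a 33.3 ms frame period). At 60 Hz the 18 ms inference stage exceeds the 16.7 ms period, which is the kind of result that pushes teams toward smaller or quantized models.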

Sim-to-Real Transfer

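Training in simulation and then transferring to real robots dramatically reduces real-world training time. Domain randomization, which varies properties such as lighting, textures, and camera placement during simulated training, helps models trained in idealized simulation work in real, messy environments.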
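
A minimal sketch of domain randomization, assuming a simulator that accepts a handful of scene parameters: each training episode draws a new random combination, so the model never sees the same lighting, camera angle, or surface texture twice. The `SceneParams` fields and their ranges are illustrative assumptions and do not correspond to any specific simulator's API.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    light_intensity: float   # relative brightness of the main light
    camera_yaw_deg: float    # small camera mounting error, in degrees
    texture_id: int          # index into a (hypothetical) texture library
    pixel_noise_std: float   # standard deviation of added image noise


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene configuration for a simulated training episode."""
    return SceneParams(
        light_intensity=rng.uniform(0.3, 1.5),
        camera_yaw_deg=rng.uniform(-10.0, 10.0),
        texture_id=rng.randrange(100),
        pixel_noise_std=rng.uniform(0.0, 0.05),
    )


# A seeded generator keeps the set of training scenes reproducible.
rng = random.Random(0)
scenes = [sample_scene(rng) for _ in range(1000)]
```

The idea is that if the randomized ranges are wide enough, real-world conditions look like just another sample from the training distribution.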

Real-World Applications in 2025

Warehouse Automation

Amazon Robotics uses vision-equipped robots to navigate warehouses, locate packages, and work collaboratively with humans. These robots now handle millions of items daily.

Manufacturing Quality Control

Vision systems inspect products at production line speeds, detecting defects that human inspectors can miss. This maintains quality while improving throughput.

Healthcare and Surgery

Surgical robots using computer vision enable minimally invasive procedures. Surgeons see magnified, real-time views while robots provide steady hands and precision.

Autonomous Vehicles

Computer vision powers autonomous vehicle perception, enabling road scene understanding, traffic sign recognition, and obstacle detection.

Inspection and Maintenance

Drones with vision systems inspect infrastructure (power lines, bridges, solar panels), improving safety and reducing human risk.

Research and Exploration

Robots with vision explore challenging environments—caves, space, underwater—gathering data inaccessible to humans.

Challenges and Future Directions

Generalization

Systems trained on one environment often fail in new environments. Enabling robots to generalize across conditions remains challenging.

Long-Horizon Planning

Most systems handle immediate perception and reaction. Multi-step planning that depends on visual understanding remains a frontier research area.

Common Sense Reasoning

Humans understand physics, cause-and-effect, and social norms intuitively. Encoding this knowledge into robots is ongoing work.

Safety and Verification

As robots operate autonomously around humans, ensuring safe behavior becomes paramount. Formal verification of vision-based decision-making is an emerging area.

Conclusion

Computer vision is the sense enabling autonomous robots. As vision systems improve and deployment scales, robotics will transition from scripted automation to truly adaptive, intelligent systems that understand and respond to complex environments.


Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

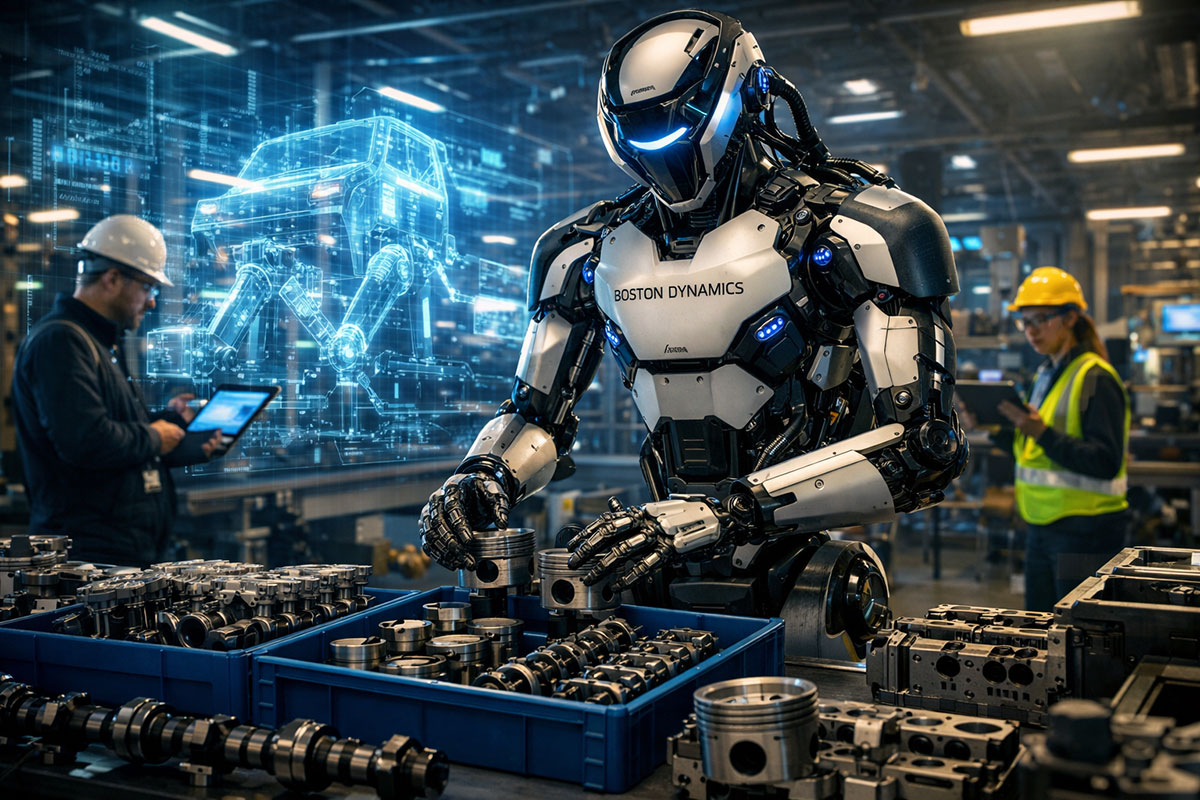

Physical AI Hits the Factory Floor: Beyond the Humanoid Hype

Forget the viral dance videos. In early 2026, Physical AI moved from the lab to the assembly line. With the electric Boston Dynamics Atlas beginning field tests at Hyundai, we explore the "Vision-Language-Action" models and digital twins turning humanoid hype into industrial reality.

Boston Dynamics' Atlas at Hyundai: The Humanoid Robot Era Begins

The humanoid robot era isn't coming—it's here. Boston Dynamics' Atlas just walked onto a real factory floor at Hyundai's Georgia facility, marking the first commercial deployment of a humanoid robot in manufacturing. With 99.8% reliability, superhuman flexibility, and the ability to learn new tasks in a single day, Atlas represents a fundamental shift in how factories operate. But here's what nobody's talking about: this isn't about replacing workers. It's about redefining what manufacturing jobs look like.

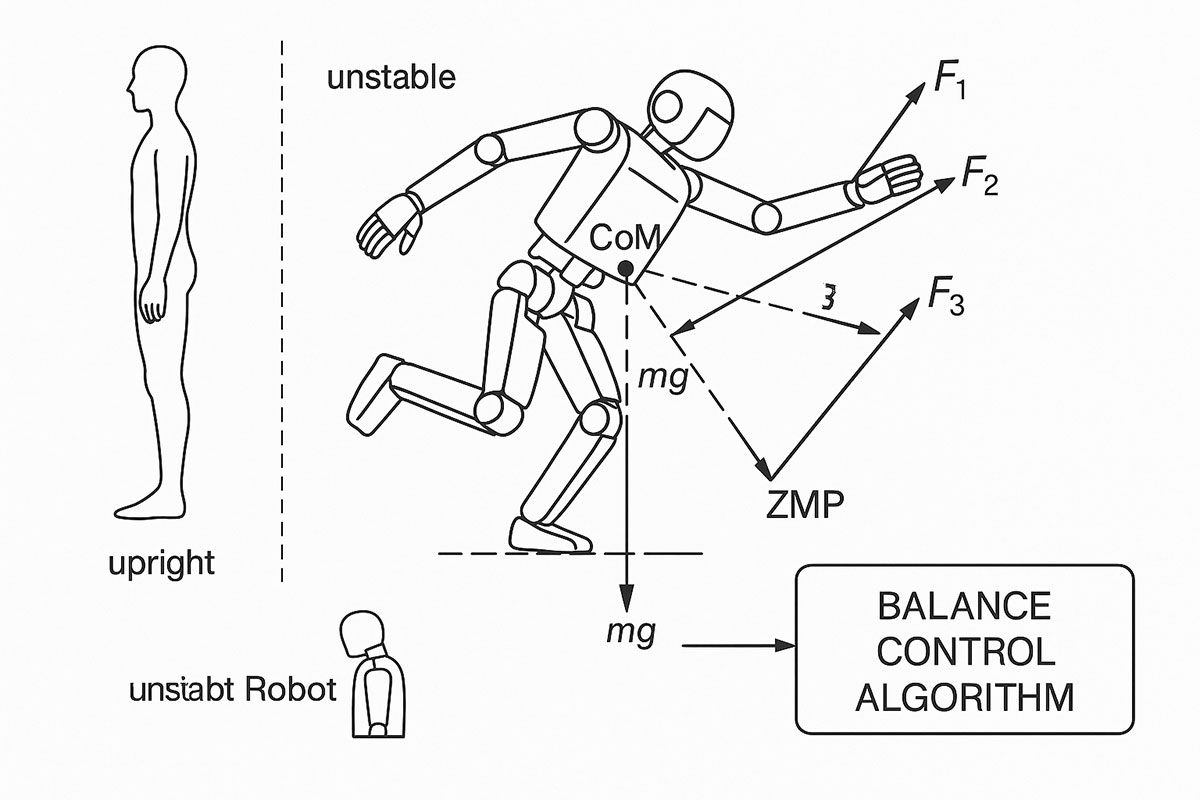

Russia's First AI Humanoid Robot Falls on Stage: The AIdol Disaster & What It Teaches About AI Robotics (November 2025)

On November 10, 2025, Russia's first humanoid AI robot, AIdol, became an instant internet sensation for all the wrong reasons—it collapsed face-first on its debut stage. While the viral video sparked laughter and memes online, the incident reveals profound truths about why humanoid robotics remains one of AI's greatest challenges.