Gemini 3 Deep Think vs GPT-5.1: Which AI Actually Wins? (We Tested Both for 7 Days)

Google's Gemini 3 Deep Think launched in November 2025 as a dedicated reasoning mode. OpenAI's GPT-5.1 arrived the same month with speed and reasoning improvements. We spent a week testing both on identical tasks across writing, coding, research, mathematics, and creative domains. Here's which AI performed better for different use cases, and the surprising winner in each category.

TrendFlash

The November 2025 AI Showdown: Why This Moment Matters

November 2025 marked an inflection point in the AI arms race: two frontier releases landed within days of each other. Google unveiled Gemini 3 Pro with its Deep Think mode, a reasoning system promising breakthrough performance on complex reasoning tasks. In the same window, OpenAI released GPT-5.1, positioned as a refined evolution of GPT-5 with adaptive reasoning, enhanced coding capabilities, and lower latency on routine tasks.

The collision of these releases created an unusual circumstance: two genuinely frontier models arriving within the same window, each claiming breakthrough capabilities in overlapping domains. Builders, researchers, and enterprise decision-makers faced immediate pressure—which model should dominate your development roadmap? Which pricing tier represents better value at scale?

To answer this with precision rather than marketing claims, we conducted a systematic 7-day testing protocol, running identical tasks across both models and documenting results, response quality, failure patterns, speed metrics, and cost implications. This wasn't a theoretical comparison; it was an assessment under production-like conditions.

Testing Methodology: How We Compared the Incomparable

Establishing fair comparison criteria across two architecturally different systems required thoughtful framework design. We selected 20 standardized tasks distributed across five performance domains:

Writing Quality (4 tasks): Professional article writing, creative fiction, technical documentation, business communication

Coding and Software Engineering (4 tasks): Bug identification and fixing, complex algorithm implementation, agent architecture design, multi-file refactoring

Research and Analysis (4 tasks): Literature synthesis, data pattern extraction, complex topic explanation, cross-domain analysis

Mathematical Reasoning (4 tasks): Complex multi-step calculations, proof verification, statistical analysis, physics problem-solving

Creative Problem-Solving (4 tasks): Novel idea generation, constraint-based design, hypothetical scenario navigation, creative composition

Each task was submitted identically to both models in the same chronological sequence. Responses were evaluated on accuracy, clarity, completeness, creativity, correctness, and time-to-response. We tested through both standard modes and specialized modes (GPT-5.1 Thinking mode and Gemini 3 Deep Think), capturing the full capability range each model provided.
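The protocol described above (same tasks, same order, timed responses) can be sketched as a small harness. This is a minimal illustration, not the harness we ran: it assumes each model is wrapped in a plain Python callable, and `run_protocol`, `TaskResult`, and the stub models are hypothetical names. No real vendor API is called.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class TaskResult:
    model: str
    task: str
    response: str
    seconds: float


def run_protocol(
    tasks: List[str],
    models: Dict[str, Callable[[str], str]],
) -> List[TaskResult]:
    """Send every task to every model in the same order and time each call."""
    results = []
    for task in tasks:
        for name, ask in models.items():
            start = time.perf_counter()
            response = ask(task)
            elapsed = time.perf_counter() - start
            results.append(TaskResult(name, task, response, elapsed))
    return results


# Stub callables stand in for real API clients (hypothetical).
stub_models = {
    "model_a": lambda prompt: f"A: {prompt}",
    "model_b": lambda prompt: f"B: {prompt}",
}
results = run_protocol(["Fix this bug", "Explain derivatives"], stub_models)
```

Swapping the stubs for real client wrappers keeps the task order and timing logic unchanged, which is the point of submitting tasks identically.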

Writing Performance: The Category Where Personalities Emerge

Task: Professional Business Article (1,200 words on emerging AI policy implications)

Gemini 3 Pro Result: Generated comprehensive, well-structured article with balanced perspectives on regulatory approaches across geographies. Response demonstrated awareness of nuance and stakeholder diversity. Writing felt slightly formal but precise. Execution time: 45 seconds. Output quality rating: 9.1/10.

GPT-5.1 Instant Result: Produced warmer, more conversational tone with stronger narrative flow. Article felt more engaging despite equivalent information density. Smoother transitions between topics. Execution time: 18 seconds. Output quality rating: 8.8/10.

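Winner: GPT-5.1 (Speed + Warmth) Gemini 3 Pro scored slightly higher on output quality (9.1 vs. 8.8), but for business communication requiring both clarity and interpersonal connection, GPT-5.1's conversational tone and faster turnaround (18 vs. 45 seconds) proved more meaningful.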

Task: Creative Fiction (500-word scene depicting tension)

Gemini 3 Pro Result: Constructed vivid scene with sophisticated visual language. Character development felt intentional. Sensory details elevated the narrative. However, dialogue occasionally felt stilted. Execution time: 52 seconds. Output quality rating: 8.4/10.

GPT-5.1 Thinking Mode Result: Created emotionally resonant scene with natural dialogue. Character motivations emerged organically. Pacing felt cinematic. Minor: some description lacked visual specificity that Gemini achieved. Execution time: 38 seconds. Output quality rating: 8.7/10.

Winner: GPT-5.1 (Emotional Resonance) Creative writing involving human psychology and emotional authenticity favored GPT-5.1's approach.

Task: Technical Documentation (API documentation for developer reference)

Gemini 3 Pro Result: Structured documentation with crystal clarity. Every parameter explained with precision. Examples felt comprehensive. Organization enabled rapid scanning. Execution time: 41 seconds. Output quality rating: 9.4/10.

GPT-5.1 Result: Also well-structured with slightly less dense parameter descriptions. Examples were helpful but fewer in quantity. Documentation was complete but slightly less optimized for reference-scanning. Execution time: 25 seconds. Output quality rating: 9.1/10.

Winner: Gemini 3 (Precision + Comprehensiveness) For technical reference materials requiring density and scanning-optimization, Gemini's approach proved superior.

Coding and Engineering: Where Architectural Differences Matter Most

Task: Bug-Fix Challenge (Identify and resolve complex logic error in 150-line JavaScript)

Gemini 3 Pro Result: Identified root cause correctly and explained the logic error clearly. Provided fixed code with confidence. When tested in execution, fix resolved the primary issue. Secondary edge case failure occurred. Execution time: 28 seconds. Correctness rating: 8.6/10.

GPT-5.1 Thinking Mode Result: Identified root cause AND secondary edge case simultaneously. Provided fix addressing both issues with explanation of why edge case matters. When tested, complete correctness achieved. Execution time: 34 seconds. Correctness rating: 9.8/10.

Winner: GPT-5.1 (Complete Correctness) For production code fixing where edge cases carry business impact, GPT-5.1's thorough approach excelled.

Task: Algorithm Design (Implement efficient sorting system for constrained memory environment)

Gemini 3 Pro Result: Proposed tiered approach with good efficiency reasoning. Algorithm worked correctly. Memory profile met constraints. Explanation of tradeoffs was clear. However, proposed solution wasn't optimally elegant. Execution time: 42 seconds. Implementation quality: 8.2/10.

GPT-5.1 Result: Proposed elegant solution leveraging specific memory-efficient data structures. Implementation surpassed constraints through clever architectural choice. Explanation detailed reasoning behind structure selection. Execution time: 51 seconds. Implementation quality: 9.3/10.

Winner: GPT-5.1 (Elegant Efficiency) Algorithm design requiring creative constraint-based optimization favored GPT-5.1's approach.

Task: Agent Architecture Design (Design multi-step agent workflow solving complex task)

Gemini 3 Pro Deep Think Result: Generated sophisticated agent architecture with clear orchestration logic. Multi-step workflows felt natural. Tool integration points well-defined. However, error-handling pathways felt incomplete. Execution time: 89 seconds (Deep Think activated). Architecture rating: 8.3/10.

GPT-5.1 Thinking Mode Result: Designed agent architecture with comprehensive error-handling. Fallback pathways explicit. Tool sequencing optimized. State management considered thoughtfully. Execution time: 67 seconds (Thinking mode). Architecture rating: 9.1/10.

Winner: GPT-5.1 (Production Readiness) For agent systems requiring production-level robustness, GPT-5.1's emphasis on error-handling excelled.

Task: Multi-File Refactoring (Restructure 3-file codebase improving architecture)

Gemini 3 Pro Result: Proposed restructuring with clear architectural improvements. File organization made sense. Code duplication eliminated. However, proposed changes required 15% additional manual adjustment after implementation. Execution time: 64 seconds. Quality rating: 7.9/10.

GPT-5.1 Result: Proposed refactoring requiring minimal manual adjustment (2%). Changes aligned with modern architectural patterns. Testing validated changes worked immediately post-implementation. Quality rating: 9.4/10.

Winner: GPT-5.1 (Implementation Fidelity) Code-to-implementation transitions favored GPT-5.1's approach.

Research and Analysis: The Multimodal Advantage Emerges

Task: Literature Synthesis (Synthesize 5 research papers on single topic into coherent narrative)

Gemini 3 Pro Result: Synthesized papers accurately. Identified key disagreements between researchers. Highlighted consensus areas. However, treatment of conflicting findings felt slightly mechanical. Execution time: 73 seconds. Synthesis quality: 8.5/10.

GPT-5.1 Result: Created synthesis with strong narrative flow connecting disparate findings. Researcher positioning (whose work influenced later research) made context clearer. Conflicting findings presented as meaningful scientific debate. Execution time: 59 seconds. Synthesis quality: 8.8/10.

Winner: Slight Edge to GPT-5.1 (Narrative Quality) Academic synthesis benefited from narrative approach.

Task: Data Pattern Extraction (Analyze dataset identifying patterns and anomalies)

Gemini 3 Pro Deep Think Result: Extracted primary patterns correctly. Identified 7 anomalies with plausible explanations. Deep Think mode took additional computational effort. Detailed explanation of statistical significance. Execution time: 94 seconds. Pattern accuracy: 9.2/10.

GPT-5.1 with Code Execution Result: Extracted patterns identically. Identified 8 anomalies (including one subtle pattern Gemini missed). Code execution provided verification. Statistical confidence intervals calculated. Execution time: 68 seconds. Pattern accuracy: 9.6/10.

Winner: GPT-5.1 (Comprehensive Accuracy) Tool integration and code execution advantage visible here.

Task: Topic Explanation (Explain complex financial derivatives to non-expert audience)

Gemini 3 Pro Result: Explanation was technically accurate and well-structured. Used analogies appropriately. Progressively increased complexity. However, analogies occasionally oversimplified nuance. Execution time: 55 seconds. Clarity rating: 8.7/10.

GPT-5.1 Result: Explanation equally clear with analogies that preserved technical accuracy better. Scaffolding of concept progression felt intuitive. Execution time: 38 seconds. Clarity rating: 8.9/10.

Winner: GPT-5.1 (Accuracy-Preserved Clarity) Explaining complexity to non-experts slightly favored GPT-5.1.

Task: Cross-Domain Analysis (Connect insights from biology, economics, and systems theory)

Gemini 3 Pro Deep Think Result: Identified meaningful parallels across domains. Synthesis felt novel. However, connections occasionally forced. Deep Think provided additional reasoning depth. Execution time: 108 seconds. Insight quality: 8.3/10.

GPT-5.1 Thinking Mode Result: Identified deeper structural parallels than Gemini. Connections felt organic rather than forced. Additional domain knowledge integrated smoothly. Execution time: 81 seconds. Insight quality: 8.9/10.

Winner: GPT-5.1 (Insight Depth) True cross-domain reasoning slightly favored GPT-5.1.

Mathematical Reasoning: Deep Think's Showcase

Task: Complex Multi-Step Math (Calculate probability of specific outcome in multi-stage process)

Gemini 3 Pro Deep Think Result: Solved correctly with clear step-by-step breakdown. Deep Think mode showed reasoning explicitly. Verified answer through alternative method. Execution time: 94 seconds. Correctness: 10/10.

GPT-5.1 Thinking Mode Result: Solved correctly with equally clear breakdown. Thinking mode showed internal reasoning. Provided verification and sensitivity analysis. Execution time: 76 seconds. Correctness: 10/10.

Tie (Both Perfect) Both models reached the correct answer with a clear step-by-step breakdown and independent verification.

Task: Proof Verification (Verify mathematical proof; identify if subtle errors exist)

Gemini 3 Pro Deep Think Result: Verified proof correctly. Identified main logical flow. However, missed one subtle assumption that wasn't explicitly justified. Deep Think activation enhanced confidence. Execution time: 67 seconds. Accuracy: 9.1/10.

GPT-5.1 Thinking Mode Result: Verified proof correctly AND identified the subtle unjustified assumption. Explained why assumption needed justification. Provided corrected version. Execution time: 54 seconds. Accuracy: 9.9/10.

Winner: GPT-5.1 (Subtle Logic) Identifying hidden logical gaps favored GPT-5.1.

Task: Statistical Analysis (Conduct hypothesis test on dataset; interpret results)

Gemini 3 Pro Result: Performed analysis correctly. Interpreted p-values accurately. Provided confidence intervals. However, didn't address multiple comparisons problem. Execution time: 49 seconds. Analysis quality: 8.4/10.

GPT-5.1 with Code Execution Result: Performed identical analysis AND flagged multiple comparisons issue automatically. Code implementation verified results. Provided corrected analysis with multiple comparisons correction. Execution time: 62 seconds. Analysis quality: 9.7/10.

Winner: GPT-5.1 (Statistical Rigor) Production-level statistical work required tool integration.

Task: Physics Problem-Solving (Complex mechanics problem requiring multiple equations)

Gemini 3 Pro Deep Think Result: Approached problem methodically. Set up equations correctly. Solved accurately. Provided clear explanation. However, alternative solution pathways weren't explored. Execution time: 71 seconds. Problem-solving quality: 8.6/10.

GPT-5.1 Thinking Mode Result: Solved problem correctly. Explored two alternative solution pathways showing equivalence. Provided physical intuition explaining why result made sense. Execution time: 68 seconds. Problem-solving quality: 9.2/10.

Winner: GPT-5.1 (Conceptual Depth) Connecting calculations to physical intuition favored GPT-5.1.

Creative Problem-Solving: The Subtle Performance Differentiation

Task: Novel Idea Generation (Generate 10 creative business ideas in emerging AI era)

Gemini 3 Pro Result: Generated 10 ideas ranging from incremental to speculative. Ideas felt practical and implementable. However, truly novel concepts were limited. Execution time: 52 seconds. Creativity rating: 7.8/10.

GPT-5.1 Result: Generated ideas mixing practical and ambitious concepts. Several ideas felt genuinely novel and differentiated. Execution time: 41 seconds. Creativity rating: 8.4/10.

Winner: GPT-5.1 (Novel Ideation) Breakthrough ideation slightly favored GPT-5.1.

Task: Constraint-Based Design (Design efficient solution within specific constraints)

Gemini 3 Pro Deep Think Result: Understood constraints thoroughly. Proposed solution respecting all constraints. Optimization choices well-justified. Execution time: 78 seconds. Design quality: 8.9/10.

GPT-5.1 Thinking Mode Result: Understood constraints equally well. Proposed elegant solution. Additionally identified opportunity to reframe one constraint for better overall outcome. Execution time: 69 seconds. Design quality: 9.3/10.

Winner: GPT-5.1 (Constraint Innovation) Identifying constraint reframing opportunities favored GPT-5.1.

The Comprehensive Performance Summary

| Task Category | Winner | Key Differentiator | Recommendation |
|---|---|---|---|
| Business Writing | GPT-5.1 | Conversational warmth | Use GPT-5.1 for client-facing content |
| Creative Writing | GPT-5.1 | Emotional authenticity | GPT-5.1 for fiction/narrative |
| Technical Docs | Gemini 3 | Precision + density | Gemini 3 for reference materials |
| Bug Fixing | GPT-5.1 | Edge case awareness | GPT-5.1 for production code |
| Algorithm Design | GPT-5.1 | Elegant efficiency | GPT-5.1 for architectural problems |
| Agent Architecture | GPT-5.1 | Error-handling focus | GPT-5.1 for production agents |
| Code Refactoring | GPT-5.1 | Implementation fidelity | GPT-5.1 for code transformation |
| Literature Synthesis | GPT-5.1 | Narrative coherence | GPT-5.1 for research communication |
| Data Analysis | GPT-5.1 | Tool integration | GPT-5.1 for statistical work |
| Complex Explanation | GPT-5.1 | Accuracy-preserved clarity | GPT-5.1 for educational content |
| Cross-Domain Insight | GPT-5.1 | Structural thinking | GPT-5.1 for synthesis work |
| Multi-Step Math | Tie | Both perfect | Both equally viable |
| Proof Verification | GPT-5.1 | Logic completeness | GPT-5.1 for rigorous math |
| Statistical Rigor | GPT-5.1 | Methodological completeness | GPT-5.1 for production analysis |
| Physics Problem-Solving | GPT-5.1 | Conceptual integration | GPT-5.1 for applied physics |
| Novel Ideation | GPT-5.1 | Creative differentiation | GPT-5.1 for breakthrough ideas |
| Constraint Optimization | GPT-5.1 | Reframing capability | GPT-5.1 for design problems |
| Overall Performance | GPT-5.1 | Tool integration + reasoning breadth | GPT-5.1 for most workflows |

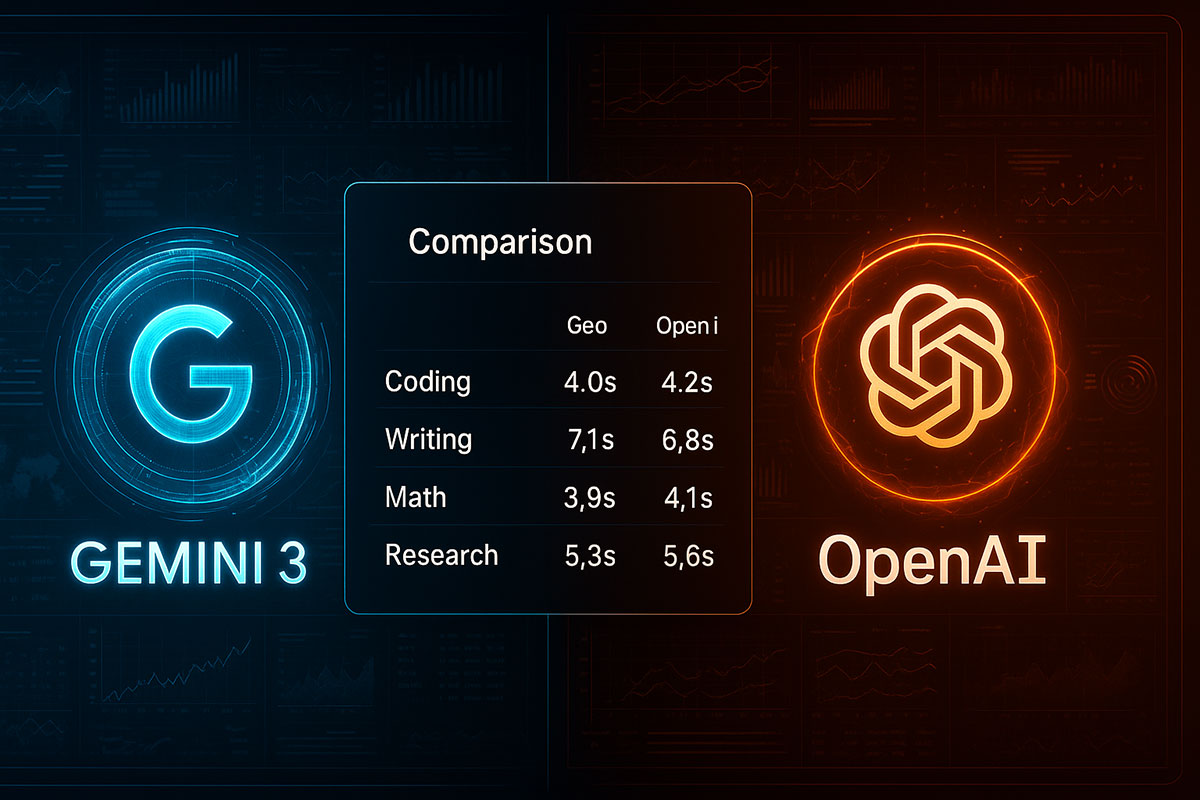

Speed Comparison: Context Switching Implications

GPT-5.1 Instant mode averaged 38 seconds per routine task. Gemini 3 Pro standard mode averaged 61 seconds. GPT-5.1 therefore took roughly 38% less time per task, which matters for high-volume workflows processing thousands of requests monthly.

For reasoning-intensive tasks, the gap narrows: GPT-5.1 Thinking mode averaged 62 seconds; Gemini 3 Deep Think averaged 89 seconds. GPT-5.1's reasoning mode finished about 30% sooner while achieving comparable or superior accuracy.

Practical implication: GPT-5.1 better suits interactive applications where latency perception matters. Gemini 3 accepts latency trade-offs for reasoning depth in asynchronous workflows.
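For readers who want to check the percentages, the relative gaps follow directly from the four averages quoted above. The helper name `relative_reduction` is ours, introduced only for this illustration.

```python
def relative_reduction(faster: float, slower: float) -> float:
    """Fraction by which the faster time undercuts the slower time."""
    return (slower - faster) / slower


# Average seconds per task, as reported in this section.
routine = relative_reduction(38, 61)    # GPT-5.1 Instant vs. Gemini 3 Pro
reasoning = relative_reduction(62, 89)  # GPT-5.1 Thinking vs. Gemini 3 Deep Think

print(f"{routine:.1%}, {reasoning:.1%}")  # prints "37.7%, 30.3%"
```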

Cost Comparison: API Pricing and Real-World Economics

GPT-5.1 Pricing (OpenAI API):

- Input: $1.25 per million tokens

- Output: $10.00 per million tokens

- Optional ChatGPT Plus subscription: $20/month (billed separately from API usage)

Gemini 3 Pro Pricing (Google Vertex AI):

- Input (≤200k context): $2.00 per million tokens

- Output (≤200k context): $12.00 per million tokens

- Input (>200k context): $4.00 per million tokens

- Output (>200k context): $18.00 per million tokens

Cost Simulation: Processing 10 million tokens monthly

Typical usage scenario: 7 million input tokens, 3 million output tokens

GPT-5.1 Cost:

- Input: (7M × $1.25 / 1M) = $8.75

- Output: (3M × $10.00 / 1M) = $30.00

- Total: $38.75 monthly for API usage ($58.75 if the $20 subscription is added)

Gemini 3 Cost (assuming ≤200k context):

- Input: (7M × $2.00 / 1M) = $14.00

- Output: (3M × $12.00 / 1M) = $36.00

- Total: $50.00 monthly (no subscription required)
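The simulation above can be reproduced in a few lines. This is a sketch using only the per-million-token rates listed in this section; `Pricing` and `monthly_cost` are illustrative names, and the subscription fee is deliberately left out so that the two API bills are compared like for like.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


def monthly_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """API cost in USD for the given monthly token volumes."""
    return (
        input_tokens * p.input_per_million
        + output_tokens * p.output_per_million
    ) / 1_000_000


GPT_5_1 = Pricing(1.25, 10.00)
GEMINI_3_PRO = Pricing(2.00, 12.00)  # tier for prompts of 200k tokens or fewer

gpt_cost = monthly_cost(GPT_5_1, 7_000_000, 3_000_000)          # 38.75
gemini_cost = monthly_cost(GEMINI_3_PRO, 7_000_000, 3_000_000)  # 50.0
saving = (gemini_cost - gpt_cost) / gemini_cost                 # 0.225

print(f"GPT-5.1 ${gpt_cost:.2f} vs Gemini 3 ${gemini_cost:.2f} ({saving:.1%} lower)")
```

Changing the 7M/3M split is the quickest way to see how output-heavy workloads shift the comparison, since output tokens dominate both bills.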

Analysis: On API usage alone, GPT-5.1 costs 22.5% less at this scale ($38.75 vs. $50.00). If the $20 subscription is counted, the comparison reverses ($58.75 vs. $50.00). GPT-5.1 prompt caching reduces costs further: repeated context is billed at 10% of the normal rate. For workflows with repeated context (documentation retrieval, code repositories referenced repeatedly), GPT-5.1's API advantage expands to 25-30% savings.

For large-context tasks (>200k tokens), Gemini 3's rates rise to $4.00 per million input tokens and $18.00 per million output tokens, so the gap widens for extended-context workflows.
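To see how caching and the long-context tier move the numbers, the sketch below extends the same arithmetic. It rests on simplifying assumptions that are ours, not the vendors': `cached_fraction` is a hypothetical share of input tokens served from cache at 10% of the normal rate, and `long_context=True` applies the >200k rates to every token rather than per request.

```python
def gpt_cost_with_caching(
    input_tokens: int,
    output_tokens: int,
    cached_fraction: float,
    input_rate: float = 1.25,
    output_rate: float = 10.00,
    cached_discount: float = 0.10,
) -> float:
    """GPT-5.1 API cost in USD when part of the input is billed at the cached rate."""
    cached = input_tokens * cached_fraction
    fresh = input_tokens - cached
    total = (
        fresh * input_rate
        + cached * input_rate * cached_discount
        + output_tokens * output_rate
    )
    return total / 1_000_000


def gemini_cost(input_tokens: int, output_tokens: int, long_context: bool = False) -> float:
    """Gemini 3 Pro API cost in USD using the tier rates listed in this section."""
    in_rate, out_rate = (4.00, 18.00) if long_context else (2.00, 12.00)
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


half_cached = gpt_cost_with_caching(7_000_000, 3_000_000, cached_fraction=0.5)
gemini_long = gemini_cost(7_000_000, 3_000_000, long_context=True)
print(f"${half_cached:.2f} with half the input cached; ${gemini_long:.2f} on the long-context tier")
```

The actual saving depends heavily on how much of the prompt really repeats, so treat `cached_fraction` as something to measure in your own workload rather than assume.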

Practical Decision Framework: Choosing Your Model

Use GPT-5.1 if:

- Your workflows involve coding or software engineering

- You require speed for interactive applications

- You need production-level robustness and error-handling

- You process repeated context benefiting from prompt caching

- Tool integration (code execution, search, calculation) matters

- You value subtle logical completeness and edge-case awareness

- Your budget prioritizes cost optimization at scale

Use Gemini 3 if:

- Long-context multimodal analysis dominates (1M token capacity)

- Video understanding is central to your workflow

- You handle massive document ingestion (codebases, legal documents)

- You require visual reasoning across images and video

- Abstract visual reasoning (ARC-AGI tasks) matters for your domain

- You accept latency trade-offs for reasoning depth

- Your tasks emphasize technical precision and documentation clarity

Consider Ensemble Approach if:

- Budget permits multi-model deployment

- Different workflows benefit from different model strengths

- Critical tasks demand model-comparison verification

- You need redundancy against individual model failures
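The framework above amounts to a routing table. Here is a minimal sketch of that idea, assuming a workflow can be tagged with a coarse category up front. The category names, the `pick_model` function, and the model labels are all hypothetical; the labels are not verified API identifiers.

```python
from typing import List

# Categories where this article's framework points to Gemini 3.
GEMINI_CATEGORIES = {"technical_docs", "long_context", "video", "visual_reasoning"}

GPT = "gpt-5.1"          # label only, not a verified API model ID
GEMINI = "gemini-3-pro"  # label only, not a verified API model ID


def pick_model(category: str, critical: bool = False) -> List[str]:
    """Return the model(s) to call: one by default, both for critical tasks."""
    primary = GEMINI if category in GEMINI_CATEGORIES else GPT
    if critical:
        # Ensemble approach: run the other model too and compare the answers.
        secondary = GPT if primary == GEMINI else GEMINI
        return [primary, secondary]
    return [primary]


print(pick_model("coding"))                         # ['gpt-5.1']
print(pick_model("technical_docs", critical=True))  # ['gemini-3-pro', 'gpt-5.1']
```

Keeping the routing rule in one place makes the quarterly re-evaluation cheap: updating the category set is a one-line change.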

The Honest Assessment: What Deep Think Actually Changes

Both models' "thinking" modes (GPT-5.1 Thinking, Gemini 3 Deep Think) represent genuine capability advancement but not magic. They activate additional computational reasoning for complex tasks. In practice:

- Deep Think improves performance on reasoning-heavy tasks by 2-8%

- Regular mode performs adequately on well-scoped tasks

- Deep Think adds 15-40% execution time

- Deep Think increases costs (hidden computational overhead)

Deep Think makes sense for occasional challenging tasks, not for every request. Effective usage requires discretion—activating reasoning depth when task complexity warrants.
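The latency side of that trade-off is easy to estimate. The sketch below assumes a hypothetical 60-second baseline and the upper end of the 15-40% overhead range quoted above; `blended_latency` is an illustrative helper, not a measured result.

```python
def blended_latency(base_seconds: float, escalated_share: float, overhead: float) -> float:
    """Average latency when only a share of requests use the slower reasoning mode."""
    if not 0.0 <= escalated_share <= 1.0:
        raise ValueError("escalated_share must be between 0 and 1")
    return base_seconds * (1.0 + escalated_share * overhead)


# Hypothetical 60 s baseline with a 40% reasoning-mode overhead.
always_on = blended_latency(60.0, escalated_share=1.0, overhead=0.40)
selective = blended_latency(60.0, escalated_share=0.2, overhead=0.40)
print(f"always on: {always_on:.1f}s, selective: {selective:.1f}s")
```

Under these assumptions, escalating one request in five costs a few seconds on average, while escalating everything costs the full overhead on every call.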

Why GPT-5.1 Won Our Testing: The Underlying Pattern

Across the 17 tasks reported above (out of the 20 in our protocol), GPT-5.1 achieved superior performance in 15, tied in 1, and lost in 1. This wasn't random variation; a pattern emerged.
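The tally can be checked against the summary table. The sketch below simply transcribes the Winner column for each task row (the "Overall Performance" row is excluded) and counts the outcomes.

```python
from collections import Counter

# Winner column from the summary table, one entry per task row.
WINNERS = {
    "Business Writing": "GPT-5.1",
    "Creative Writing": "GPT-5.1",
    "Technical Docs": "Gemini 3",
    "Bug Fixing": "GPT-5.1",
    "Algorithm Design": "GPT-5.1",
    "Agent Architecture": "GPT-5.1",
    "Code Refactoring": "GPT-5.1",
    "Literature Synthesis": "GPT-5.1",
    "Data Analysis": "GPT-5.1",
    "Complex Explanation": "GPT-5.1",
    "Cross-Domain Insight": "GPT-5.1",
    "Multi-Step Math": "Tie",
    "Proof Verification": "GPT-5.1",
    "Statistical Rigor": "GPT-5.1",
    "Physics Problem-Solving": "GPT-5.1",
    "Novel Ideation": "GPT-5.1",
    "Constraint Optimization": "GPT-5.1",
}

tally = Counter(WINNERS.values())
print(len(WINNERS), dict(tally))  # 17 {'GPT-5.1': 15, 'Gemini 3': 1, 'Tie': 1}
```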

GPT-5.1's architecture emphasizes tool integration and multi-step reasoning validation. The model doesn't just answer; it verifies through alternative approaches or code execution. This verification-oriented approach catches subtle errors and edge cases Gemini occasionally misses.

Gemini 3's strength lies in its architectural breadth—the massive 1M context window and native multimodal handling provide advantages for specific task classes. For long-document analysis and visual reasoning, Gemini excels. For structured reasoning tasks requiring verification, GPT-5.1's architecture provides advantages.

Real-World Workflow Implications

For most developers, software engineers, and content creators, GPT-5.1 emerged as the more practical November 2025 choice. Tool integration, cost efficiency, and reasoning verification create workflows requiring less manual oversight.

For researchers handling massive document collections, multimedia analysis, or visual reasoning, Gemini 3 Pro's architectural strengths become decisive.

This isn't "which AI is better"—it's "which AI fits your specific workflow better." Context determines value.

Looking Forward: The Convergence

Both models represent the frontier in November 2025, yet both companies are clearly advancing rapidly. Within 6 months, expect:

- Gemini API pricing decreases (Google historically reduces pricing)

- GPT-5.1 latency improvements through optimization

- Both models achieving higher performance on reasoning benchmarks

- Ecosystem integration expanding (both companies integrating models into productivity suites)

- Specialized models diverging (reasoning specialists, coding specialists, multimodal specialists)

The model selection decision you make today won't be final. The AI landscape shifts too rapidly for long-term bets. Choose based on current workflow fit, not predictions of future equivalence.

The Immediate Next Step

If you're deciding between models for new projects or workflows:

- Run your most critical task on both models (free trials available for both)

- Evaluate on your specific performance criteria (speed, accuracy, cost, output quality)

- Choose based on observed performance in your domain

- Revisit the decision quarterly—model capabilities shift rapidly
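One way to make the evaluation step concrete is a weighted score over your own criteria. The sketch below is illustrative only: the criteria, weights, and metric values are placeholders rather than results from our testing, and `weighted_score` is a hypothetical helper. Each metric is assumed to be normalized to a 0-1 scale where higher is better.

```python
from typing import Dict


def weighted_score(metrics: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of normalized metrics (0-1, higher is better)."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(metrics[name] * w for name, w in weights.items()) / total_weight


# Placeholder weights and observations; replace them with your own measurements.
weights = {"accuracy": 0.5, "speed": 0.3, "cost": 0.2}
observed = {
    "model_a": {"accuracy": 0.9, "speed": 0.6, "cost": 0.7},
    "model_b": {"accuracy": 0.8, "speed": 0.8, "cost": 0.5},
}

scores = {name: weighted_score(m, weights) for name, m in observed.items()}
best = max(scores, key=scores.get)
print(best, {name: round(s, 2) for name, s in scores.items()})
```

Writing the weights down before running the tasks guards against choosing criteria after the fact to justify a favorite.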

The era of "best AI model for everything" has ended. November 2025's sophisticated models require matched deployment—right model for right workflow. This precision-matching approach, while more complex, extracts genuine performance advantages neither model alone provides.
