Can AI Beat the Stock Market in 2025? What Investors Should Know

In 2025, AI is analyzing millions of signals to predict stocks. But can it really beat the market? Here’s what investors need to know.

TrendFlash

Introduction: AI Isn't All Sunshine

The media loves to celebrate AI's benefits. But every powerful technology has a dark side, and AI is no exception. Understanding the risks is essential for navigating the AI future responsibly.

This guide explores AI's genuine dangers and what we're doing (or should be doing) to mitigate them.

The Major AI Risks

1. Algorithmic Bias & Discrimination

The Problem:

- AI trained on biased historical data perpetuates bias

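- Facial-analysis systems have shown error rates above 30% for darker-skinned women, versus under 1% for lighter-skinned men

- Lending algorithms can deny credit to minority applicants at higher rates

- Resume-screening AI has penalized female candidates (Amazon scrapped one such tool in 2018)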

Real Impact: Discriminatory outcomes at massive scale

Safeguards:

- Bias auditing before deployment

- Diverse training data

- Human oversight of high-impact decisions
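Bias auditing can start with something as simple as comparing approval rates across groups. The sketch below is a minimal illustration, not a complete audit: it assumes decisions arrive as hypothetical `(group, approved)` pairs and flags any group whose approval rate falls below 80% of the best-treated group's rate, a threshold borrowed from the US "four-fifths" rule of thumb in employment screening.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Approval rate per group, from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact(decisions, threshold=0.8):
    """Ratio of each group's approval rate to the highest group's rate.

    Returns (ratios, flagged), where flagged lists the groups whose ratio
    falls below `threshold` (0.8 mirrors the four-fifths rule of thumb).
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # Nobody was approved, so there is no disparity in approval rates.
        return {group: 1.0 for group in rates}, []
    ratios = {group: rate / best for group, rate in rates.items()}
    flagged = sorted(group for group, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


# Hypothetical data: group A approved 60/100 times, group B 30/100 times.
decisions = ([("A", True)] * 60 + [("A", False)] * 40
             + [("B", True)] * 30 + [("B", False)] * 70)
ratios, flagged = disparate_impact(decisions)
print(ratios, flagged)
```

Here group B's ratio is 0.5, so it is flagged. A low ratio is a signal to investigate, not proof of discrimination; a real audit would also look at error rates, sample sizes, and the features driving each decision.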

2. Privacy & Mass Surveillance

The Problem:

- AI makes surveillance cheap enough to run on entire populations

- Behavioral tracking (location, browsing, spending)

- Predictive profiling (inferring sensitive attributes)

- Facial recognition in public spaces

Real Impact: Little privacy left in public spaces; some governments use AI surveillance for population control

Safeguards:

- Data protection laws (GDPR, etc.)

- Transparency requirements

- User rights (access, deletion)

- Public oversight

3. Misinformation & Deepfakes

The Problem:

- AI generates convincing fake videos/audio

- AI writes persuasive misinformation at scale

- Deepfakes of celebrities and politicians eroding trust

- Political misinformation campaigns using AI

Real Impact: Erosion of truth, political instability, election interference

Safeguards:

- Detection tools for deepfakes

- Media literacy education

- Provenance tracking (proving origin)

- Platform responsibility
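Provenance tracking works by binding a piece of media to its publisher with a cryptographic tag, so any later edit is detectable. The sketch below is a simplified illustration under stated assumptions: it uses an HMAC with a hypothetical shared key (`PUBLISHER_KEY`) as a stand-in, whereas real provenance standards such as C2PA rely on public-key signatures and certificates so that anyone can verify content without holding a secret.

```python
import hashlib
import hmac

# Hypothetical publisher secret. Real provenance systems (e.g. C2PA) use
# public-key certificates rather than a shared key like this one.
PUBLISHER_KEY = b"example-secret-key"


def sign_content(content: bytes, source: str, key: bytes = PUBLISHER_KEY) -> dict:
    """Create a provenance record binding the content hash to its source."""
    digest = hashlib.sha256(content).hexdigest()
    message = f"{source}:{digest}".encode()
    tag = hmac.new(key, message, hashlib.sha256).hexdigest()
    return {"source": source, "sha256": digest, "tag": tag}


def verify_content(content: bytes, record: dict, key: bytes = PUBLISHER_KEY) -> bool:
    """True only if neither the bytes nor the claimed source were altered."""
    digest = hashlib.sha256(content).hexdigest()
    if not hmac.compare_digest(digest, record["sha256"]):
        return False  # the media itself changed after signing
    message = f"{record['source']}:{digest}".encode()
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["tag"])


video = b"original video bytes"
record = sign_content(video, "newsroom.example")
print(verify_content(video, record), verify_content(b"edited video bytes", record))
```

Any change to the bytes or to the claimed source makes verification fail. What this cannot do is prove that unsigned content is fake; it only shows that signed content is unchanged.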

4. Job Displacement & Economic Inequality

The Problem:

- Millions of jobs exposed to automation

- Transformation happening faster than retraining

- Economic benefits concentrating with tech companies

- Regional disparities widening

Real Impact: Unemployment, poverty, social instability in some regions

Safeguards:

- Retraining programs

- Social safety nets

- Equitable benefit sharing

- Regional economic diversification

5. Cybersecurity & Adversarial Attacks

The Problem:

- AI systems vulnerable to adversarial attacks

- Small changes to input cause wrong outputs

- Example: small stickers on a stop sign caused a vision model to read it as a speed-limit sign

- Malicious actors can exploit vulnerabilities
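The "small changes, wrong outputs" problem shows up even on the simplest model. The sketch below applies the Fast Gradient Sign Method (FGSM) to a toy logistic-regression classifier with made-up weights; it illustrates the idea and is not the physical sticker attack on traffic signs, which targeted a deep vision model. For logistic regression the gradient of the log-loss with respect to the input is `(p - y) * w`, so shifting each feature by `eps` in the sign of that gradient pushes the prediction toward the wrong class.

```python
import math


def predict_proba(w, b, x):
    """Logistic-regression probability that x belongs to class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0, or +1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method for logistic regression (labels y in {0, 1}).

    Each feature moves by at most eps, in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]  # d(log-loss)/dx for this model
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model and input, correctly classified as class 1.
w, b = [2.0, -1.0, 0.5], 0.0
x = [0.3, 0.2, 0.1]
x_adv = fgsm(w, b, x, y=1, eps=0.2)
print(predict_proba(w, b, x), predict_proba(w, b, x_adv))
```

With these numbers no feature moves by more than 0.2, yet the predicted probability drops from about 0.61 to about 0.44, flipping the predicted class.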

Real Impact: Potential autonomous vehicle crashes and security system bypasses

Safeguards:

- Adversarial training

- Robustness testing

- Human oversight of critical systems

- Red-teaming (friendly hackers)
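Robustness testing asks whether any allowed perturbation can flip a prediction, not just whether one particular attack does. For a linear classifier this has an exact answer: if every feature may move by at most `eps`, the score can shift by at most `eps` times the sum of the absolute weights. The sketch below turns that bound into a robust-accuracy check; the weights and data are hypothetical, labels are `-1`/`+1`, and deep networks need far more expensive verification or empirical attack suites instead.

```python
def margin(w, b, x, y):
    """Signed margin y * (w . x + b); positive means correctly classified."""
    return y * (sum(wi * xi for wi, xi in zip(w, x)) + b)


def is_robust(w, b, x, y, eps):
    """Exact L-infinity robustness check for a linear model.

    The worst-case attack lowers the margin by eps * sum(|w_i|), so the
    prediction survives every such attack only if the margin is larger.
    """
    return margin(w, b, x, y) > eps * sum(abs(wi) for wi in w)


def robust_accuracy(w, b, data, eps):
    """Share of (x, y) examples that stay correct under any eps-bounded attack."""
    return sum(is_robust(w, b, x, y, eps) for x, y in data) / len(data)


# Hypothetical model and labelled test set.
w, b = [2.0, -1.0, 0.5], 0.0
data = [([0.3, 0.2, 0.1], 1), ([1.0, 0.0, 0.0], 1), ([-0.5, 0.5, 0.0], -1)]
for eps in (0.0, 0.2, 0.5):
    print(eps, robust_accuracy(w, b, data, eps))
```

Clean accuracy is 100% here, but only two of the three examples survive at `eps = 0.2`. Adversarial training aims to raise that second number by training on perturbed examples.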

6. Concentration of Power

The Problem:

- A few companies control most frontier AI development (Google, Microsoft, Meta, OpenAI, Anthropic)

- These companies shape what AI can do

- Winner-take-all economics in AI

- Regulatory capture (companies shaping their own rules)

Real Impact: Corporate power over society, reduced competition

Safeguards:

- Antitrust enforcement

- Open-source alternatives

- Independent research

- Regulatory oversight
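Antitrust enforcers often quantify market concentration with the Herfindahl-Hirschman Index (HHI): the sum of each firm's squared market share, expressed in percent. The sketch below computes it from hypothetical size figures; the firms and numbers are invented for illustration and are not estimates of the real AI market.

```python
def hhi(sizes):
    """Herfindahl-Hirschman Index from each firm's size (revenue, users, etc.).

    Shares are converted to percentages, squared, and summed, so the index
    runs from near 0 (many tiny firms) to 10,000 (a single monopolist).
    """
    total = sum(sizes.values())
    if total <= 0:
        raise ValueError("total market size must be positive")
    return sum((100 * size / total) ** 2 for size in sizes.values())


# Hypothetical market: four firms with 40%, 30%, 20%, and 10% shares.
market = {"Firm A": 40, "Firm B": 30, "Firm C": 20, "Firm D": 10}
print(hhi(market))
```

This hypothetical market scores 3,000. For reference, the 2023 US Merger Guidelines treat markets with an HHI above 1,800 as highly concentrated.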

7. Environmental Impact

The Problem:

- Training large AI models uses massive energy

- Data centers already use roughly 1 to 2% of global electricity, and AI is driving that share up fast

- Heavy water use for cooling, including in water-scarce regions

- E-waste from hardware
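The energy cost of a training run can be estimated with simple arithmetic: accelerators times average power times hours gives the IT energy, multiplying by the data center's power usage effectiveness (PUE) adds cooling and other overhead, and multiplying by the grid's carbon intensity converts energy to emissions. The function below is a back-of-the-envelope sketch; every input in the example (1,000 GPUs, 0.7 kW per GPU, 30 days, PUE 1.2, 0.4 kg CO2e per kWh) is a hypothetical assumption, not a measurement of any real model.

```python
def training_footprint(num_gpus, kw_per_gpu, hours, pue, kg_co2e_per_kwh):
    """Back-of-the-envelope energy and emissions for one training run.

    energy_kwh = GPUs * average kW per GPU * hours * PUE
    emissions  = energy_kwh * grid carbon intensity (kg CO2e per kWh)
    """
    it_energy_kwh = num_gpus * kw_per_gpu * hours
    facility_energy_kwh = it_energy_kwh * pue  # PUE adds cooling and overhead
    emissions_kg = facility_energy_kwh * kg_co2e_per_kwh
    return facility_energy_kwh, emissions_kg


# Hypothetical run: 1,000 GPUs at 0.7 kW for 30 days in a PUE-1.2 facility.
energy, co2 = training_footprint(1000, 0.7, 30 * 24, 1.2, 0.4)
print(f"{energy:,.0f} kWh, {co2 / 1000:,.0f} tonnes CO2e")
```

With these assumptions the estimate comes to roughly 605,000 kWh and 240 tonnes of CO2e. Emissions scale in direct proportion to grid carbon intensity and PUE, which is why siting and renewable supply matter as much as the model itself.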

Real Impact: Accelerating climate change, resource depletion

Safeguards:

- Energy efficiency improvements

- Renewable energy adoption

- Model compression techniques

- Regulatory limits on energy use
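Model compression shrinks the memory and compute a model needs. One common technique is quantization: storing weights as 8-bit integers plus a scale factor instead of 32-bit floats. The sketch below shows symmetric per-tensor int8 quantization on a plain Python list of hypothetical weights; production toolkits add per-channel scales, calibration, and hardware-specific kernels, so treat this as an illustration of the idea only.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization.

    One shared scale maps the largest-magnitude weight to +/-127, and every
    weight is rounded to the nearest integer step in [-127, 127].
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate the original floats from the stored integers."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0, 0.333]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Each weight now needs 1 byte instead of 4, a 4x reduction, and the rounding error per weight is at most half a quantization step (`scale / 2`). Whether that error hurts accuracy has to be checked model by model.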

8. Autonomous Weapons & Military AI

The Problem:

- AI used for weapons systems

- Autonomous weapons making kill decisions

- Arms race dynamics (countries competing)

- Potential for escalation beyond human control

Real Impact: Conflicts that escalate faster than humans can follow, and war crimes with no one clearly accountable

Safeguards:

- International treaty talks (ongoing at the UN, no binding treaty yet)

- Advocacy groups pushing limits

- Transparency requirements

9. Manipulation & Addiction Design

The Problem:

- AI optimized for engagement, which can slide into compulsive use

- Algorithmic feeds designed for time-on-platform

- Personalization techniques exploiting psychology

- Particularly harmful to teenagers

Real Impact: Mental health crisis, social media addiction, polarization

Safeguards:

- Addiction research and warnings

- Parental controls

- Design regulation (banning addictive patterns)

- Digital literacy education

10. Loss of Human Agency

The Problem:

- Outsourcing decisions to AI systems

- Humans becoming passive consumers

- Loss of understanding of how things work

- Dependence on systems we don't control

Real Impact: Reduced autonomy, vulnerability to system failures

Safeguards:

- Maintaining human oversight

- Right to explanation

- Transparency about AI use

- Human-in-the-loop for important decisions
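Human-in-the-loop oversight is often implemented as a routing rule: the system acts on its own only when it is confident and the decision is low-stakes, and everything else goes to a person. The sketch below assumes a hypothetical model that outputs an approval probability; the 0.9 confidence threshold and the `high_impact` flag are illustrative choices, not values from any standard.

```python
from dataclasses import dataclass


@dataclass
class Routing:
    outcome: str       # "approve", "deny", or "human_review"
    confidence: float  # model's probability for the class it predicted
    automated: bool    # True only if no person was involved


def route(p_approve, threshold=0.9, high_impact=False):
    """Automate only confident, low-stakes decisions; escalate the rest."""
    predicted = "approve" if p_approve >= 0.5 else "deny"
    confidence = max(p_approve, 1 - p_approve)
    if high_impact or confidence < threshold:
        return Routing("human_review", confidence, automated=False)
    return Routing(predicted, confidence, automated=True)


for p, stakes in [(0.97, False), (0.03, False), (0.6, False), (0.99, True)]:
    print(p, stakes, route(p, high_impact=stakes))
```

Logging the routing result alongside the final decision also gives a record of where AI was used, which supports the transparency point above.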

Mitigation Strategies Already Under Way

Regulation

- EU AI Act (the most comprehensive so far; entered into force in August 2024, with obligations phasing in through 2027)

- US sector-specific rules (piecemeal)

- China's content control framework

Industry Self-Regulation

- AI ethics boards (companies reviewing systems)

- Transparency reports (companies disclosing)

- Bias auditing (companies testing)

Research Into Safety

- Adversarial robustness

- Interpretability (understanding why AI decides)

- Alignment (making AI values match human values)
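Interpretability is hardest for deep networks, but the goal is easiest to see on a linear model, where an exact explanation exists: each feature contributes its weight times how far the input sits from a reference point, and those contributions add up to the whole change in score. The sketch below uses hypothetical credit-scoring features, weights, and baseline; for non-linear models, attribution methods such as SHAP or integrated gradients approximate the same kind of breakdown.

```python
def score(weights, bias, x):
    """Linear score w . x + b over named features."""
    return bias + sum(weights[name] * x[name] for name in weights)


def contributions(weights, x, baseline):
    """Exact per-feature attribution for a linear model.

    contribution_i = w_i * (x_i - baseline_i); the values sum exactly to
    score(x) - score(baseline), so no part of the decision is unexplained.
    """
    return {name: weights[name] * (x[name] - baseline[name]) for name in weights}


# Hypothetical credit model, applicant, and "average applicant" baseline.
weights = {"income": 0.5, "debt_ratio": -2.0, "late_payments": -1.0}
bias = 0.1
applicant = {"income": 3.0, "debt_ratio": 0.5, "late_payments": 2}
baseline = {"income": 2.0, "debt_ratio": 0.3, "late_payments": 0}

parts = contributions(weights, applicant, baseline)
for name, value in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>14}: {value:+.2f}")
```

Here two late payments account for most of the gap between this applicant and the baseline, which is the kind of concrete reason an affected person could understand and act on.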

Public Advocacy

- Open letter campaigns

- Nonprofit pressure

- Academic research on harms

What You Can Do

As an Individual

- Be skeptical of AI claims

- Understand tools you use

- Protect your data privacy

- Stay informed about AI risks

As an Employee or Employer

- Demand ethical AI practices

- Audit your own systems for bias

- Maintain human oversight

- Support responsible AI

As a Citizen

- Vote for AI regulation

- Support advocacy organizations

- Stay informed on policy

- Hold companies and governments accountable

Conclusion: Risks Are Real But Manageable

AI risks are serious, but the harms they describe are not inevitable. With proper governance, safeguards, and societal commitment, we can build AI systems that benefit everyone while minimizing harms.

The question isn't whether to adopt AI. It's how to adopt it responsibly. Explore more on AI ethics and governance at TrendFlash.

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

AI in Finance: How Agents & Generative Tools Are Transforming Risk, Trading & Compliance

The financial sector is being reshaped by autonomous AI agents and generative tools. Discover how these technologies are creating a competitive edge through sophisticated risk modeling, automated compliance, and adaptive trading strategies.

AI in Finance 2025: From Risk Models to Autonomous Trading

Discover how artificial intelligence is transforming financial services in 2025, moving beyond traditional models to power autonomous systems that optimize trading, enhance security, and navigate complex regulatory demands.

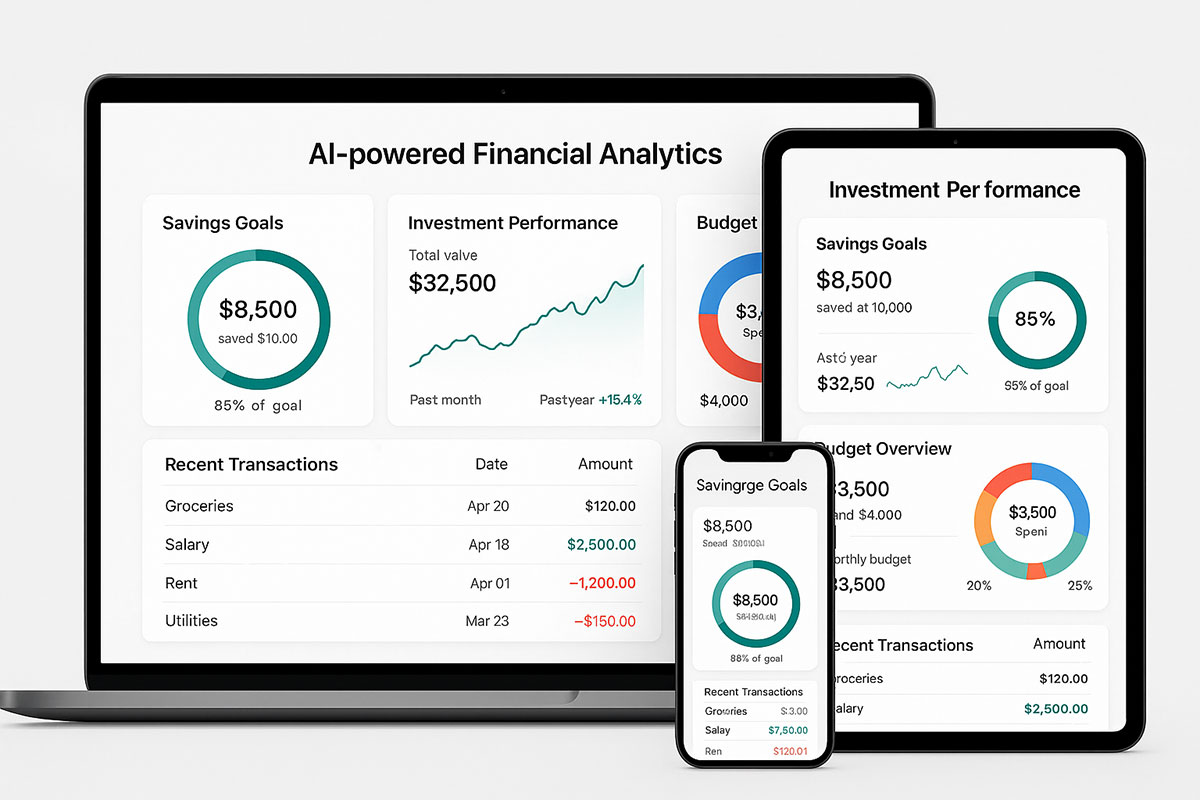

AI-Powered Personal Finance: How Algorithms are Managing Money in 2025

AI financial assistants now manage billions in personal assets. These smart systems optimize spending, automate investing, and prevent financial mistakes in real-time.