Beyond Sight: How Robots Use AI to Understand the World

Robots in 2025 don’t just see—they understand. With AI vision, they interpret complex environments, navigate uncertainty, and collaborate with humans safely.

TrendFlash

Introduction: From Seeing to Understanding

For decades, robots were blind. In the 1960s and 1970s, researchers invested enormous effort teaching robots to see, treating visual perception as the primary challenge. Today, the challenge has fundamentally shifted. Robots can see—now they must understand what they see.

The gap between perception and understanding is vast. A camera can capture images. But understanding requires combining vision with reasoning, knowledge, and context. In 2025, this capability is advancing rapidly, enabling robots to interpret complex environments and adapt intelligently.

From Cameras to Comprehension

The Three Levels of Machine Vision

Level 1 - Raw Perception: Cameras capture pixels. Early computer vision stopped here, treating image processing as the endpoint.

Level 2 - Recognition: Modern systems identify objects, classify scenes, detect humans. This is where most current robotics applications operate.

Level 3 - Understanding: Comprehending not just what is present, but relationships, intentions, consequences, and context. This is the frontier.

What Understanding Requires

Robots reaching Level 3 must:

- Classify objects and recognize patterns

- Estimate 3D position, orientation, and dynamics

- Recognize human gestures and infer intent

- Build semantic maps of entire environments

- Reason about physical consequences of actions

- Understand social and cultural contexts

- Learn from limited examples

- Adapt to novel situations

Achieving this across diverse domains is an open challenge.

Technologies Enabling Environmental Understanding

Deep Learning Vision Models

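CNN Evolution: Convolutional neural networks (CNNs) have evolved from basic image classifiers into systems that perform multiple tasks simultaneously, for example detecting objects while segmenting the scene. ResNet, EfficientNet, and more specialized architectures each address different vision challenges.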

Vision Transformers: Increasingly popular because self-attention lets every image patch attend to every other patch. This global context lets robots relate scene elements that are far apart in the image.
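To make "global context" concrete, here is a minimal sketch of single-head scaled dot-product self-attention over a handful of toy patch embeddings. It assumes identity projections (the embeddings serve directly as queries, keys, and values) and omits positional encodings, multiple heads, and learned weights, all of which a real Vision Transformer has. The point is that each patch's output is a weighted mix of all patches, including distant ones.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(patches):
    """Single-head scaled dot-product self-attention.

    Simplification (assumption): queries, keys, and values are the raw
    embeddings; a real ViT learns separate projection matrices for each.
    Returns (outputs, weights) where weights[i][j] is how strongly
    patch i attends to patch j.
    """
    d = len(patches[0])
    scale = 1.0 / math.sqrt(d)
    outputs, weights = [], []
    for q in patches:
        # Similarity of this patch (query) to every patch (keys), scaled by 1/sqrt(d)
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in patches]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all value vectors
        out = [sum(w[j] * patches[j][c] for j in range(len(patches))) for c in range(d)]
        outputs.append(out)
    return outputs, weights


# Toy embeddings: patches 0 and 3 could sit at opposite corners of the image
# but share similar content (e.g., two ends of the same table).
patches = [
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.9, 0.1],
    [0.9, 0.0, 1.0],
]
outputs, weights = self_attention(patches)
for i, row in enumerate(weights):
    print(i, [round(x, 2) for x in row])
```

Patch 0 puts more weight on the distant but similar patch 3 than on its unrelated neighbors, which a small convolutional kernel would not see in a single layer.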

Multimodal Learning: Combining RGB cameras with depth, thermal, and other sensors into unified models that understand environments more richly.

3D Scene Understanding

Robots need 3D models of environments, not just 2D images. Technologies enabling this:

- Depth Estimation: Inferring 3D structure from single or multiple images

- Semantic 3D Mapping: Building 3D maps where each region is labeled by type (wall, floor, furniture, human)

- Point Clouds: Dense 3D representations of environments from lidar or photogrammetry

- Scene Graphs: Representing relationships between objects
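Depth estimation, point clouds, and semantic 3D mapping connect through the standard pinhole camera model: a pixel (u, v) with depth Z back-projects to x = (u - cx)·Z/fx, y = (v - cy)·Z/fy. The sketch below assumes known camera intrinsics (fx, fy, cx, cy), per-pixel labels from some 2D segmenter, and a fixed voxel size; the majority-vote fusion is one simple choice for illustration, not how any particular mapping system works (many use probabilistic fusion instead).

```python
import math
from collections import Counter, defaultdict


def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with metric depth into camera-frame (x, y, z)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def build_semantic_voxel_map(labeled_pixels, intrinsics, voxel_size=0.25):
    """Fuse labeled depth pixels into a voxel grid.

    labeled_pixels: iterable of (u, v, depth_m, label), e.g. from a depth
    sensor plus a 2D semantic segmenter (assumed inputs).
    Returns {voxel_index: majority_label}.
    """
    fx, fy, cx, cy = intrinsics
    votes = defaultdict(Counter)
    for u, v, depth, label in labeled_pixels:
        if depth <= 0:  # skip invalid depth readings
            continue
        x, y, z = back_project(u, v, depth, fx, fy, cx, cy)
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        votes[key][label] += 1
    # Each voxel takes the label most pixels voted for
    return {key: counter.most_common(1)[0][0] for key, counter in votes.items()}


intrinsics = (500.0, 500.0, 320.0, 240.0)  # hypothetical fx, fy, cx, cy
pixels = [
    (320, 240, 2.0, "chair"),
    (330, 240, 2.0, "chair"),
    (325, 245, 2.0, "floor"),  # outvoted in the same voxel
    (570, 240, 2.0, "wall"),
    (100, 100, 0.0, "wall"),   # invalid depth, ignored
]
semantic_map = build_semantic_voxel_map(pixels, intrinsics)
print(semantic_map)
```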

Temporal Understanding

Static scene understanding is insufficient. Robots must understand dynamics:

- How objects move

- Human activity trajectories

- Consequence prediction ("if I push this, what happens?")

- Change detection

Video understanding models, including RNN-based networks that carry information from one frame to the next, enable this temporal reasoning.
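A common reference point for "how objects move" and "human activity trajectories" is the constant-velocity model: estimate velocity from the last two observations and extrapolate. Learned temporal models are typically judged by how much they improve on this kind of baseline. The sketch assumes positions sampled at a fixed interval `dt` in a 2D ground-plane frame; the function name is illustrative.

```python
def predict_constant_velocity(track, dt, horizon_steps):
    """Extrapolate a 2D track assuming constant velocity.

    track: list of (x, y) positions sampled every dt seconds, oldest first.
    Returns the predicted (x, y) for each of the next horizon_steps samples.
    """
    if len(track) < 2:
        raise ValueError("need at least two observations to estimate velocity")
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt  # velocity from the last two samples
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, horizon_steps + 1)]


# A pedestrian observed every 0.5 s, starting to veer to one side
track = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.25)]
print(predict_constant_velocity(track, dt=0.5, horizon_steps=3))
# [(1.5, 0.5), (2.0, 0.75), (2.5, 1.0)]
```

The baseline cannot anticipate turns, stops, or interactions with other people, which is exactly the gap learned temporal models try to close.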

Knowledge Integration

Raw perception is limited without knowledge:

- Physics Knowledge: Understanding gravity, friction, collision

- Object Knowledge: Properties and typical use of objects

- Social Knowledge: Norms, safety, human preferences

- Causal Knowledge: Why things happen and what causes what

Modern systems increasingly incorporate knowledge through hybrid approaches combining learning with symbolic reasoning.
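One way to picture a hybrid approach is to let a learned perception model supply estimates (material, supporting surface, mass) and let an explicit physics rule answer "if I push this, what happens?". The sketch below uses the textbook static-friction condition: an object starts sliding when the horizontal push exceeds μ·m·g. The friction table, field names, and function name are hypothetical placeholders, and the rule ignores toppling, rolling, and uneven surfaces.

```python
G = 9.81  # gravitational acceleration, m/s^2

# Placeholder static-friction coefficients by (object material, surface material).
# Illustrative values for this sketch only, not measured data.
STATIC_FRICTION = {
    ("wood", "wood"): 0.5,
    ("ceramic", "wood"): 0.4,
    ("rubber", "wood"): 0.8,
}


def predict_push_outcome(perceived, push_force_n):
    """Apply a symbolic physics rule to (assumed) outputs of a vision model.

    perceived: dict with 'material', 'surface', and 'mass_kg'.
    Returns 'slides', 'stays', or 'unknown' when the knowledge base has no entry.
    """
    mu = STATIC_FRICTION.get((perceived["material"], perceived["surface"]))
    if mu is None:
        return "unknown"  # no knowledge: defer rather than guess
    max_static_friction = mu * perceived["mass_kg"] * G
    return "slides" if push_force_n > max_static_friction else "stays"


cup = {"material": "ceramic", "surface": "wood", "mass_kg": 0.3}
# Threshold here is 0.4 * 0.3 * 9.81, about 1.18 N
print(predict_push_outcome(cup, push_force_n=2.0))  # slides
print(predict_push_outcome(cup, push_force_n=0.5))  # stays
```

Returning "unknown" instead of guessing is one design choice that keeps the symbolic part honest about the limits of its knowledge.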

Key Technologies for Robot Understanding

Semantic Segmentation

Pixel-level scene understanding in which every pixel is assigned a category (person, wall, furniture, floor). Modern systems report 85%+ accuracy on complex indoor scenes, though the exact figure depends on the metric and benchmark used. This enables rich environmental understanding.
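A headline segmentation score depends heavily on the metric. The sketch below compares pixel accuracy with per-class intersection-over-union (IoU) on a tiny hand-made label map; the data is invented for illustration. Because large classes like floor dominate pixel counts, a model can score well on pixel accuracy while completely missing a small object such as a chair.

```python
def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    total = correct = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            total += 1
            correct += (p == t)
    return correct / total


def per_class_iou(pred, truth):
    """IoU = |pred AND truth| / |pred OR truth| for every class present in either map."""
    classes = {c for row in truth for c in row} | {c for row in pred for c in row}
    ious = {}
    for c in classes:
        inter = union = 0
        for prow, trow in zip(pred, truth):
            for p, t in zip(prow, trow):
                inter += (p == c and t == c)
                union += (p == c or t == c)
        ious[c] = inter / union
    return ious


truth = [["wall", "wall", "wall"],
         ["floor", "chair", "floor"],
         ["floor", "floor", "floor"]]
pred = [["wall", "wall", "floor"],
        ["floor", "floor", "floor"],
        ["floor", "floor", "floor"]]

acc = pixel_accuracy(pred, truth)
ious = per_class_iou(pred, truth)
mean_iou = sum(ious.values()) / len(ious)
print(f"pixel accuracy {acc:.2f}, mean IoU {mean_iou:.2f}", ious)
```

Here pixel accuracy is 7/9 (about 0.78) while the chair's IoU is 0, pulling mean IoU down to about 0.46.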

Panoptic Segmentation

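Advanced systems perform semantic segmentation (a category for every pixel) and instance segmentation (separating individual objects) simultaneously. Each pixel receives a class label and, for countable objects such as people or chairs, an instance identity, providing detailed scene comprehension.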
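A panoptic result can be stored as one integer per pixel that encodes both class and instance. The sketch assumes the encoding `panoptic_id = class_id * OFFSET + instance_id` with OFFSET = 1000 (a convention some datasets use, taken here as an assumption), a hypothetical class table, and instance id 0 for uncountable "stuff" regions like floor and wall.

```python
OFFSET = 1000  # assumed encoding: panoptic_id = class_id * OFFSET + instance_id

# Hypothetical class table: 0 = floor, 1 = wall (stuff), 2 = person (thing)
THING_CLASSES = {2}


def to_panoptic(semantic, instance):
    """Merge a semantic map and an instance map into panoptic ids.

    semantic[r][c]: class id per pixel.
    instance[r][c]: instance index per pixel (only used for thing classes).
    Stuff pixels always get instance id 0, so each stuff class forms one segment.
    """
    panoptic = []
    for srow, irow in zip(semantic, instance):
        out = []
        for cls, inst in zip(srow, irow):
            inst_id = inst if cls in THING_CLASSES else 0
            out.append(cls * OFFSET + inst_id)
        panoptic.append(out)
    return panoptic


def decode(panoptic_id):
    """Recover (class_id, instance_id) from a panoptic id."""
    return divmod(panoptic_id, OFFSET)


semantic = [[1, 1, 1, 1],
            [2, 2, 0, 2],
            [0, 0, 0, 2]]
instance = [[0, 0, 0, 0],
            [1, 1, 0, 2],
            [0, 0, 0, 2]]
panoptic = to_panoptic(semantic, instance)
for row in panoptic:
    print(row)
```

Two people standing on the same floor come out as separate segments (2001 and 2002), while all floor pixels share one segment.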

Object Relationship Understanding

Robots must understand not just what objects exist, but their relationships:

- "Person is sitting on chair"

- "Cup is on table"

- "Hand is grasping object"

Graph neural networks and relational reasoning models enable this.
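Whatever model predicts them, relationships like these are often stored as a scene graph of (subject, relation, object) triples. In place of a learned relational model, the sketch below uses a hand-written geometric heuristic for "on" over 2D bounding boxes, purely to show the data structure. The `is_on` rule, its pixel tolerance, and the detections are hypothetical, and the rule ignores depth, so it would be fooled by objects that merely overlap in the image.

```python
from typing import NamedTuple


class Box(NamedTuple):
    x1: float  # left
    y1: float  # top (image y grows downward)
    x2: float  # right
    y2: float  # bottom


def horizontal_overlap(a, b):
    """Width of the horizontal overlap between two boxes (0 if disjoint)."""
    return max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))


def is_on(a, b, tol=10.0):
    """Heuristic stand-in for a learned relation classifier: a rests on b if they
    overlap horizontally and a's bottom edge is within tol pixels of b's top edge."""
    return horizontal_overlap(a, b) > 0 and abs(a.y2 - b.y1) <= tol


def build_scene_graph(detections):
    """detections: dict of name -> Box. Returns (subject, relation, object) triples."""
    triples = []
    for s, sbox in detections.items():
        for o, obox in detections.items():
            if s != o and is_on(sbox, obox):
                triples.append((s, "on", o))
    return triples


detections = {
    "cup": Box(120, 80, 150, 120),
    "table": Box(50, 118, 300, 250),
    "lamp": Box(400, 20, 440, 200),
}
print(build_scene_graph(detections))  # [('cup', 'on', 'table')]
```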

Action Affordance Understanding

Objects afford different actions. A chair is for sitting, a cup for drinking. Robots learning affordances can interact with novel objects intelligently, inferring appropriate interactions from learned patterns.
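One simple way to infer affordances for a novel object from learned patterns is nearest-neighbor transfer: describe objects by feature vectors and let a new object inherit the affordances of the most similar known one. The feature dimensions, objects, and affordance labels below are hypothetical and hand-made; a real system would use learned embeddings rather than four hand-picked attributes.

```python
import math

# Hypothetical features: [flat_top, seat_height_fit, concavity, graspable_size]
KNOWN_OBJECTS = {
    "chair": ([1.0, 1.0, 0.0, 0.0], {"sit"}),
    "cup": ([0.0, 0.0, 1.0, 1.0], {"grasp", "pour_into", "drink_from"}),
    "table": ([1.0, 0.2, 0.0, 0.0], {"place_on"}),
}


def infer_affordances(features):
    """Return (nearest known object, its affordances) by Euclidean distance."""
    best_name, best_dist = None, math.inf
    for name, (ref, _) in KNOWN_OBJECTS.items():
        d = math.dist(features, ref)  # requires Python 3.8+
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name, KNOWN_OBJECTS[best_name][1]


# A stool the robot has never seen: flat top at roughly seat height
print(infer_affordances([1.0, 0.9, 0.0, 0.0]))
# A bowl: concave and hand-sized
print(infer_affordances([0.0, 0.0, 0.9, 0.8]))
```

Nearest-neighbor transfer always returns some answer, so in practice a distance threshold for "too unlike anything known" would be a sensible addition.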

Real-World Applications: Robots That Understand

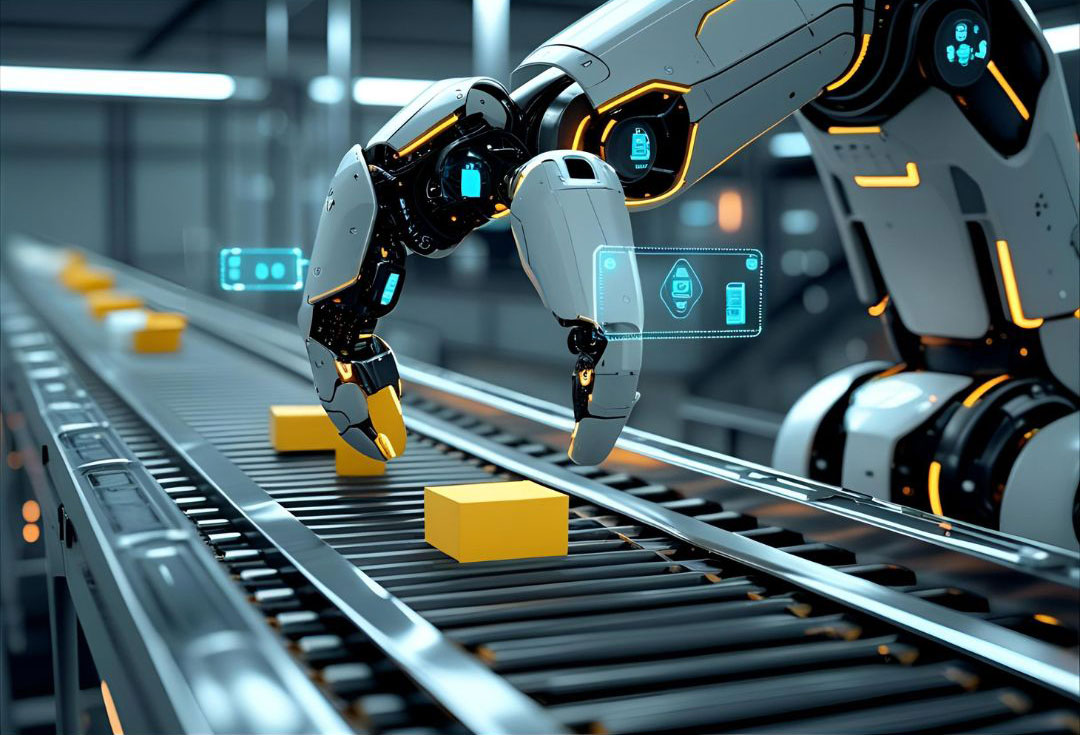

Collaborative Manufacturing

Robots working alongside humans must understand human intentions from gestures, maintain awareness of human safety needs, and communicate intentions clearly.
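One concrete form of safety awareness in collaborative cells is speed and separation monitoring: the robot slows as a person approaches and stops inside a protective distance. The sketch maps measured human-robot separation to a fraction of nominal speed with a linear ramp. The thresholds are hypothetical placeholders, not values from any safety standard; real systems derive them from stopping times, sensor latency, and risk assessment.

```python
def speed_scale(separation_m, stop_dist=0.5, slow_dist=1.5):
    """Fraction of nominal speed allowed for a given human-robot separation.

    Full stop at or inside stop_dist, full speed at or beyond slow_dist,
    linear ramp in between. Thresholds are illustrative assumptions.
    """
    if stop_dist >= slow_dist:
        raise ValueError("stop_dist must be smaller than slow_dist")
    if separation_m <= stop_dist:
        return 0.0
    if separation_m >= slow_dist:
        return 1.0
    return (separation_m - stop_dist) / (slow_dist - stop_dist)


for d in (0.3, 1.0, 2.0):
    print(f"separation {d} m -> {speed_scale(d):.2f} of nominal speed")
```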

Assistive Robotics

Robots assisting elderly or disabled individuals must understand needs, preferences, and safety requirements. This requires rich environmental understanding combined with social intelligence.

Autonomous Delivery and Navigation

Autonomous systems navigating public spaces must understand complex scenes: distinguish humans from objects, understand traffic patterns, predict pedestrian behavior.
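Predicting pedestrian behavior feeds directly into collision checks. A common first approximation is the closest point of approach: assuming both the robot and the pedestrian keep their current velocities, when will they be nearest, and how close will they get? The sketch below computes this in a 2D ground-plane frame; the constant-velocity assumption is the main simplification.

```python
import math


def closest_approach(p_robot, v_robot, p_ped, v_ped):
    """Time (s, >= 0) and distance (m) of closest approach under constant velocities."""
    rx, ry = p_ped[0] - p_robot[0], p_ped[1] - p_robot[1]  # relative position
    vx, vy = v_ped[0] - v_robot[0], v_ped[1] - v_robot[1]  # relative velocity
    vv = vx * vx + vy * vy
    # Minimize |r + v t|^2 over t >= 0; if relative velocity is zero, distance is constant
    t = 0.0 if vv == 0 else max(0.0, -(rx * vx + ry * vy) / vv)
    dx, dy = rx + vx * t, ry + vy * t
    return t, math.hypot(dx, dy)


# Robot heading along +x at 1 m/s; pedestrian crossing toward its path at 1 m/s
t, d = closest_approach((0.0, 0.0), (1.0, 0.0), (4.0, -2.0), (0.0, 1.0))
print(f"closest approach in {t:.1f} s at {d:.2f} m")
```

A planner might slow down or replan whenever the predicted distance falls below a chosen safety margin within some time horizon.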

Search and Rescue

Robots searching disaster zones must understand damaged environments, locate victims, assess stability—all while operating in unpredictable conditions.

Scientific Research

Robots exploring caves, deep ocean, or extraterrestrial environments must understand novel, complex scenes and gather scientifically meaningful data.

Challenges on the Path to True Understanding

Generalization

Systems trained in specific environments often fail dramatically in new contexts. Achieving robust generalization remains elusive.

Long-Tail Events

Robots must handle rare situations gracefully. This requires either massive training data or sophisticated reasoning capabilities.
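A simple way to handle uncertain inputs gracefully is to let the robot defer when its classifier is not confident, a baseline often called maximum softmax probability thresholding. The sketch below assumes raw logits from some classifier; the labels and the 0.7 threshold are hypothetical. Neural networks can be confidently wrong on unfamiliar inputs, so this is a weak signal for genuinely novel events rather than a complete solution.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def classify_or_defer(logits, labels, threshold=0.7):
    """Return (label, confidence), or ('defer', confidence) below the threshold.

    On 'defer', a robot might slow down, gather another view, or ask a human.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "defer", probs[best]
    return labels[best], probs[best]


labels = ["pedestrian", "cyclist", "traffic_cone"]
print(classify_or_defer([4.0, 0.5, 0.2], labels)[0])  # pedestrian
print(classify_or_defer([1.0, 0.9, 0.8], labels)[0])  # defer
```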

Common Sense Knowledge

Humans understand physics, causality, and consequences intuitively. Encoding this knowledge into machines remains challenging.

Explainability

When robots make safety-critical decisions, understanding their reasoning becomes essential. Deep neural networks are notoriously opaque.

Real-Time Constraints

Robots often must make decisions within milliseconds, which limits the computation available for sophisticated reasoning.
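One pattern for reasoning under a hard time limit is an anytime algorithm: start from a safe fallback, keep evaluating better options while time remains, and return the best found when the deadline hits. The sketch assumes a finite list of candidate plans and a caller-supplied cost function; both are placeholders, and a real controller would also bound the cost of each individual evaluation.

```python
import time


def anytime_plan(candidates, cost, budget_s, fallback):
    """Evaluate candidates until the time budget expires, keeping the cheapest so far.

    Always returns a usable plan: the fallback (e.g., 'stop') if nothing better
    was evaluated in time. Returns (best_plan, number_of_candidates_evaluated).
    """
    deadline = time.monotonic() + budget_s
    best, best_cost = fallback, cost(fallback)
    evaluated = 0
    for plan in candidates:
        if time.monotonic() >= deadline:
            break  # out of time: commit to the best plan found so far
        c = cost(plan)
        evaluated += 1
        if c < best_cost:
            best, best_cost = plan, c
    return best, evaluated


# Toy example: plans are numbers and cost is the number itself
print(anytime_plan([3, 1, 2], cost=lambda p: p, budget_s=1.0, fallback=10))
print(anytime_plan([3, 1, 2], cost=lambda p: p, budget_s=0.0, fallback=10))
```

With a zero budget the function still answers immediately with the fallback, which is the property that matters when a decision is due in milliseconds.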

The Future: From Understanding to Wisdom

Continual Learning

Robots improving through deployment experience, adapting to new environments and situations continuously.

Transfer Learning

Learning in one domain and applying to novel domains with minimal retraining.

Causal Reasoning

Moving beyond correlation to causal understanding, enabling robots to predict consequences and reason about interventions.

Human-Robot Collaboration

Robots as partners understanding human intentions, preferences, and constraints, working seamlessly alongside humans.

Conclusion: Understanding as Foundation

Raw perception is just the beginning. True robotic intelligence requires understanding: comprehending environments, inferring human intentions, reasoning about consequences, and adapting to novel situations. The gap between current capabilities and true understanding remains substantial, but progress is accelerating.

Organizations leading robotics will be those that invest in understanding, not just perception. Robots that see are useful. Robots that understand will be transformative.

Explore further: Computer Vision in Robotics, Learning Robotics, Complete Robotics Guide.

Tags

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

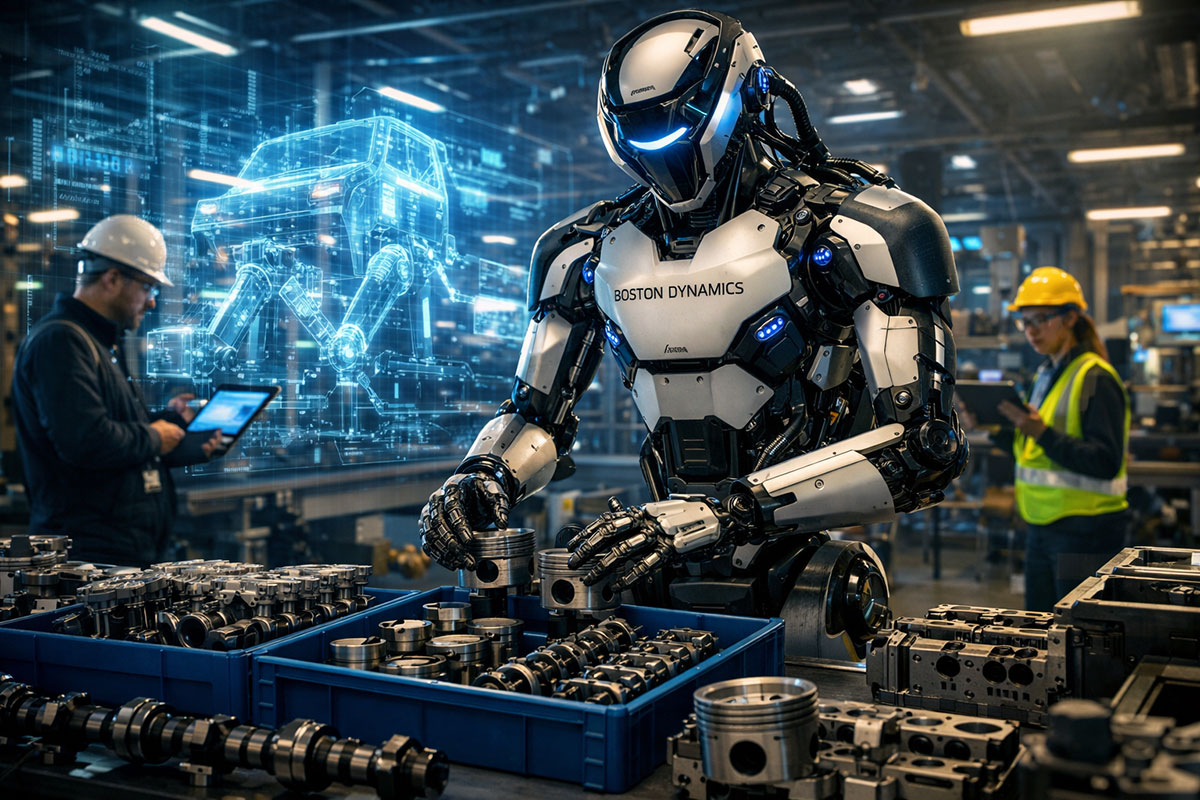

Physical AI Hits the Factory Floor: Beyond the Humanoid Hype

Forget the viral dance videos. In early 2026, Physical AI moved from the lab to the assembly line. With the electric Boston Dynamics Atlas beginning field tests at Hyundai, we explore the "Vision-Language-Action" models and digital twins turning humanoid hype into industrial reality.

Boston Dynamics' Atlas at Hyundai: The Humanoid Robot Era Begins

The humanoid robot era isn't coming—it's here. Boston Dynamics' Atlas just walked onto a real factory floor at Hyundai's Georgia facility, marking the first commercial deployment of a humanoid robot in manufacturing. With 99.8% reliability, superhuman flexibility, and the ability to learn new tasks in a single day, Atlas represents a fundamental shift in how factories operate. But here's what nobody's talking about: this isn't about replacing workers. It's about redefining what manufacturing jobs look like.

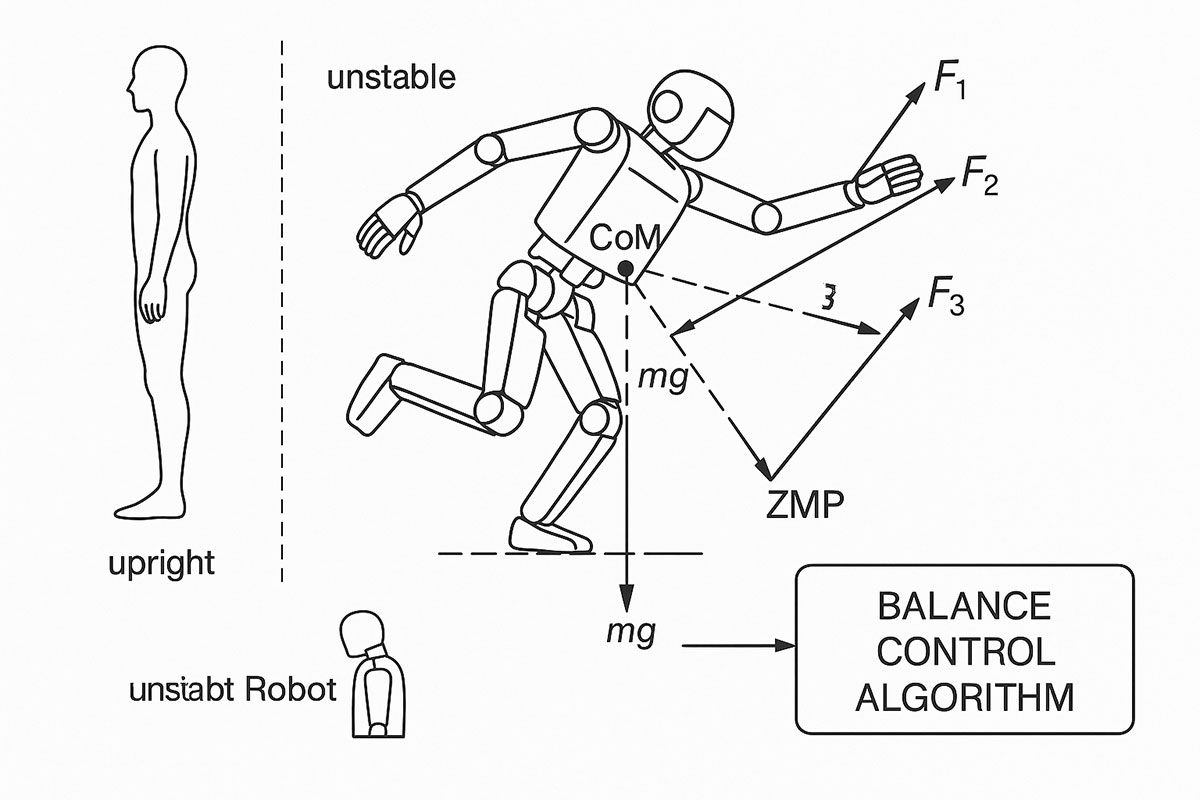

Russia's First AI Humanoid Robot Falls on Stage: The AIdol Disaster & What It Teaches About AI Robotics (November 2025)

On November 10, 2025, Russia's first humanoid AI robot, AIdol, became an instant internet sensation for all the wrong reasons—it collapsed face-first on its debut stage. While the viral video sparked laughter and memes online, the incident reveals profound truths about why humanoid robotics remains one of AI's greatest challenges.