Autonomous Vehicles in 2025: How Computer Vision Is Driving Safer Roads

Computer vision is steering the future of transport in 2025. Here’s how autonomous cars are using AI to make driving safer and smarter.

TrendFlash

Introduction: Can AI Be Your Therapist?

Depression, anxiety, and other mental illnesses are at crisis levels. Therapists are scarce and expensive. AI is emerging as a form of mental health support, but with important limitations. This guide explores what AI can and can't do for mental health.

What AI Can Do for Mental Health

1. Accessibility

The Problem: Mental health crisis, therapy shortage, high costs ($100-300/session)

AI Solution: Available 24/7, free or cheap ($0-15/month), no appointment waiting

Real Impact: Reaches people who wouldn't otherwise seek help

2. Self-Awareness

What AI does:

- Listens without judgment

- Asks clarifying questions

- Reflects back patterns

- Helps understand triggers

Example: A user describes their anxiety to a chatbot, and the AI helps identify a recurring pattern

3. Coping Strategies

What AI teaches:

- Breathing exercises

- Cognitive behavioral therapy (CBT) techniques

- Meditation and mindfulness

- Grounding techniques

Real tools: Woebot (AI therapy), Replika (AI companion), CBT chatbots

4. Crisis Support

What AI does: Provides immediate support when a human isn't available

Limitations: Can't do more than help stabilize until human help is available

5. Mental Health Monitoring

What AI does: Tracks mood, sleep, anxiety patterns over time

Insight: Helps identify what helps/hurts (personalization)
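
As a rough illustration of the kind of tracking described above, here is a minimal sketch in Python. It assumes a user logs a daily mood score and hours of sleep; the `DailyEntry` fields, the 1-10 mood scale, and the 7-hour threshold are all hypothetical choices for this example and do not describe how any particular app works.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class DailyEntry:
    day: str            # ISO date, e.g. "2025-03-01" (hypothetical format)
    mood: int           # self-reported mood, 1 (low) to 10 (high)
    sleep_hours: float  # hours slept the previous night


def mood_by_sleep(entries, threshold_hours=7.0):
    """Return (average mood on well-rested days, average mood on short-sleep days)."""
    rested = [e.mood for e in entries if e.sleep_hours >= threshold_hours]
    short = [e.mood for e in entries if e.sleep_hours < threshold_hours]
    # None signals "not enough data" rather than a misleading zero.
    return (
        mean(rested) if rested else None,
        mean(short) if short else None,
    )


# Made-up sample data, for illustration only.
entries = [
    DailyEntry("2025-03-01", 4, 5.5),
    DailyEntry("2025-03-02", 7, 8.0),
    DailyEntry("2025-03-03", 6, 7.5),
    DailyEntry("2025-03-04", 3, 5.0),
]

rested_avg, short_avg = mood_by_sleep(entries)
print(f"Average mood after 7+ hours of sleep: {rested_avg}")
print(f"Average mood after less sleep: {short_avg}")
```

A gap between the two averages is only a hint that sleep and mood move together for this user. It is not a diagnosis, and a handful of entries is far too little data to draw conclusions from.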

The Limitations (Critical)

Limitation 1: AI Can't Diagnose

Reality: Only licensed professionals can diagnose mental illness

Danger: Self-diagnosis through AI can be wrong, and a wrong self-diagnosis can be harmful

Important: AI should recommend professional evaluation, not replace it

Limitation 2: AI Lacks True Empathy

Reality: AI can't truly understand human suffering

What happens: Responses feel helpful but are ultimately generic

Missing: Real human connection, genuine understanding

Limitation 3: No Crisis Intervention

Risk: Suicidal crisis requires immediate human intervention

AI can: Recognize crisis, suggest resources, but can't physically help

Danger: Over-reliance on AI for serious crisis

Limitation 4: Privacy Concerns

Risk: Sharing mental health details with an AI tool may create a permanent record held by the company behind it

Danger: Data breaches expose sensitive information

Unknown: How companies use/sell mental health data

Limitation 5: No Ongoing Relationship

Reality: Many chatbots start each conversation fresh, with little or no memory of previous sessions

Human therapy: Continuity of care, relationship building over time

Impact: Limits therapeutic progress

Limitation 6: Potential for Harm

Risks:

- AI misinterpreting needs, giving bad advice

- Over-reliance preventing professional help-seeking

- Addiction to AI companion (parasocial relationship)

- Reinforcement of problematic thinking

The Research Reality

What Studies Show

- Woebot: Some studies show modest benefit for anxiety/depression

- CBT chatbots: Effective for mild anxiety (comparable to minimal therapy)

- Overall: AI helpful as supplement, not replacement

The Important Caveat

Short-term: Users report feeling supported

Long-term: Limited data (most studies < 12 months)

Unknown: Whether AI mental health support creates dependency or becomes a barrier to professional therapy

When AI Is Appropriate

Good Uses

- Mild anxiety/stress (situational, temporary)

- Sleep problems (relaxation, meditation)

- Daily coping strategies

- Supplement to human therapy

- Access for people who can't afford therapy

- Crisis stabilization until professional help is available

Not Appropriate

- Serious mental illness (bipolar, schizophrenia, severe depression)

- Active suicidal ideation (needs emergency intervention)

- Trauma (requires specialized therapy)

- Psychosis or delusions

- Substance abuse

The Ethical Framework

Transparency

AI should clearly state: "I'm not a therapist. For serious issues, seek professional help."

Safety Guardrails

AI should recognize crisis and escalate to emergency services
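
The Transparency and Safety Guardrails principles above can be sketched as a thin wrapper around a chatbot's reply function. This is a simplified illustration under stated assumptions, not a production safety system: `respond`, `naive_crisis_check`, and the two-item phrase list are hypothetical names and placeholders invented for this example, and real crisis detection needs far more than substring matching, ideally with clinical input.

```python
from typing import Callable

DISCLAIMER = "I'm not a therapist. For serious issues, seek professional help."
ESCALATION = (
    "It sounds like you may be in crisis. Please contact your local "
    "emergency services or a crisis line right now."
)

# Hypothetical, deliberately tiny phrase list, for illustration only.
ILLUSTRATIVE_PHRASES = ("hurt myself", "end my life")


def naive_crisis_check(message: str) -> bool:
    """Placeholder detector: case-insensitive substring match."""
    text = message.lower()
    return any(phrase in text for phrase in ILLUSTRATIVE_PHRASES)


def respond(
    message: str,
    generate_reply: Callable[[str], str],
    is_crisis: Callable[[str], bool] = naive_crisis_check,
    first_turn: bool = False,
) -> str:
    """Apply the guardrail before the chatbot's normal reply is produced."""
    # Safety guardrail: if a crisis is detected, skip normal generation.
    if is_crisis(message):
        return ESCALATION
    reply = generate_reply(message)
    # Transparency: state the tool's limits at the start of a conversation.
    return f"{DISCLAIMER}\n\n{reply}" if first_turn else reply


# Usage with a stand-in reply function.
print(respond("I feel stressed about work", lambda m: "Let's try a breathing exercise.", first_turn=True))
```

Passing the detector in as a parameter is a design choice for this sketch: it keeps the escalation path separate from whatever detection method is used, so a stronger detector can replace the placeholder without touching the response logic.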

Privacy Protection

Mental health data should be strongly protected, with user control

Evidence-Based

AI mental health tools should be studied and validated before wide deployment

The Future

2025-2026: Growth with Caution

- More AI mental health apps launching

- Some regulation emerging

- Questions about effectiveness increasing

2027+: Appropriate Positioning

- AI positioned as supplement, not replacement

- Clear scope of practice (what it can/can't do)

- Integration with human therapists (hybrid model)

Conclusion: Helpful, But Not a Replacement

AI can help with mental health through increased access, immediate support, and helpful tools. But it can't replace human therapists. If you're struggling, AI is a good start, but please see a human professional for serious issues.

Explore more on mental health and technology at TrendFlash.

Share this post

Categories

Recent Posts

Opening the Black Box: AI's New Mandate in Science

AI as Lead Scientist: The Hunt for Breakthroughs in 2026

Measuring the AI Economy: Dashboards Replace Guesswork in 2026

Your New Teammate: How Agentic AI is Redefining Every Job in 2026

Related Posts

Continue reading more about AI and machine learning

Physical AI Hits the Factory Floor: Beyond the Humanoid Hype

Forget the viral dance videos. In early 2026, Physical AI moved from the lab to the assembly line. With the electric Boston Dynamics Atlas beginning field tests at Hyundai, we explore the "Vision-Language-Action" models and digital twins turning humanoid hype into industrial reality.

Boston Dynamics' Atlas at Hyundai: The Humanoid Robot Era Begins

The humanoid robot era isn't coming—it's here. Boston Dynamics' Atlas just walked onto a real factory floor at Hyundai's Georgia facility, marking the first commercial deployment of a humanoid robot in manufacturing. With 99.8% reliability, superhuman flexibility, and the ability to learn new tasks in a single day, Atlas represents a fundamental shift in how factories operate. But here's what nobody's talking about: this isn't about replacing workers. It's about redefining what manufacturing jobs look like.

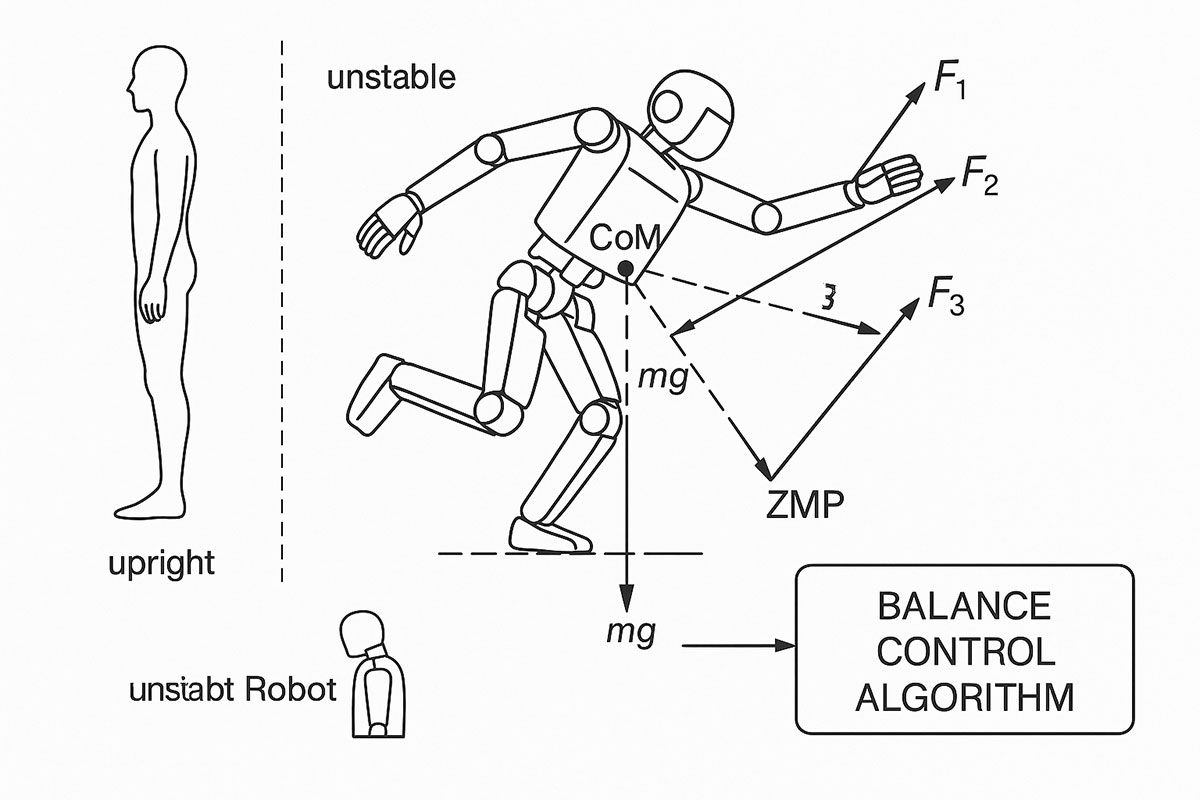

Russia's First AI Humanoid Robot Falls on Stage: The AIdol Disaster & What It Teaches About AI Robotics (November 2025)

On November 10, 2025, Russia's first humanoid AI robot, AIdol, became an instant internet sensation for all the wrong reasons—it collapsed face-first on its debut stage. While the viral video sparked laughter and memes online, the incident reveals profound truths about why humanoid robotics remains one of AI's greatest challenges.